Q1. What Are the Core Challenges of Answer Engine Optimization in 2025? [toc=Core AEO Challenges]

The evolution from traditional search to AI-driven answer engines represents more than an algorithm update - it's a complete paradigm shift in how information gets discovered and consumed online. Google's keyword-based SEO dominated for two decades, but platforms like ChatGPT, Perplexity, Claude, and Google's AI Overviews have fundamentally rewritten the rules. By 2028, over 50% of search traffic is projected to move from traditional engines to AI-native platforms, making Answer Engine Optimization (AEO) a mission-critical survival strategy for modern businesses.

⚠️ Why Traditional SEO Agencies Fail at AEO

Most legacy SEO agencies misunderstand AEO by treating it as "SEO 2.0" - applying outdated playbooks that simply don't work with Large Language Models (LLMs). Keyword stuffing, link schemes, and mass-generated content yield little to no improvement in generative engine optimization visibility because LLMs understand semantic meaning and query intent, not keyword density. Traditional agencies remain trapped in the commoditization cycle, obsessed with vanity metrics like impressions and page views while lacking specialized expertise in multi-platform optimization.

"There aren't any confirmed AEO tactics, LLMs and Google haven't shared their exact algorithms."

u/AgenticSEO, r/AgenticSEO Reddit Thread

The problem runs deeper than tactics: legacy agencies still measure success by traffic volume and rankings - metrics becoming obsolete in the zero-click era. When 60%+ of queries now return zero-click results, chasing page views becomes theater.

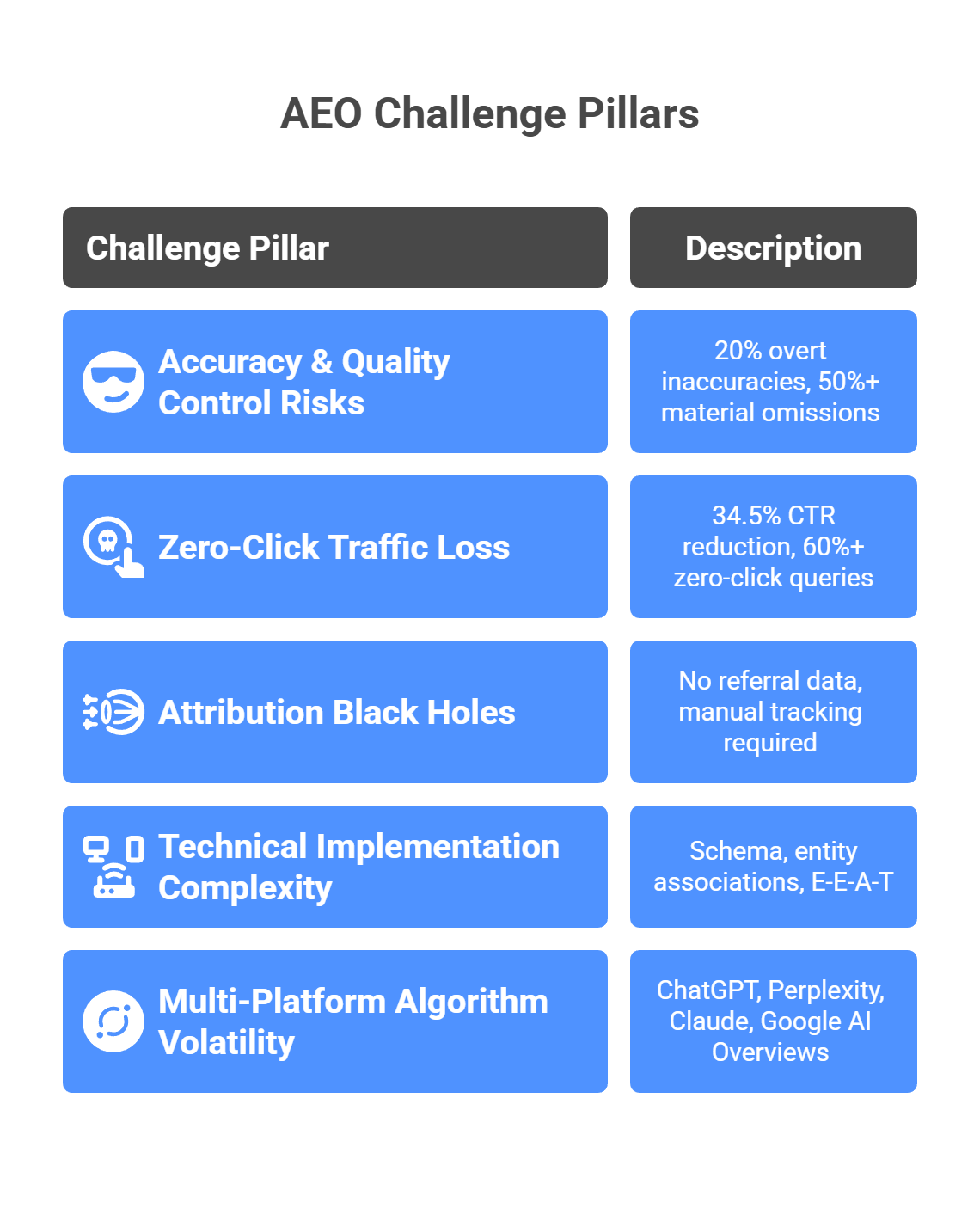

✅ The Five Core Challenge Pillars in AI Search

Answer Engine Optimization presents five distinct challenge categories that traditional SEO never faced:

1. Accuracy and Quality Control Risks - LLMs don't "know" facts; they predict text patterns. Studies show 20% overt inaccuracy rates and 50%+ material omissions in AI-generated content. When ChatGPT misquotes your content or generates hallucinated "facts" attributed to your brand, you lose control of your message - a brand reputation risk traditional SEO never posed.

2. Zero-Click Traffic Loss and Attribution Black Holes - AI Overviews reduce organic click-through rates by 34.5%, with Gartner predicting a 25% decline in traditional search engine usage by 2026. Success shifts from driving traffic to earning citations, but tracking which AI mentions influenced your pipeline becomes nearly impossible without specialized infrastructure.

"The real challenge is measuring context and positioning, not just whether you got cited."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

3. Technical Implementation Complexity - AEO requires trust engineering, not just technical optimization. Entity associations, E-E-A-T signals, structured data, and schema markup must work together to make your brand a "go-to source" for AI platforms. This goes far beyond traditional technical SEO's focus on page speed and crawlability.

4. Timeline and Resource Intensity - AEO demands 6-12 months to show measurable results versus traditional SEO's 3-6 month timeline. Trust signal accumulation (backlinks, entity associations, E-E-A-T) takes time, and LLM knowledge cutoffs mean new content isn't immediately indexed. Resource requirements also exceed SEO - mandatory SME involvement, multi-platform optimization, and manual tracking all increase operational overhead.

5. Multi-Platform Algorithm Volatility - Unlike Google's relatively transparent guidelines, each AI platform (ChatGPT, Perplexity, Claude, Google AI Overviews) operates with distinct, opaque algorithms that change without notice. The same question put to an LLM can yield different answers each time ("Track By Run" volatility), making consistent benchmarking nearly impossible.

💰 MaximusLabs' Trust-First Citation Engineering Solution

MaximusLabs addresses all five challenge pillars simultaneously through a fundamentally different approach: Trust-First Citation Engineering rather than algorithm manipulation. Where traditional agencies chase rankings, we engineer citation authority by:

E-E-A-T Integration - Mandatory Subject Matter Expert (SME) involvement in every content workflow ensures authentic first-hand experience that generic AI cannot replicate. This prevents the "authenticity deficit" causing algorithmic trust loss for mass AI content.

Earned AEO Strategies - Strategic acquisition of high-authority backlinks and third-party mentions (Reddit, G2, industry listicles) ensures your brand gets repeatedly cited by LLMs. This works especially well for early-stage SaaS companies seeking quick wins without waiting years for domain authority.

Search Everywhere Optimization - Building reputation beyond your website across review sites, community platforms, and industry mentions. AI engines build a 360-degree view of your brand, making off-site signals crucial.

Revenue-First Content Prioritization - Focus on Bottom-of-the-Funnel (BOFU) and Middle-of-the-Funnel (MOFU) content aligned with your Ideal Customer Profile (ICP) to influence pipeline, not chase vanity traffic. Data shows LLM traffic converts 6x better than traditional organic search.

Realistic Timeline Planning - Transparent 6-12 month expectations with milestone-based progress tracking using leading indicators (citation frequency) rather than lagging metrics (traffic) that mislead stakeholders.

This contrasts sharply with tool-obsessed competitors who only track visibility without solving root problems. Over 60 commodity AEO tracking tools exist, but experts predict mass churn as incumbents like Ahrefs and Semrush quickly copy these basic features.

🎯 Why This Isn't SEO 2.0 - It's a Different Discipline

"If your company is not on that list [AI's curated answer], then you're not in the buying conversation at all... you're invisible."

- Industry Expert

Generative Engine Optimization (GEO) methods can boost visibility by up to 40% in AI responses. The best-performing individual tactics include Quotation Addition and Statistics Addition, with combined methods (Fluency Optimization + Statistics) outperforming any single strategy. But tactics without strategy fail - the fundamental shift requires engineering trust across the entire web, not manipulating a single algorithm.

Traditional SEO asked: "How do we rank #1 on Google?" AEO asks: "How do we become the authoritative source AI engines cite?" That's not an evolution - it's a revolution requiring specialized partners who understand the new paradigm. Stop optimizing for Google. Start optimizing for trust.

Q2. Why Is Content Accuracy the #1 AEO Risk (And How to Control It)? [toc=Content Accuracy Risks]

The accuracy paradox defines modern AI search: the technology meant to provide instant, reliable answers often delivers confident misinformation instead. Large Language Models don't contain material knowledge - they're trained on vast data and merely predict text patterns based on probability, not truth. This fundamental mechanism creates brand reputation risks that traditional SEO never posed, because when AI gets your facts wrong, you don't control the correction.

⚠️ The Hallucination Crisis in AI-Generated Content


Studies reveal sobering statistics: while ChatGPT scores highly overall, 20% of generated content still contains overt inaccuracies, and over 50% has material omissions. This isn't a minor bug - it's a fundamental limitation of how LLMs operate. They don't "verify" information against a knowledge base; they statistically predict the most likely next word based on training data patterns.

"We used to treat AEO removals as bannable offenses because of the fact that we figured since our reports weren't getting attention, anything that was removed proactively by AEO had to be serious. Now we know better."

u/SaaS, r/SaaS Reddit Thread

The legal and compliance risks escalate dramatically in regulated industries. Copyright and plagiarism lawsuits are currently pending against LLM providers, with fundamental questions about whether AI-generated content that mimics writing styles constitutes infringement. For healthcare, finance, and legal brands, a single AI hallucination attributing false information to your company could trigger regulatory scrutiny or damage trust that took decades to build.

❌ The Authenticity Deficit: How Agencies Create the Problem

Most traditional agencies have accelerated the crisis by outsourcing content production to AI writers without human Subject Matter Expert (SME) oversight. This creates what industry experts call the "authenticity deficit" - generic AI content lacks first-hand experience, the critical "E" in Google's E-E-A-T (Experience, Expertise, Authoritativeness, Trustworthiness) framework.

AI cannot provide verifiable real-world application or unique human insight. Content created solely by AI gets labeled as low-value, generic material, leading to algorithmic trust loss that can take years to recover from and may never be fully reversed. Legacy agencies treat AI as a content factory to maximize output and margins, rather than as a strategic assistant requiring human engineering.

"Schema doesnt make you rank better - this is a GEO myth."

u/SEO, r/SEO Reddit Thread

The problem compounds when agencies apply outdated quantity-over-quality thinking. In the programmatic SEO era around 2007, mass auto-generated pages worked briefly, until Google introduced Panda and similar algorithms. History repeats: search providers must now combat generic AI content degrading search utility. If all results become ChatGPT derivatives regurgitating the same information, platforms lose their value proposition.

💡 When AI Gets It Wrong: Brand Impact You Can't Control

Traditional SEO's worst-case scenario was ranking for the wrong keyword. AEO's nightmare scenario is far worse: when AI misquotes your content or generates hallucinated "facts" attributed to your brand, you lose control of your message.

In regulated industries, this creates existential risks. Imagine a healthcare AI citing incorrect dosage information "according to [Your Medical Company]" - the liability exposure alone justifies treating accuracy as Priority #1. Yet quality control becomes external: you don't control ChatGPT's output, Perplexity's synthesis, or Claude's interpretation. You can only influence what you publish and hope LLMs extract it accurately.

"To me, it feels like AEO is nearly completely incapable of seeing context."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

Higher content standards are now table stakes, not differentiators. Every competitor can access the same AI tools, making proprietary data, first-hand experience, and verifiable expertise the only sustainable moats. Trustworthiness has become "the foundational and most critical component of the E-E-A-T framework" - without user trust, content has zero value regardless of optimization.

✅ MaximusLabs' E-E-A-T Guarantee Methodology

MaximusLabs eliminates the accuracy crisis through our E-E-A-T Guarantee methodology that treats AI as a "junior associate" requiring senior human oversight, not an autonomous content creator:

Mandatory SME Integration - Every piece of content involves Subject Matter Experts from your team or industry. This ensures authentic first-hand experience (the "E" in E-E-A-T) that generic AI cannot replicate. Real practitioners provide unique insights, proprietary data, and verifiable expertise that builds algorithmic trust.

Proprietary Data Integration - Incorporating client-specific research, case studies, original datasets, and first-party insights that no competitor's AI can access. This creates information gain - the key ranking factor for LLMs that prioritize "did you say something unique?" over keyword matching.

Verification Protocols - Multi-layer human review processes prevent algorithmic penalties. Before publication, content undergoes fact-checking, source verification, E-E-A-T scoring, and compliance review appropriate to your industry. This catches hallucinations and omissions that automated tools miss.

Accuracy Monitoring - Post-publication tracking of how AI platforms cite your content, with rapid response protocols when misquotes or inaccuracies appear. While you can't control LLM output, you can monitor citations and request corrections through platform-specific channels.

This contrasts sharply with agencies that rely on templated AI output, generic research, and minimal human oversight. The cost difference is real - human expertise costs more than AI automation - but the alternative is algorithmic trust loss that destroys years of SEO investment in months.

📉 Case Study: The Cost of Improper AI Content Use

Multiple companies have experienced major traffic drops from improper AI content use, with recovery timelines extending years. When search engines (and now AI platforms) detect mass AI-generated content lacking unique value, they apply quality penalties that compound over time.

The pattern follows Google's historical Panda algorithm impact: sites producing low-quality content at scale saw 50-90% traffic declines, with many never recovering. AI platforms face the same incentive structure - they must maintain answer quality or users will switch to competitors. This means penalizing sources that provide generic, unreliable, or hallucinated information.

Prevention strategies center on one principle: make it impossible for an LLM to mistake your content for generic AI output. First-hand experience, proprietary data, verifiable credentials, cited sources, and unique perspectives all signal authentic human expertise rather than AI regurgitation. The "Purple Cow Principle" applies: to thrive, content must be remarkable - like a giant purple cow in a field of boring brown cows. In the AI era, genuine human experience becomes the mandatory element of remarkable differentiation.

Stop treating AI as a content factory. Start treating it as a research assistant that helps human experts work faster - but never replaces their judgment, insight, or accountability. That's the only sustainable path to accuracy in the age of hallucinations. Learn more about our AI SEO approach.

Q3. The Zero-Click Problem: When Visibility Doesn't Equal Traffic [toc=Zero-Click Problem]

The zero-click revolution has transformed search's fundamental value proposition: being seen no longer means being visited. AI Overviews, featured snippets, and LLM summaries provide complete answers directly on the search results page, eliminating the need for users to click through to your website. This isn't a temporary trend - it's the new normal, with 34.5% organic CTR reduction documented when AI Overviews appear, and some verticals seeing 60%+ zero-click rates.

⏰ The Zero-Click Surge: Data That Can't Be Ignored

Gartner predicts a 25% decline in traditional search engine usage by 2026 due to generative AI. Recent reports put ChatGPT visitors up 80% since April, signaling massive user behavior shifts toward AI-first search experiences. Google's Search Generative Experience (SGE) pushes traditional organic results further down the page, with AI summaries occupying prime real estate that used to drive traffic.

"Most people are still doing manual checks because the tooling is pretty limited right now."

u/AskMarketing, r/AskMarketing Reddit Thread

Success metrics have fundamentally shifted from rankings and traffic to "Share of Answers" and citation authority. SEO asked "What position do we rank?" AEO asks "Which brands does the AI cite?" If your company isn't on that curated list of 1-3 sources, you're invisible in the buying conversation - even if you technically "rank" in traditional search results.

The business impact manifests across key metrics: reduced website sessions, lower engagement rates, and GA4 dashboards showing traffic declines despite growing brand visibility. CMOs face a paradox: their content appears in more AI answers than ever, yet website analytics suggest declining performance. Traditional attribution models break when the customer journey no longer includes a measurable click.

❌ Why Legacy Agencies Measure the Wrong Metrics

Traditional SEO agencies measure success by traffic volume and rankings - metrics becoming obsolete in the zero-click era. Their inability to quantify zero-click value leads to "AEO doesn't work" conclusions when traffic fails to materialize, causing premature abandonment of otherwise successful strategies.

"Some tools, while extremely expensive, were not really worth it or the ROI was not justifiable internally."

u/GrowthHacking, r/GrowthHacking Reddit Thread

Legacy agencies lack frameworks to monetize visibility without clicks, treating citations as vanity metrics rather than business impact indicators. They report "brand mention frequency" without tying it to revenue, leaving executives questioning the investment. When asked "What's the ROI?" they default to indirect metrics like "brand awareness lift" that don't satisfy CFOs demanding closed-loop attribution.

The problem stems from tool obsession over strategic thinking. Over 60 commodity AEO tracking tools exist, but most perform the same basic task: monitoring brand appearances across AI platforms. These tools answer "Are we being cited?" but not "Does it matter?" or "How much pipeline did it influence?" Without connecting visibility to business outcomes, agencies lose budget battles to channels with clearer ROI stories.

💸 Understanding Business Impact: Beyond Sessions and Clicks

Zero-click search fundamentally changes how to quantify marketing value. Traditional traffic-based ROI calculations measured sessions × conversion rate × average deal value. This formula breaks when sessions approach zero despite growing influence on purchase decisions.
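The traffic-based formula can be sketched in a few lines. This is a minimal illustration, not a reporting model: the function name and every figure below are hypothetical, chosen only to show how the estimate collapses as sessions fall.

```python
def traffic_revenue(sessions: int, conversion_rate: float, avg_deal_value: float) -> float:
    """Traditional estimate: sessions x conversion rate x average deal value."""
    return sessions * conversion_rate * avg_deal_value


# Hypothetical figures: same conversion rate and deal value, far fewer sessions.
before = traffic_revenue(sessions=10_000, conversion_rate=0.02, avg_deal_value=5_000.0)
after = traffic_revenue(sessions=500, conversion_rate=0.02, avg_deal_value=5_000.0)
print(f"before: {before:,.0f}  after: {after:,.0f}")
```

Because the estimate is a pure product, it can only fall in step with sessions. Any influence that happens without a click never enters the calculation.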

The new model requires quantifying brand awareness value, authority building, and pipeline influence separate from direct attribution. Research shows citation presence in AI answers influences 40% of closed deals despite generating zero direct traffic from AI engines. Buyers research using ChatGPT or Perplexity, see your brand cited as an authority, then visit your site directly (appearing as "direct traffic" in analytics) or search your brand name specifically.

"The truth is that AEO is so new, companies are still figuring out best practices."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

Conversion value differences also matter: when LLM traffic does click through, it converts 6x better than traditional organic search traffic. AI platforms pre-qualify users by providing comprehensive answers upfront. Users who click through after reading an AI summary demonstrate higher intent, having already educated themselves on basics and seeking deeper engagement or conversion.

The shift from transaction metrics to influence metrics requires new measurement frameworks that legacy agencies haven't developed. It's not enough to count citations - you must score citation quality, context, positioning, and co-mentions to estimate business impact.

✅ MaximusLabs' Zero-Click Value Quantification Framework

MaximusLabs reframes success around "Share of Answers" rather than "Share of Voice", measuring what matters in the zero-click era:

Citation Frequency Tracking - Monitoring how often your brand appears in AI responses across platforms (ChatGPT, Perplexity, Claude, Google AI Overviews) for target queries in your ICP's buying journey. Frequency indicates growing authority but requires context analysis to assess value.

Citation Position Scoring - Not all citations carry equal value. Being the primary source cited first carries more weight than appearing as one of five sources. Our scoring system weights citations by position, context (positive/neutral/negative framing), and prominence (direct quote vs. passing mention).

Brand Association Strength Analysis - Tracking which concepts, problems, and solutions AI platforms associate with your brand. Appearing for "best [category] solution" holds more value than generic product category mentions. Association analysis reveals whether you're building desired positioning.

Context Quality Assessment - Analyzing the surrounding context of citations to ensure favorable framing. A citation in a list of "top solutions" differs dramatically from one in a "companies facing challenges" discussion. Context determines whether visibility helps or hurts.

Pipeline Influence Modeling - Correlating citation visibility with pipeline generation using post-conversion surveys, brand search volume increases, and direct traffic lifts. This connects AEO investment to business outcomes that satisfy executive scrutiny.
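One way to picture the position, context, and prominence weighting described above is a small scoring function. The sketch below is an assumption-laden illustration, not the actual scoring system: the weight values, the `Citation` class, and the `score` function are all hypothetical, since the text names the factors but not their values.

```python
from dataclasses import dataclass

# Hypothetical weights; the factors come from the text, the numbers do not.
CONTEXT_WEIGHT = {"positive": 1.0, "neutral": 0.5, "negative": -1.0}
PROMINENCE_WEIGHT = {"direct_quote": 1.0, "passing_mention": 0.4}


@dataclass
class Citation:
    position: int    # 1 = first source cited in the answer
    context: str     # "positive", "neutral" or "negative"
    prominence: str  # "direct_quote" or "passing_mention"


def score(c: Citation) -> float:
    """Weight one citation by position, framing and prominence."""
    position_weight = 1.0 / c.position  # earlier citations count more
    return position_weight * CONTEXT_WEIGHT[c.context] * PROMINENCE_WEIGHT[c.prominence]


primary = Citation(position=1, context="positive", prominence="direct_quote")
buried = Citation(position=5, context="neutral", prominence="passing_mention")
print(score(primary), score(buried))
```

A multiplicative score like this makes a negatively framed citation count against the total rather than toward it, which is the point of scoring context at all.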

💰 Converting Zero-Click Visibility into Pipeline

Visibility without clicks doesn't mean visibility without value - it requires different conversion strategies:

Lead Magnets in AI Answers - Optimizing content to include compelling offers, research downloads, or tools that AI platforms cite alongside your brand mention. This turns citations into conversion opportunities even without immediate clicks.

Brand Building for Direct Traffic - Using AI citations to build authority that drives direct website visits and branded searches. Users exposed to your brand in 3-5 AI answers develop familiarity that drives later direct navigation.

BOFU Content Prioritization - Focusing optimization efforts on Bottom-of-the-Funnel content addressing high-intent queries. When users at purchase stage see your brand cited, the influence on pipeline exceeds top-of-funnel traffic volume.

Conversion Rate Optimization - When zero-click-exposed users do visit, they demonstrate 6x higher conversion intent. Optimizing for this audience through tailored landing pages, rapid value demonstrations, and frictionless conversions maximizes limited traffic value.

The psychology of authority building through AI citations mirrors traditional public relations: being featured by "trusted sources" (which LLMs now represent) builds credibility that influences future behavior even without immediate action. Stop measuring success by clicks. Start measuring it by influence, authority, and pipeline contribution. That's the only sustainable approach when being "the answer" matters more than getting "the visit." Explore our GEO measurement framework.

Q4. The Attribution Black Hole: Why Measuring AEO ROI Is Nearly Impossible [toc=Attribution Challenges]

The attribution crisis defines AEO's greatest operational challenge: you can prove visibility, but you can't prove causation. Unlike traditional SEO where Google Search Console, GA4, and rank trackers provide clear performance data, AI platforms offer virtually no referral data, no transparent metrics, and no equivalent analytics infrastructure. The gap between visibility and provable business impact grows wider each day, leaving marketers struggling to justify investments and executives questioning budget allocation.

🔍 The Measurement Infrastructure That Doesn't Exist

Traditional SEO provided comprehensive tracking: keyword rankings, SERP positions, click-through rates, landing page performance, assisted conversions, and closed-loop ROI attribution through GA4. Every metric answered questions executives actually ask: "Which keywords drive revenue? What's our organic channel ROI? How does SEO compare to paid search?"

AEO provides none of this. LLMs don't pass referral data. Perplexity citations don't appear in analytics platforms. ChatGPT mentions leave zero digital footprints in your marketing dashboard. The only measurement options are manual audits - literally asking AI platforms the same questions repeatedly and tracking brand mentions by hand - or commodity tracking tools that monitor surface-level visibility without attribution.

"Any tool claiming to monitor your AI visibility at this stage is, in my professional opinion, misleading at best and absolute snake oil at worst."

u/AskMarketing, r/AskMarketing Reddit Thread

Over 60 AEO tracking tools exist, but experts predict these will be quickly copied and commoditized by incumbents like Ahrefs and Semrush. More importantly, they solve the wrong problem: measuring visibility frequency without connecting it to pipeline or revenue leaves the ROI question unanswered.

Personalized results create "Track By Run" volatility - the same question asked in ChatGPT or Claude yields different answers each time, making competitive benchmarking unreliable. Your competitor sees different AI responses than you do. Regional variations, personalization algorithms, and timing all influence which sources get cited, eliminating the consistent ranking positions that made SEO measurement straightforward.
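Because a single query is not a stable measurement, one reasonable approach is to repeat the question and report how often each brand is cited across runs. The sketch below assumes the cited brands have already been extracted from each response; the `citation_rate` function and the brand names are placeholders.

```python
from collections import Counter


def citation_rate(runs: list[list[str]]) -> dict[str, float]:
    """Fraction of runs in which each brand was cited at least once."""
    # set() ensures a brand cited twice in one answer counts once for that run.
    counts = Counter(brand for cited in runs for brand in set(cited))
    return {brand: n / len(runs) for brand, n in counts.items()}


# Hypothetical results of asking the same question four times.
runs = [
    ["BrandA", "BrandB"],
    ["BrandA"],
    ["BrandB", "BrandC"],
    ["BrandA", "BrandC"],
]
rates = citation_rate(runs)
print(rates)
```

A rate over many runs is still only a sample from one location and one user profile, so it should be read as directional rather than as a ranking position.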

❌ How Agencies Measure What Doesn't Matter

Legacy SEO agencies still measure AEO success with metrics that don't apply: impressions, clicks, rankings. They report "Your brand was mentioned 47 times this month in AI responses" without explaining whether those mentions influenced pipeline, what context framed the citations, or how positioning compared to competitors.

"Stay heavily focused on traditional SEO - thats your foundation that should not change despite the AI evolution."

u/GrowthHacking, r/GrowthHacking Reddit Thread

Their lack of specialized tracking infrastructure means reporting vanity metrics - brand mention frequency - without tying results to revenue. When executives ask "What's the ROI?" agencies deflect with indirect proxies like "brand awareness scores" or "Share of Voice" that don't satisfy finance scrutiny. This inability to answer the fundamental ROI question leads to budget cuts as executives redirect spend toward channels with clearer attribution.

Traditional agencies also treat all citations equally regardless of context or intent. A mention in a "top 10 solutions" listicle holds vastly different value than appearing in a "common problems with [category]" discussion, yet basic tracking tools don't capture this nuance. Position matters - being cited first as the primary source differs dramatically from being one of seven sources listed at the end - but most measurement ignores citation quality in favor of quantity.

📊 What You Could Track in SEO vs. What's Impossible in AEO

The attribution gap becomes stark when comparing measurement capabilities.

Manual audits replace automated dashboards. Post-conversion surveys become the primary attribution method: adding "How did you hear about us?" fields that specifically include "ChatGPT," "Perplexity," "AI search" as options. This self-reported data suffers from recall bias, incomplete responses, and attribution ambiguity when buyers used multiple channels.
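Tallying those survey answers is simple. The sketch below shows one way to do it, assuming responses arrive as plain strings and skipped fields arrive as blanks. The `tally` function and the sample responses are hypothetical; the AI options are the ones named above.

```python
from collections import Counter

# Options named in the text; any other answer counts as non-AI.
AI_OPTIONS = {"ChatGPT", "Perplexity", "AI search"}


def tally(responses: list[str]) -> tuple[Counter, float]:
    """Return per-answer counts and the share of answered surveys naming an AI option."""
    answered = [r.strip() for r in responses if r and r.strip()]
    counts = Counter(answered)
    if not answered:
        return counts, 0.0
    ai = sum(n for source, n in counts.items() if source in AI_OPTIONS)
    return counts, ai / len(answered)


# Hypothetical responses; the blank string stands for a skipped field.
responses = ["ChatGPT", "Google search", "", "Perplexity", "Referral"]
counts, ai_share = tally(responses)
print(counts, ai_share)
```

Excluding blanks from the denominator keeps incomplete responses from diluting the share, but it does nothing about recall bias or multi-channel ambiguity.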

Highly personalized AI results mean your internal team sees different citations than prospects do. You can't simply query ChatGPT yourself to verify visibility - geographic location, conversation history, personalization algorithms, and platform updates all influence which sources get cited. Reliable tracking requires distributed testing across locations, devices, and user profiles, dramatically increasing operational complexity.

✅ MaximusLabs' Revenue-First Attribution Methodology

MaximusLabs solves the attribution black hole through our Revenue-First AEO framework that prioritizes pipeline influence over traffic vanity metrics:

BOFU/MOFU Content Prioritization - Focusing optimization on Bottom-of-the-Funnel and Middle-of-the-Funnel content that influences buyers near purchase decisions. When AI platforms cite your content for high-intent queries, the pipeline impact exceeds that of generic informational queries, even when those queries do generate clicks.

Post-Conversion Survey Integration - Systematically asking "How did you hear about us?" with specific AI platform options (ChatGPT, Perplexity, Claude, Google AI Overviews, other AI search). This captures self-reported attribution data that, while imperfect, provides directional insights traditional analytics miss entirely.

Brand Mention Tracking with Context Analysis - Monitoring citations across platforms while scoring context quality: Is the mention positive/neutral/negative? What position (first cited vs. fifth)? Which concepts is your brand associated with? Co-mentions with which competitors? Context transforms raw mention counts into meaningful business intelligence.

Citation Quality Scoring - Weighting citations by position, prominence (direct quote vs. passing reference), sentiment, and query intent. A citation as "the leading [category] solution" for a buyer-intent query scores higher than a generic mention in an educational answer.

Pipeline Influence Modeling - Correlating citation visibility with pipeline metrics: deal velocity changes, average contract value shifts, closed-won rates by source, and time-to-close variations. When sales teams report "prospects mentioned seeing us in ChatGPT," that qualitative signal combines with quantitative pipeline data to model AEO's contribution.

6x Conversion Value Justification - Leveraging research showing LLM traffic converts 6x better than traditional organic search. Even reduced traffic volume delivers comparable revenue when conversion rates multiply, justifying continued investment despite lower session counts.
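The arithmetic behind that justification is short: if each session converts k times better, matching the same number of conversions takes only 1/k of the sessions. The sketch below assumes deal value is unchanged between channels, which the source does not state; the function name and session figure are hypothetical.

```python
def break_even_sessions(organic_sessions: float, conversion_multiplier: float) -> float:
    """Sessions needed to match organic conversions when each session converts
    `conversion_multiplier` times better (deal value assumed equal)."""
    return organic_sessions / conversion_multiplier


# Hypothetical baseline of 6,000 organic sessions and the 6x multiplier cited above.
needed = break_even_sessions(organic_sessions=6_000, conversion_multiplier=6.0)
print(needed)
```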

💡 Reframing ROI for the Zero-Click Era

The financial impact of lost attribution extends beyond measurement difficulty - it creates existential budget risk. When channels can't prove ROI conclusively, they get defunded during economic downturns or budget reallocations. AEO's attribution void means marketers must reframe success around influence metrics, leading indicators, and proxy measurements that satisfy executive scrutiny despite imperfect data.

The multibillion-dollar businesses in this space won't be tracking tools - they'll be execution partners who can prove they cause significantly better outcomes despite measurement challenges. MaximusLabs bridges the attribution black hole through proprietary methodologies that connect visibility to revenue, transforming "we're being cited" into "we're driving pipeline." That's the only sustainable path when proving ROI becomes harder than generating results. Learn about calculating ROI for GEO initiatives.

Q5. What Are the Technical Implementation Barriers for AEO Success? [toc=Technical Implementation Barriers]

Answer Engine Optimization demands a fundamentally different technical foundation than traditional SEO. While legacy technical optimizations like page speed, mobile responsiveness, and clean code remain necessary, they're insufficient for AI platform visibility. AEO requires architecting trust signals at every technical layer, enabling autonomous AI agents to extract, understand, and cite your content accurately across ChatGPT, Perplexity, Claude, and Google's AI Overviews.

📋 Schema Markup and Structured Data Requirements

Schema markup transitions from "nice to have" to mission-critical in the AEO era. While some practitioners argue "schema doesn't make you rank better - this is a GEO myth", the reality is more nuanced: schema doesn't directly boost rankings, but it enables accurate AI interpretation and extraction.

"People think of schema as this magic bullet, it's not. But if you're already invested in content, PR etc you don't want anything left up to the LLMs interpretation. That's where schema shines."

u/SEO, r/SEO Reddit Thread

✅ Critical Schema Types for AEO

FAQ Schema - Structures question-answer pairs for direct AI extraction. Individual FAQ items perform better than grouped schemas.

Article Schema - Enables proper content attribution with author credentials, publish dates, and topical categorization.

Organization Schema - Establishes entity identity with official name, logo, social profiles, and contact information.

HowTo Schema - Structures instructional content for step-by-step AI reproduction.

Product/Service Schema - Details offerings with pricing, availability, and specifications for commercial queries.

The technical implementation extends beyond adding markup tags. Your structured data must accurately reflect content semantics without manipulation attempts that LLMs detect and discount.
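As a minimal sketch of the FAQ markup described above, the Python below builds a schema.org FAQPage object as JSON-LD and checks that each marked-up answer actually appears in the page's visible text, since structured data should mirror the content rather than embellish it. The helper names (`build_faq_jsonld`, `answers_match_page`) and the sample question are illustrative assumptions, not part of any library.

```python
import json


def build_faq_jsonld(pairs):
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


def answers_match_page(faq, page_text):
    """Return True only if every marked-up answer appears verbatim in the page text."""
    return all(
        item["acceptedAnswer"]["text"] in page_text for item in faq["mainEntity"]
    )


# Hypothetical sample content for illustration only.
page_text = "What is AEO? AEO is the practice of optimizing content for AI answer engines."
faq = build_faq_jsonld(
    [("What is AEO?", "AEO is the practice of optimizing content for AI answer engines.")]
)

# The serialized object would be placed inside a <script type="application/ld+json"> tag.
print(json.dumps(faq, indent=2))
print(answers_match_page(faq, page_text))
```

Generating the markup from the same source as the visible copy is one way to keep the two from drifting apart.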

🔗 Entity Associations and Knowledge Graph Integration

AI platforms prioritize sources with verified entity associations - connections to recognized people, organizations, concepts, and locations that validate authority. Building these associations systematically is a core part of technical SEO optimization for AEO:

Author Entity Building - Real people with verifiable credentials create stronger trust signals than anonymous authors. Technical requirements include author profile pages with biographical information, social proof, publication history, and external validation through platforms like LinkedIn, Twitter, and industry directories.

"Also make sure you've got clear authorship on your content."

u/AskMarketing, r/AskMarketing Reddit Thread

External Validation Requirements - AI engines often require external validation before recommending brands by name. This means technical infrastructure supporting PR distribution, industry mentions, third-party reviews, and community platform participation.

"I'd look at press releases and getting mentioned in industry news or publications."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

Entity Disambiguation - When multiple entities share names, AI platforms need clear signals distinguishing your brand. Technical solutions include unique identifiers (Wikidata IDs, corporate registration numbers), consistent NAP (Name, Address, Phone) across all properties, and schema markup specifying sameAs relationships to authoritative profiles.
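The disambiguation signals above can be sketched in two parts: an Organization object whose `sameAs` property points at authoritative profiles, and a small check that NAP details agree across listings. This is a rough illustration; the organization, URLs, Wikidata ID, and helper names are all placeholders assumed for the example.

```python
import json
import re


def normalize_nap(name, address, phone):
    """Normalize a Name/Address/Phone triple so listings can be compared."""
    def squash(text):
        return re.sub(r"\s+", " ", text).strip().lower()

    # Keep only digits in the phone number so formatting differences are ignored.
    return (squash(name), squash(address), re.sub(r"\D", "", phone))


def nap_is_consistent(listings):
    """True when every listing normalizes to the same NAP triple."""
    return len({normalize_nap(*listing) for listing in listings}) <= 1


# Hypothetical organization; every name, URL, and ID below is a placeholder.
organization = {
    "@context": "https://schema.org",
    "@type": "Organization",
    "name": "Example Co",
    "url": "https://www.example.com",
    "sameAs": [
        "https://www.wikidata.org/wiki/Q00000000",
        "https://www.linkedin.com/company/example-co",
    ],
}

listings = [
    ("Example Co", "1 Main St, Springfield", "+1 (555) 010-0000"),
    ("example co", "1  Main St,  Springfield", "15550100000"),
]

print(json.dumps(organization, indent=2))
print(nap_is_consistent(listings))
```

The normalization here is deliberately simple (case, whitespace, phone punctuation); real listings may also need abbreviation handling such as "St" versus "Street".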

⚙️ Site Architecture and Content Accessibility

AI crawlers behave differently than traditional search bots. Technical barriers that don't affect Googlebot can completely block LLM content extraction:

JavaScript Rendering Limitations - Many AI crawlers fetch raw HTML and do not execute JavaScript, creating a critical blind spot for many modern sites. Content that only appears after client-side rendering, such as JavaScript-framework output or lazy-loaded sections, can be invisible to AI platforms unless it is pre-rendered or server-rendered.

"LLMs don't index JS on webpages, so beware of that pre-render."

u/SEO, r/SEO Reddit Thread
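One rough way to test for this blind spot is to look at the raw HTML a crawler would receive and check whether key phrases are present without running any scripts. The sketch below uses only Python's standard `html.parser`; the class and function names and the sample markup are assumptions made for illustration, and it says nothing about how any particular AI crawler actually behaves.

```python
from html.parser import HTMLParser


class RawTextExtractor(HTMLParser):
    """Collect text present in the HTML source, ignoring <script> and <style> bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def missing_without_js(raw_html, phrases):
    """Return the phrases that do not appear in the un-rendered HTML text."""
    parser = RawTextExtractor()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in phrases if phrase.lower() not in text]


# Hypothetical page: the heading is in the HTML, the plan name is injected by a script.
raw_html = (
    "<html><body><h1>Pricing</h1><div id='app'></div>"
    "<script>render('Enterprise plan');</script></body></html>"
)
print(missing_without_js(raw_html, ["Pricing", "Enterprise plan"]))
```

Any phrase the function returns exists only after client-side rendering, which makes it a candidate for pre-rendering or server-side rendering.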

Content Structure Optimization - AI platforms extract information more successfully from clearly structured content with semantic HTML hierarchy:

- Descriptive H2/H3 headings that function as standalone questions

- Short paragraphs (2-4 sentences) enabling clean extraction

- Bulleted/numbered lists for step-by-step processes

- Tables presenting comparative data

- Definition lists for terminology clarification
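The first two checklist items lend themselves to a quick automated audit. The sketch below flags headings that are not phrased as questions and paragraphs longer than four sentences; the function names are hypothetical, and the sentence splitter is deliberately naive (abbreviations such as "e.g." will be over-counted).

```python
import re

MAX_SENTENCES = 4  # upper bound of the 2-4 sentence guideline


def sentence_count(paragraph):
    """Count sentences by splitting on whitespace that follows ., ! or ?."""
    parts = re.split(r"(?<=[.!?])\s+", paragraph.strip())
    return len([part for part in parts if part])


def audit_structure(headings, paragraphs):
    """Return a list of human-readable structure issues."""
    issues = []
    for heading in headings:
        if not heading.strip().endswith("?"):
            issues.append(f"heading is not a question: {heading!r}")
    for index, paragraph in enumerate(paragraphs, start=1):
        count = sentence_count(paragraph)
        if count > MAX_SENTENCES:
            issues.append(f"paragraph {index} has {count} sentences")
    return issues


# Hypothetical sample content.
headings = ["What is AEO?", "Overview"]
paragraphs = ["One. Two. Three.", "A. B. C. D. E."]
for issue in audit_structure(headings, paragraphs):
    print(issue)
```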

Interactive Element Simplification - Fancy UI patterns degrade AI extractability. Avoid accordions hiding content by default, rotating carousels obscuring information, modal popups requiring interaction, and tab interfaces separating related content.

"Simplify content presentation - ie no fancy accordions or rotating text."

u/SEO, r/SEO Reddit Thread

🎯 E-E-A-T Technical Integration

Google's E-E-A-T framework (Experience, Expertise, Authoritativeness, Trustworthiness) requires technical implementation beyond content quality. Technical AEO embeds trust signals in site architecture:

Technical E-E-A-T Signals:

- SSL certificates (HTTPS) and security badges

- Privacy policy and terms of service pages

- About Us pages with team credentials and company history

- Contact information with verified business addresses

- Professional email domains (not free Gmail/Yahoo accounts)

- Industry certifications and compliance badges

- Customer testimonials with attribution

- Case studies and portfolio evidence

The technical challenge involves making these signals machine-readable and verifiable. Schema markup, structured citations, and authoritative backlinks all contribute to algorithmic trust calculation.

💡 How MaximusLabs Simplifies Technical AEO Implementation

MaximusLabs eliminates technical implementation complexity through comprehensive AEO-ready site audits and systematic optimization. Our technical foundation service includes schema markup implementation across all critical content types, entity association building with author profile creation and external validation coordination, JavaScript rendering solutions ensuring AI crawler accessibility, and E-E-A-T signal integration throughout site architecture. Rather than requiring clients to navigate conflicting technical guidance, we provide turnkey solutions that work across ChatGPT, Perplexity, Google AI Overviews, and emerging platforms - future-proofed through universal trust signals that transcend individual algorithm changes.

Q6. Why Legacy SEO Tactics Fail in Generative Engine Optimization [toc=Legacy SEO Failures]

The tactics that dominated SEO for two decades have become not just ineffective but actively harmful in the age of AI search. Keyword stuffing, mass-generated pages, link schemes, and algorithm manipulation yield "little to no improvement" in generative engine visibility because Large Language Models understand semantic meaning and query intent, not keyword density patterns. What worked in 2015 can destroy your visibility in 2025 - a reality many agencies refuse to acknowledge.

❌ The Failure of Traditional Manipulation Tactics

The 2007 programmatic SEO playbook - mass auto-generated landing pages targeting every keyword variation - worked until Google introduced the Panda update in 2011 to combat thin and scraped content degrading search utility. History repeats itself now as AI platforms face the same threat: if all results become ChatGPT derivatives regurgitating identical information, the platforms lose their value proposition.

"There aren't any confirmed AEO tactics, LLMs and Google haven't shared their exact algorithms."

u/AgenticSEO, r/AgenticSEO Reddit Thread

Legacy tactics failing in the LLM era include:

Keyword Stuffing - LLMs analyze semantic context, not keyword frequency. Content artificially packed with exact-match phrases reads as unnatural to AI, triggering quality filters rather than improving visibility.

Scraped/Duplicate Content - AI platforms actively combat generic content that doesn't add unique value. When training data includes your content alongside thousands of similar articles, originality determines citation likelihood.

Mass Auto-Generated Pages - Programmatic page generation at scale worked when algorithms couldn't assess content quality individually. LLMs evaluate each response for "information gain" - did you say something unique that warrants citation? Generic pages generated from templates fail this test.

Link Schemes - While high-quality backlinks remain crucial for authority building, manipulative link schemes (private blog networks, or PBNs; paid links without disclosure; reciprocal linking networks) get detected and discounted by both search engines and AI platforms analyzing link graph patterns.

⚠️ Why Agencies Remain Stuck in a 2015 SEO Mindset

Traditional SEO agencies struggle to adapt because their business models, toolsets, and expertise center on outdated optimization paradigms. Tool obsession replaces strategic thinking: teams focus on Core Web Vitals optimization while ignoring entity associations, and treat AEO as "SEO with AI keywords" rather than recognizing the fundamental shift required.

"Stay heavily focused on traditional SEO - that's your foundation that should not change despite the AI evolution."

u/GrowthHacking, r/GrowthHacking Reddit Thread

This advice reflects the problem: while traditional SEO remains important, treating it as unchanging ignores how AI platforms fundamentally alter what "optimization" means. Agencies applying Google-only playbooks to multi-platform AI search miss that:

- ChatGPT favors conversational depth - Long-form, nuanced content outperforms keyword-optimized snippets

- Perplexity prioritizes academic citations - Source diversity and verification matter more than traditional authority signals

- Claude emphasizes recency and accuracy - Outdated content gets filtered regardless of SEO strength

- Google AI Overviews blend signals - Featured snippet structure + brand authority + user engagement

The inability to adapt across platforms simultaneously reveals agencies' single-channel expertise hitting its limits.

🌪️ Algorithm Volatility and the Unpredictability Problem

Traditional SEO operated in a relatively transparent environment - Google published guidelines, communicated major updates, and provided Search Console data for troubleshooting. AI platforms offer none of this stability. The same question asked in ChatGPT can yield a different answer on each run (run-to-run volatility), so a single check says little about visibility and consistent optimization becomes far harder.

Algorithm unpredictability manifests as:

- No public guidelines - Unlike Google's documented ranking factors, AI platforms don't explain citation logic

- Constant platform updates - Models retrain regularly without announcement or recourse for affected sites

- Personalized results - User history, location, and context influence citations, eliminating universal benchmarks

- Cross-platform divergence - Strategies working for ChatGPT may fail for Perplexity or Claude
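Because answers vary from run to run, a single spot check is weak evidence. A simple way to reason about this is to repeat the same prompt several times and report the fraction of runs that cite you. The sketch below assumes the cited domains for each run have already been collected by hand or by some other means; `citation_rate` and the sample data are hypothetical.

```python
def citation_rate(runs, domain):
    """Fraction of runs in which `domain` appears among the cited sources."""
    if not runs:
        return 0.0
    hits = sum(1 for cited in runs if domain in cited)
    return hits / len(runs)


# Hypothetical data: the set of domains cited in each of four repeated runs.
runs = [
    {"example.com", "other.org"},
    {"other.org"},
    {"example.com"},
    set(),
]
print(citation_rate(runs, "example.com"))
```

Tracking a rate over many runs, rather than a yes/no from one run, gives a benchmark that is less sensitive to the volatility described above.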

"The truth is that AEO is so new, companies are still figuring out best practices."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

This volatility exposes tactical optimization's fundamental weakness: when tactics optimize for specific algorithms that change without notice, the work becomes worthless overnight. Strategic optimization - building genuine authority, topical expertise, and trust signals - survives platform changes because it targets universal principles rather than exploiting temporary algorithm quirks.

✅ MaximusLabs' AI-Native Strategic Approach

MaximusLabs recognizes LLMs as fundamentally different engines requiring strategy over tactics. Our AI-native optimization approach focuses on earned AEO through strategic citation acquisition from high-authority third-party sources - Reddit discussions, G2 reviews, industry listicles, expert roundups, news mentions - that AI platforms reference regardless of algorithm changes.

Core Strategic Elements:

Topical Authority Building - Becoming the definitive expert in narrow niches rather than spreading efforts across broad categories. When AI platforms need authoritative sources on specific subjects, specialized expertise wins over generalist content.

Information Gain Optimization - Every piece of content must answer "did you say something unique?" Original research, proprietary data, first-hand experience, and contrarian perspectives create citability that generic content lacks.

Fluency + Statistics Addition - Research shows combining multiple GEO methods outperforms single tactics. Fluency optimization (natural, conversational writing) combined with statistics addition (data-backed claims) ranks among the best-performing approaches, boosting visibility up to 40%.

Universal Trust Signals - Rather than chasing platform-specific tactics, we build trust foundations that work everywhere: genuine E-E-A-T implementation, quality backlinks from authoritative domains, verified entity associations, consistent cross-platform presence, and community validation.

Search Everywhere Optimization - Extending beyond LLMs to YouTube, Reddit, TikTok, LinkedIn, and emerging platforms where buyers actually search. This diversification mitigates algorithm volatility risk - when one platform changes, others maintain visibility.

📊 Why Future-Proof Strategies Win

"If you do AEO you're just talking about the LLM. But if you're talking about search everywhere you're talking about YouTube, Reddit, all these other things."

- Expert Quote

GEO methods can boost visibility by up to 40% in AI responses, with quotation addition and statistics addition as top individual performers. Combining methods (fluency optimization + statistics) outperforms any single tactic. But the real insight lies in why these methods work: they create genuine value that survives algorithm changes.

Future-proof strategies focus on fundamentals - trust, authority, uniqueness - that transcend platform specifics. When ChatGPT updates its model or Perplexity adjusts citation logic, sites built on authentic expertise maintain visibility while tactical optimizers scramble to reverse-engineer new algorithm quirks. Stop manipulating algorithms. Start building the authority that makes AI platforms compete to cite you. That's the only sustainable path forward with our GEO strategy framework.

Q7. Multi-Platform Complexity: Optimizing for ChatGPT, Perplexity, and Google Simultaneously [toc=Multi-Platform Optimization]

The multi-engine reality defines modern search: ChatGPT traffic surged 80% since April, Perplexity serves millions of queries monthly, Claude grows steadily, Google AI Overviews dominate traditional SERPs, and Bing Copilot captures enterprise users. Each platform operates with distinct algorithms, citation mechanisms, and content preferences - unlike Google's relatively static results where optimization principles remained consistent for years. This platform diversity creates resource allocation nightmares and strategic complexity that traditional agencies aren't equipped to handle.

🔄 Platform-Specific Requirements and Citation Mechanisms

Each AI engine prioritizes different content characteristics and citation styles, demanding tailored optimization approaches:

ChatGPT Optimization - Favors conversational depth, recent information, and natural language patterns. Citations appear as inline references with occasional source links. Content structured as dialogue or Q&A format performs well. Recency bias means constantly updating content maintains visibility.

Perplexity Optimization - Prioritizes academic rigor, source diversity, and citation density. Frequently provides numbered references and source lists. Content with embedded citations to authoritative sources, data-backed claims, and technical depth earns prominent placement. Behaves more like academic search than conversational AI.

Google AI Overviews - Emphasizes featured snippet structure, brand authority, and user engagement signals. Combines traditional SEO factors with generative capabilities. Content optimized for featured snippets (clear answers, structured format, concise explanations) transitions well to AI Overviews.

Claude Optimization - Focuses on accuracy, context understanding, and nuanced responses. Often regarded as less prone to hallucinations than competitors, which raises the bar for factual precision in source content. Detailed, well-researched content with clear sourcing performs best.

"The biggest distinction being how LLMs favor long-tails since they are more conversational and more backlinks for more authority as well."

u/SEO, r/SEO Reddit Thread

These platform differences create optimization conflicts - content structured for ChatGPT's conversational style may lack the citation density Perplexity prefers, while Perplexity-optimized academic content feels stiff for Google AI Overviews' snippet-focused extraction.

❌ Why Single-Platform Optimization Misses 80% of Opportunity

Traditional SEO agencies remain Google-obsessed, applying two decades of Google-specific expertise while ignoring the 80% of AI search volume occurring elsewhere. This myopia stems from a lack of multi-platform testing infrastructure, an inability to prioritize platforms based on Ideal Customer Profile (ICP) behavior, and a habit of treating all LLMs identically despite their distinct requirements.

"Any tool claiming to monitor your AI visibility at this stage is, in my professional opinion, misleading at best and absolute snake oil at worst."

u/AskMarketing, r/AskMarketing Reddit Thread

The resource allocation challenge compounds platform complexity. Covering ChatGPT, Perplexity, Claude, Google AI Overviews, and Bing Copilot simultaneously requires 5x the testing effort compared to Google-only SEO. Agencies either spread resources too thin - monitoring all platforms superficially rather than focusing where their ICP actually searches - or concentrate only on Google and miss high-intent buyers using AI-first search.

Competitive landscapes differ dramatically across platforms. You might dominate ChatGPT citations while remaining invisible on Perplexity for the same queries. Without platform-specific tracking, you can't identify these gaps or capitalize on platform-specific opportunities.
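To make the gap-finding idea concrete, the sketch below takes per-platform observations (whether your brand was cited in each test run for the same query set) and returns the platforms where the citation rate falls below a chosen threshold. The function name, the 0.2 threshold, and the sample observations are assumptions for illustration only.

```python
def platform_gaps(observations, threshold=0.2):
    """Return {platform: citation_rate} for platforms below `threshold`."""
    gaps = {}
    for platform, cited_flags in observations.items():
        # True counts as 1 and False as 0, so sum() gives the number of cited runs.
        rate = sum(cited_flags) / len(cited_flags) if cited_flags else 0.0
        if rate < threshold:
            gaps[platform] = rate
    return gaps


# Hypothetical observations: one boolean per test run, True if the brand was cited.
observations = {
    "ChatGPT": [True, True, False, True],
    "Perplexity": [False, False, False, False],
    "Google AI Overviews": [True, False, False, False],
}
print(platform_gaps(observations))
```

In this made-up sample the brand is cited in most ChatGPT runs but never on Perplexity, which is the kind of gap platform-specific tracking is meant to surface.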

🎯 Strategic Platform Prioritization Framework

Rather than spray-and-pray optimization across all platforms equally, strategic prioritization requires understanding where your Ideal Customer Profile (ICP) actually searches:

B2B SaaS Buyers - Heavily use ChatGPT for research, LinkedIn for social proof, Reddit for authentic reviews, YouTube for product demonstrations

E-commerce Shoppers - Perplexity for product comparisons, Google AI Overviews for quick answers, TikTok for discovery, Reddit for recommendations

Enterprise Decision-Makers - Bing Copilot (integrated with Microsoft ecosystem), Google AI Overviews, industry-specific platforms

Technical Audiences - Claude for detailed analysis, Stack Overflow for problem-solving, GitHub for code examples, specialized forums

✅ MaximusLabs' Search Everywhere Optimization Methodology

MaximusLabs solves multi-platform complexity through Search Everywhere Optimization - a methodology covering ChatGPT, Perplexity, Reddit, YouTube, TikTok, LinkedIn, and emerging platforms, not just Google. Our approach combines strategic prioritization with universal trust signals that work across all engines.

Multi-Platform Testing Infrastructure - Standardized evaluation criteria measuring citation frequency, positioning, context quality, and competitive benchmarking across platforms simultaneously. This infrastructure reveals platform-specific opportunities traditional agencies miss.

ICP-Based Prioritization - Rather than optimizing all platforms equally, we analyze where your buyers actually search and concentrate resources accordingly. A developer tools company might prioritize GitHub and Stack Overflow over TikTok, while a consumer brand reverses this focus.

Universal Trust Signal Focus - Instead of chasing platform-specific tactics that change frequently, we build foundations that work everywhere: E-E-A-T implementation, quality backlinks from authoritative domains, entity associations with recognized authorities, topical authority in narrow niches, and consistent cross-platform presence.

Strategic Resource Allocation - Balancing quick wins (platforms where you can gain visibility rapidly) with long-term authority building (domains requiring sustained effort). This prevents the spray-and-pray trap while ensuring no critical platform gets neglected.

🌐 Blue Ocean Market Expansion

"If you do AEO you're just talking about the LLM. But if you're talking about search everywhere you're talking about YouTube, Reddit, all these other things."

- Expert Quote

This quote captures the strategic insight: AI search isn't replacing Google in zero-sum competition - it's expanding the total addressable market for search visibility. The pie grows larger, creating blue ocean opportunities rather than red ocean competition. This expansion mitigates platform risk: when search diversifies across 5-7 platforms instead of a Google monopoly, no single platform controls your destiny.

The platform prioritization matrix varies by industry and ICP, but the principle remains universal: optimize where your buyers search, not where your agency has expertise. That requires multi-platform capabilities traditional agencies can't deliver. MaximusLabs provides that infrastructure with competitive GEO analysis, turning platform complexity from an overwhelming challenge into a competitive advantage.

Q8. The Content Simplification Paradox: Balancing Conciseness with Authority [toc=Content Simplification Paradox]

AI platforms prefer extractable content - clear answers, simple language, structured format - that LLMs can parse and reproduce accurately. Yet oversimplifying content risks losing depth, nuance, and authority that builds trust with both human readers and algorithmic evaluators. This tension creates the content simplification paradox: how do you make content concise enough for AI extraction while maintaining the comprehensiveness that signals genuine expertise?

📝 Why AI Platforms Favor Concise, Extractable Content

Large Language Models excel at extracting structured information from well-organized content. When generating responses, LLMs prioritize sources offering clear, direct answers over lengthy, meandering explanations. Technical factors driving this preference include:

Parsing Efficiency - LLMs process content sequentially. Concise answers appearing early in content have higher extraction likelihood than insights buried in paragraph 15.

Context Window Limitations - AI models operate with finite context windows (the amount of text they can consider simultaneously). Concise content fits entirely within context windows, while lengthy articles may get truncated.

Answer Confidence - When multiple sources provide similar information, LLMs cite sources expressing answers most clearly and definitively. Hedging language and excessive qualifications reduce citation probability.

"Structure your content so AI can easily pull from it. Use clear headings that are actual questions. Add FAQ sections. Make lists and tables. Keep paragraphs short."

u/SEO, r/SEO Reddit Thread

This guidance reflects real technical constraints. AI crawlers extracting content for knowledge bases prefer semantic HTML with descriptive headings, short paragraphs (2-4 sentences), bulleted/numbered lists, comparison tables, and FAQ-structured Q&A pairs.

⚠️ The Risks of Oversimplification

Simplifying content for AI extraction carries genuine downsides that can undermine authority and trust:

Loss of Nuance - Complex topics require nuanced explanations acknowledging edge cases, exceptions, and contextual dependencies. Oversimplification can misrepresent reality, damaging credibility when readers encounter real-world complexity.

Authority Signals Diminished - Comprehensive, detailed content demonstrates deep expertise. Surface-level summaries may get extracted more easily but signal less authority to both human readers and algorithmic trust evaluators.

Competitive Differentiation Lost - When everyone optimizes for the same concise format, content becomes commoditized. Depth and unique perspectives provide differentiation that generic summaries lack.

E-E-A-T Implications - Google's E-E-A-T framework emphasizes first-hand experience and demonstrated expertise. Brief, simplified content often lacks the detail proving genuine experience.

"To me, it feels like AEO is nearly completely incapable of seeing context."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

This observation highlights a critical limitation: AI platforms struggle with context, nuance, and satire. Content requiring contextual understanding may get misinterpreted or ignored entirely, creating incentives for oversimplification that can sacrifice accuracy.

✅ Strategic Balance: The Inverted Pyramid + Deep Dive Approach

The optimal solution combines extractable summaries with comprehensive depth through inverted pyramid content structure:

Layer 1: Direct Answer (50-100 words) - Immediately after the H2 heading, provide a concise, definitive answer to the question. This satisfies AI extraction needs and serves as potential featured snippet content.

Layer 2: Core Explanation (200-300 words) - Expand the direct answer with essential context, key considerations, and primary factors. Use clear headings, short paragraphs, and bulleted lists for maximum extractability.

Layer 3: Deep Dive (400+ words) - Provide comprehensive analysis with nuance, edge cases, examples, data, and expert insights. This layer demonstrates genuine expertise and authority while serving readers seeking depth.

Layer 4: Practical Application - Conclude with actionable steps, implementation guidance, or decision frameworks. This provides tangible value beyond information alone.

This structure lets AI platforms extract Layer 1-2 for concise answers while human readers and authority evaluators appreciate Layer 3-4 depth. Both needs get satisfied without compromise.
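The word-count targets for the first three layers are easy to check mechanically. The sketch below is one possible linting helper, not a prescribed tool: the layer keys and `check_layers` are hypothetical names, the ranges come from the layer descriptions above, and Layer 4 is left out because no length is given for it.

```python
# Word-count ranges taken from the layer descriptions; None means no upper bound.
LAYER_WORD_RANGES = {
    "direct_answer": (50, 100),
    "core_explanation": (200, 300),
    "deep_dive": (400, None),
}


def check_layers(layers):
    """Return {layer_name: word_count} for layers outside their target range."""
    problems = {}
    for name, (low, high) in LAYER_WORD_RANGES.items():
        count = len(layers.get(name, "").split())
        if count < low or (high is not None and count > high):
            problems[name] = count
    return problems


# Hypothetical draft built from filler words to show the check.
draft = {
    "direct_answer": "word " * 75,
    "core_explanation": "word " * 150,
    "deep_dive": "word " * 450,
}
print(check_layers(draft))
```

Here only the core explanation is flagged, because 150 words falls short of the 200-300 word target.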

🎯 Content Format Optimization for AI Extractability

Beyond structure, specific content formats improve AI extractability while maintaining depth:

FAQ Sections - Individual FAQ items structured with Question (H3) + Answer (paragraph) format. "FAQs are better off individually - check out Edward Sturm's SEO Playbook for this".

Comparison Tables - Presenting alternatives side-by-side in table format. AI platforms extract tabular data effectively while human readers appreciate quick comparison capability.

Step-by-Step Processes - Numbered lists with clear action items. Both AI extraction and human comprehension benefit from sequential structure.

Definition Lists - Term + definition pairs for technical concepts. Establishes terminology while remaining extractable.

Visual Hierarchy - Descriptive H3/H4 subheadings functioning as standalone statements. Readers skimming content and AI platforms parsing structure both benefit.

💡 How MaximusLabs Balances Extractability with Authority

MaximusLabs eliminates the simplification paradox through our layered content methodology. Every piece we create follows the inverted pyramid structure - direct answers for AI extraction combined with comprehensive depth demonstrating genuine expertise. Our subject-matter expert (SME) integration ensures depth comes from authentic experience rather than surface-level research, satisfying both E-E-A-T requirements and AI extractability needs. This approach prevents the commoditization trap where oversimplified content blends into generic AI slop, instead creating remarkable differentiation through unique insights while maintaining the structural clarity AI platforms require for confident citation.

Q9. Timeline and Resource Realities: Why AEO Takes 6-12 Months (And What That Means) [toc=Timeline and Resources]

The timeline shock hits every executive the same way: "Six to twelve months? SEO only takes three months!" This expectation mismatch kills more AEO initiatives than any technical challenge. The reality: Answer Engine Optimization requires significantly longer timelines than traditional SEO because trust signal accumulation - backlinks, entity associations, E-E-A-T validation - doesn't happen overnight, and LLM training cutoffs mean new content isn't immediately reflected in answers across platforms.

Resource intensity compounds the timeline challenge. Traditional SEO could function with one content writer and an agency managing technical optimization. AEO demands SME involvement in every content workflow, multi-platform optimization expertise, manual tracking across AI engines, and verification protocols preventing algorithmic penalties. The operational overhead increases 2-3x compared to SEO-only strategies.

⏰ Why AEO Takes Longer Than Traditional SEO

Trust signal accumulation operates on different timelines across platforms. Google might index new content within days, but achieving consistent ChatGPT citations requires weeks to months of validation through third-party sources. Perplexity prioritizes established authorities with citation histories. Claude emphasizes accuracy verification through cross-referencing. Each platform's algorithm learning curve varies unpredictably.

"The truth is that AEO is so new, companies are still figuring out best practices."

u/DigitalMarketing, r/DigitalMarketing Reddit Thread

Timeline Factors Extending AEO Implementation:

- Entity association building requires third-party validation (industry mentions, press coverage, review platforms) that you can't directly control

- Manual tracking cycles replace automated SEO dashboards, slowing optimization iteration speed

- Content quality standards demand SME involvement, adding 2-4 weeks per piece compared with AI-generated drafts

- Platform-specific testing across ChatGPT, Perplexity, Claude, Google AI Overviews multiplies effort 4-5x

- LLM training cutoffs create a lag between publication and appearance in AI answers that varies by platform

❌ Why Agencies Overpromise and Underdeliver

Traditional SEO agencies overpromise quick wins to close deals - "results in 30 days" remains a common pitch - leading to client churn when reality hits. This stems from three failures: applying SEO timelines (3-6 months) to fundamentally different AEO dynamics, underestimating resource requirements (which causes scope creep), and lacking internal buy-in frameworks, so initiatives die after 90 days when executives don't see immediate ROI.

"Some tools, while extremely expensive, were not really worth it or the ROI was not justifiable internally."

u/GrowthHacking, r/GrowthHacking Reddit Thread

The agency business model incentivizes this: longer timelines mean harder sales cycles. Promising 6-12 month timelines upfront requires education, stakeholder management, and confidence that competitors won't undercut with unrealistic promises. Most agencies choose the easier path: overpromise, deliver partial results, blame "algorithm changes," and move to the next client.

Treating AEO as a side project rather than a strategic initiative compounds failure. When marketing teams lack dedicated resources and executives view AEO as "experimental," the initiative gets deprioritized at the first sign of slow progress. Without protected budget, committed personnel, and leadership understanding the timeline reality, AEO becomes another failed pilot program.

💰 Resource Requirements by Company Stage

These requirements shock teams accustomed to SEO's lower barriers. SME involvement is mandatory - generic AI content fails E-E-A-T requirements immediately. Multi-platform optimization demands specialized expertise unavailable in traditional agencies. Manual tracking requires analyst time that automated SEO tools eliminated.

✅ MaximusLabs' Transparent Timeline Framework

MaximusLabs sets realistic 6-12 month expectations upfront through our Transparent Timeline Framework, eliminating surprise churn from misaligned expectations:

Phase 1 (Months 1-3): Foundation Building - Technical audit and optimization, E-E-A-T infrastructure implementation, entity association groundwork, initial content production with SME integration. Leading indicators: schema deployment, author profile completion, first external citations.

Phase 2 (Months 4-6): Citation Acquisition - Earned AEO strategy execution (Reddit outreach, G2 optimization, industry listicle placements), multi-platform content optimization, brand mention tracking infrastructure, post-conversion survey implementation. Leading indicators: first AI citations, brand mention frequency increases, third-party validation growth.

Phase 3 (Months 7-9): Optimization and Scale - Citation quality improvement, competitive displacement strategies, platform-specific refinement, content velocity increases. Lagging indicators emerge: citation position improvements, Share of Answers growth, pipeline influence signals.

Phase 4 (Months 10-12): Business Impact Validation - Revenue attribution modeling, ROI justification frameworks, stakeholder reporting, long-term strategy adjustment. Business metrics: attributable pipeline, citation-influenced deals, brand authority lift.

🎯 Internal Buy-In Playbook for Stakeholder Management

Securing executive support requires addressing each stakeholder's specific concerns:

CFO Buy-In (ROI Proof) - Present leading indicators (citation frequency, brand mention growth) as predictive metrics before lagging indicators (traffic, pipeline) materialize. Use the 6x conversion advantage of LLM-referred traffic to justify tolerance for lower volume. Compare AEO to brand marketing (measured by influence, not clicks).

CEO Priorities (Resource Allocation) - Position AEO as a defensive necessity (the "25% search decline by 2026" projection), not an offensive opportunity. Frame investment as protecting existing SEO gains from AI disruption. Show competitor citation analysis revealing visibility gaps.

Marketing Team Capacity (Bandwidth) - A phased investment approach keeps teams from being overwhelmed. Start with high-value BOFU content and expand gradually. Use agency partnerships for specialized work (technical implementation, multi-platform testing), freeing internal teams for strategy and SME coordination.

Sales Alignment (Attribution Education) - Train sales to ask "How did you hear about us?" with AI platform options. Share deal influence examples from post-conversion surveys. Connect citation visibility to improvements in sales-qualified lead quality.

"Stay heavily focused on traditional SEO - that's your foundation that should not change despite the AI evolution."

u/GrowthHacking, r/GrowthHacking Reddit Thread

This advice reflects the portfolio approach MaximusLabs advocates: AEO complements SEO rather than replacing it. The 6-12 month investment protects and extends existing SEO foundations as AI platforms grow.

Case evidence validates the timeline: companies rushing implementation without proper resources fail after 3-4 months when quick wins don't materialize. Companies with proper timeline and resource planning show 40% visibility improvement at month 9 - but only with sustained investment through the "trust accumulation valley" where leading indicators rise but business metrics lag. MaximusLabs' framework helps teams survive that valley by educating stakeholders on expected patterns, preventing premature abandonment of otherwise successful strategies. Learn more about our GEO approach.

Q10. Strategic Risk: Building on 'Rented Land' You Don't Control [toc=Platform Dependency Risks]

Platform dependency is the hidden strategic risk of Answer Engine Optimization: you're optimizing for platforms - ChatGPT, Perplexity, Claude - controlled by third parties who can change algorithms overnight without notice, transparency, or recourse. Unlike Google, which offers appeal mechanisms through Search Console and rolls out updates gradually, AI platforms operate as black boxes with no public guidelines, no feedback loops, and no accountability when changes destroy months of optimization work.

The "rented land" problem that plagued social media marketing in the 2010s is amplified in the AI era. When Facebook changed its algorithm in 2018, organic reach for business pages dropped 90% overnight - brands that had built entire marketing strategies on Facebook distribution lost their primary channel instantly. AEO carries the same risks with even less transparency.


⚠️ Real Platform Dependency Risks

Algorithm volatility manifests unpredictably. ChatGPT's model updates occur without announcement - one day your brand dominates citations, the next day you're invisible. Perplexity adjusts source diversity requirements based on query patterns. Claude emphasizes different trust signals across updates. Google AI Overviews blend traditional SEO with generative factors that shift as the product evolves.

"There aren't any confirmed AEO tactics, LLMs and Google haven't shared their exact algorithms."

u/AgenticSEO, r/AgenticSEO Reddit Thread

This opacity creates existential strategic risk: if OpenAI changes ChatGPT's citation logic tomorrow, months of optimization become worthless overnight. You have no recourse, no appeal process, no transparency into what changed or how to adapt. The platform controls your distribution completely.

Platform consolidation risk compounds dependency. Google has killed successful products before (Google Reader, Google+, dozens of others) despite user protests. What happens if Perplexity gets acquired and citation policies change? If Claude pivots to enterprise-only? If ChatGPT introduces paid placement for citations? You built on land you don't own, and the landlord holds all leverage.

Competitive disadvantage accelerates when you don't control your primary channel. Brands relying heavily on AEO face strategic vulnerability if platforms prioritize competitors, change citation mechanisms to favor certain content types, or introduce features that diminish brand visibility. Portfolio diversification becomes mandatory risk mitigation.

❌ Why Short-Term Agency Thinking Amplifies Risk

Traditional SEO agencies chase quick wins on new platforms without risk mitigation strategies, a short-term approach that leaves clients exposed. Over-investment in a single platform - "ChatGPT-only optimization" - creates a single point of failure. Treating AEO as a replacement for SEO rather than a complement abandons the one channel (owned website, traditional search) where you control distribution.

"Any tool claiming to monitor your AI visibility at this stage is, in my professional opinion, misleading at best and absolute snake oil at worst."

u/AskMarketing, r/AskMarketing Reddit Thread

Agencies lack portfolio approaches balancing owned, earned, and platform-dependent channels. Their business model incentivizes selling new services (AEO tracking, optimization) rather than strategic diversification that might reduce their billable scope. This misalignment between agency interests and client risk management creates long-term vulnerability.

The historical pattern repeats: agencies chase new platforms aggressively during hype cycles, then abandon them when platforms commoditize or regulations emerge. Remember when every agency offered Facebook marketing services in 2014? How many sustained that focus after the algorithm change? AEO faces the same trajectory without strategic risk management.

🌐 Balancing AEO with Owned Channel Development

Smart AEO investment requires simultaneous owned asset development:

Email List Building - Citations drive awareness; email captures intent. Every AI-exposed prospect should encounter email capture mechanisms on-site, converting zero-click visibility into an owned communication channel.

Community Platform Development - Reddit presence, LinkedIn thought leadership, and industry forum participation build audiences you can reach independent of AI platform algorithm changes.

Direct Traffic Cultivation - Brand building through AI citations should drive direct website visits over time. Track direct traffic lift as an AEO success metric alongside citation frequency.

Proprietary Data Assets - Original research, unique datasets, first-party insights that AI platforms reference but can't replicate without attribution - creating sustainable competitive moats.

Balancing AEO investment with owned channels creates portfolio resilience: no single point of failure, multiple traffic sources, and reduced platform dependency risk.
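
One way to make "no single point of failure" measurable is to compute each channel's share of pipeline and flag any channel that dominates. The sketch below is illustrative only: the 50% threshold, the Herfindahl-style concentration index, the function names, and the sample figures are all assumptions, not numbers from this article.

```python
from __future__ import annotations


def channel_shares(pipeline_by_channel: dict[str, float]) -> dict[str, float]:
    """Convert raw pipeline value per channel into fractional shares."""
    total = sum(pipeline_by_channel.values())
    if total <= 0:
        raise ValueError("total pipeline must be positive")
    return {name: value / total for name, value in pipeline_by_channel.items()}


def concentration_report(pipeline_by_channel: dict[str, float],
                         max_share: float = 0.5) -> dict:
    """Summarize how concentrated pipeline is across channels.

    max_share is an assumed threshold: any channel above it is treated
    as a potential single point of failure.
    """
    shares = channel_shares(pipeline_by_channel)
    # Sum of squared shares: 1.0 means one channel holds everything,
    # 1/n means pipeline is spread evenly across n channels.
    index = sum(share ** 2 for share in shares.values())
    over = sorted(name for name, share in shares.items() if share > max_share)
    return {"shares": shares, "concentration_index": index, "over_threshold": over}


if __name__ == "__main__":
    # Hypothetical quarterly pipeline (in $K) attributed to each channel.
    sample = {"aeo": 10, "seo": 60, "email": 20, "earned_media": 10}
    report = concentration_report(sample)
    for name, share in report["shares"].items():
        print(f"{name}: {share:.0%}")
    print("Concentration index:", round(report["concentration_index"], 2))
    print("Channels over threshold:", report["over_threshold"])
```

Tracking the index quarter over quarter shows whether diversification is actually happening, independent of which channel is currently largest.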

✅ MaximusLabs' Portfolio Approach and Future-Proofing

MaximusLabs solves platform dependency through portfolio diversification and universal trust signal focus:

No Single Point of Failure - Strategic mix of AEO (AI platform citations), SEO (traditional search), owned channels (email, community), and earned media (PR, third-party mentions). When one channel experiences disruption, others maintain visibility and pipeline flow.

Earned AEO Strategy - Rather than optimizing directly for LLMs (high platform risk), we prioritize citations from authority sites - Reddit discussions, G2 reviews, industry publications, expert roundups. When AI platforms cite these sources, you get visibility regardless of LLM algorithm changes. The citation comes from Reddit (which you influenced), not from direct LLM optimization.

Universal Trust Signals - Building foundations that work across all platforms: genuine E-E-A-T implementation, quality backlinks from authoritative domains, verified entity associations, topical authority in narrow niches. These transcend individual platform changes because they represent actual authority rather than algorithm manipulation.

Owned Asset Development - Integrating thought leadership content, proprietary research publication, community building, and email list growth into AEO strategy. Every citation should drive owned channel growth, not just platform-dependent visibility.

🔄 Historical Parallel: Blue Ocean Strategy

"If you do AEO you're just talking about the LLM. But if you're talking about search everywhere you're talking about YouTube, Reddit, all these other things."

- Expert Quote

This quote captures the strategic insight: ChatGPT isn't replacing Google in zero-sum competition - it's expanding the total addressable market for search visibility. The pie grows larger, creating blue ocean opportunities rather than red ocean competition. This expansion mitigates platform risk: when search diversifies across 5-7 platforms instead of a Google monopoly, no single platform controls your destiny.

The multibillion-dollar businesses in this space won't be tracking tools (easily commoditized) but execution partners who navigate platform risk intelligently through diversification, earned strategies, and owned asset development. MaximusLabs provides that strategic partnership, treating AEO as one component of a comprehensive search-everywhere presence rather than a risky over-dependence on platforms you don't control. Stop building castles on rented land. Start building distributed authority across the entire web with B2B SEO strategies.

Q11. Should You Even Invest in AEO? A Decision Framework for B2B SaaS [toc=AEO Investment Decision]

Not every company should invest in Answer Engine Optimization today. While AI search represents the future, resource constraints, business stage, industry characteristics, and buyer behavior patterns determine whether AEO makes strategic sense versus remaining focused on traditional SEO foundations. This framework helps B2B SaaS leaders make informed decisions based on specific circumstances rather than following hype.

✅ When AEO Makes Strategic Sense

Scenario 1: Your ICP Uses AI Search Heavily - If target buyers research using ChatGPT, Perplexity, or Google AI Overviews frequently, AEO becomes mandatory. Developer tools, technical B2B products, and knowledge-work solutions see high AI search adoption. Survey your buyers: "How do you research solutions?" If 30%+ mention AI platforms, prioritize AEO.

Scenario 2: Competitors Are Gaining AI Visibility - If competitive analysis reveals rivals dominating AI citations for key buying queries, waiting allows them to establish authority that becomes harder to displace over time. Early-stage companies benefit from earned AEO strategies (Reddit mentions, G2 reviews) that bypass traditional domain authority requirements.

Scenario 3: Long Sales Cycles Justify Influence Metrics - Enterprise B2B with 6-12 month sales cycles can absorb AEO's attribution ambiguity. When deals involve multiple touchpoints across research platforms, citation influence becomes measurable through post-conversion surveys and pipeline analysis.

Scenario 4: Strong Existing Content Foundation - Companies with comprehensive help centers, technical documentation, and thought leadership content can optimize existing assets for AI extractability faster than creating from scratch. The marginal investment adds AEO benefits to existing SEO investments.

Scenario 5: Differentiated Expertise in Narrow Niches - Topical authority in specialized subjects (e.g., "API rate limiting for SaaS applications") performs better than broad category optimization. Companies with genuine specialized expertise can achieve citations faster than generalist competitors.

⚠️ When to Wait on AEO Investment

Scenario A: Pre-Product-Market Fit Startups - Before validating core product-market fit, focus resources on customer development and product iteration. AEO's 6-12 month timeline doesn't align with pre-PMF urgency. Exception: if your ICP lives on Reddit/community platforms, earned AEO (community presence) doubles as customer development.

Scenario B: Limited Marketing Resources (< 2 FTEs) - AEO demands a minimum of 1-2 dedicated people for meaningful execution. Small teams should master traditional SEO foundations first - technical optimization, content creation, link building - before adding multi-platform complexity.

Scenario C: Weak Domain Authority (< 20 DA) - While earned AEO can bypass domain authority limitations, companies with weak technical SEO foundations should prioritize core optimization before advanced tactics. Fix crawlability, site speed, and content quality issues first.

Scenario D: Buyer Personas Show Low AI Adoption - Industries with traditional buyer behavior (construction, manufacturing, certain healthcare segments) show slower AI search adoption. Survey data revealing < 15% AI platform usage suggests delaying AEO investment until adoption increases.

Scenario E: No SME Access for Content - AEO requires authentic Subject Matter Expert involvement - generic AI content fails immediately. Without committed SME participation (founders, technical leads, customer-facing teams), proper E-E-A-T implementation becomes impossible.
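
The numeric thresholds in the scenarios above (30%+ and under 15% AI platform usage, fewer than 2 FTEs, domain authority below 20) can be collected into a rough readiness check. This is a sketch under stated assumptions: `CompanyProfile`, `wait_reasons`, and `recommend` are hypothetical names, and the rule that any single "wait" scenario outweighs the "prioritize" signal is a simplification that ignores the earned-AEO exceptions noted in Scenarios A and C.

```python
from dataclasses import dataclass


@dataclass
class CompanyProfile:
    ai_usage_share: float          # fraction of surveyed buyers mentioning AI platforms (0.0-1.0)
    marketing_ftes: float          # full-time marketing headcount
    domain_authority: int          # third-party DA score
    has_product_market_fit: bool
    has_sme_access: bool


def wait_reasons(profile: CompanyProfile) -> list:
    """List which 'wait' scenarios (A-E) apply, using the article's thresholds."""
    reasons = []
    if not profile.has_product_market_fit:
        reasons.append("Scenario A: pre-product-market fit")
    if profile.marketing_ftes < 2:
        reasons.append("Scenario B: fewer than 2 marketing FTEs")
    if profile.domain_authority < 20:
        reasons.append("Scenario C: domain authority below 20")
    if profile.ai_usage_share < 0.15:
        reasons.append("Scenario D: under 15% of buyers use AI platforms")
    if not profile.has_sme_access:
        reasons.append("Scenario E: no SME access for content")
    return reasons


def recommend(profile: CompanyProfile) -> tuple:
    """Return (recommendation, supporting reasons).

    Assumption: any 'wait' scenario takes precedence over the 30% signal.
    """
    reasons = wait_reasons(profile)
    if reasons:
        return "wait", reasons
    if profile.ai_usage_share >= 0.30:
        return "prioritize", ["Scenario 1: 30%+ of buyers mention AI platforms"]
    return "evaluate", []


if __name__ == "__main__":
    # Hypothetical mid-stage company profile.
    profile = CompanyProfile(ai_usage_share=0.35, marketing_ftes=3,
                             domain_authority=35,
                             has_product_market_fit=True, has_sme_access=True)
    decision, reasons = recommend(profile)
    print(decision)
    for reason in reasons:
        print(f"  - {reason}")
```

Returning the list of reasons alongside the recommendation keeps the output useful for stakeholder conversations, since it shows which constraint to fix first.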

📊 Decision Matrix: Evaluating AEO Readiness

💡 Phased Investment Approach for Mid-Stage Companies

Companies in the yellow zone benefit from phased AEO pilots:

Phase 1: Low-Cost Foundation (Months 1-3, $5K-10K investment) - Optimize existing content for AI extractability, implement basic schema markup, create FAQ sections, add author profiles with credentials, start manual citation monitoring.

Phase 2: Earned AEO Testing (Months 4-6, $10K-20K investment) - Focus on Reddit community presence, G2 review optimization, industry listicle outreach, help center expansion. Measure citation frequency growth and brand mention increases.