Q1. What Is AEO Keyword Research, and Why Does Traditional SEO Keyword Research Fail in AI Search? [toc=AEO vs Traditional SEO]

AEO (Answer Engine Optimization) keyword research is the strategic process of identifying the question clusters and conversational queries that users bring to AI answer engines such as ChatGPT, Perplexity, Google AI Overviews, Gemini, and Grok, so that a brand earns citations and zero-click mentions in the answers. Unlike traditional SEO's focus on short-tail keywords with measurable search volume, AEO keyword research targets the nuanced, long-form questions (often 15-25 words) that users pose to AI platforms when seeking detailed answers, product recommendations, or implementation guidance.

The fundamental shift: AI search moves optimization away from targeting individual keywords and toward covering clusters of conversational questions, where a single landing page can and should target hundreds of related queries within a topic.

"People ask AI tools completely different questions than they search on Google. Think conversational, like 'What's the best coffee shop near me' instead of 'coffee shop downtown.'" r/SEO, Reddit Thread

Why Traditional SEO Keyword Research Fails for AI Search

Traditional SEO agencies rely on a methodology built for a Google-dominated world: mine Google Keyword Planner for volume data, target exact-match keywords, optimize for rankable terms with quantifiable monthly searches. This playbook fails catastrophically for AEO due to a simple reality: there is no equivalent API or volume data set for ChatGPT, Perplexity, or other LLMs.

The core problem: Traditional SEO treats keywords as isolated search terms measurable through the Google Ads API. AI platforms operate differently. When a user asks ChatGPT a complex question like "Which project management software integrates with Slack for small teams under 50 people?", the LLM doesn't simply match keywords. It typically issues multiple grounding searches (concise queries the bot runs in the background) to retrieve information from authoritative sources.

Traditional agencies lack the framework to identify these grounding searches because:

- No volume truth set exists for AI platforms: you cannot see monthly search volume for ChatGPT queries

- Keyword length differs dramatically: AI queries average 15-25 words vs. Google's 3-5 word searches

- Intent mapping breaks down: exact-match keywords don't capture conversational, multi-part questions

- Measurement metrics diverge: success isn't clicks; it's citations and zero-click mentions

"Semrush feels like the most complete package for keyword research, audits, and tracking shifts in search including AI and GEO stuff." r/SEO, Reddit Thread

Yet even advanced tools like Semrush face limitations: they report Google data, not ChatGPT intent patterns.

The AI-Era Transformation: Grounding Searches Replace Keywords

AI answer engines function as universal intent decoders. A user might ask: "How do I optimize my SaaS pricing page to convert free trial users with less than 100 employees who need SOC 2 compliance?" The LLM might translate this into grounding searches such as:

- "SaaS pricing page optimization best practices"

- "Converting free trial users strategies"

- "SOC 2 compliance requirements for small businesses"

AEO keyword research must identify these internal grounding queries, the precise phrases AI platforms use to retrieve content, rather than surface-level user prompts. This requires understanding:

✅ Question fan-out patterns: How one customer query expands into multiple sub-questions

✅ Intent clustering: Grouping hundreds of related questions around a single topic

✅ BOFU prioritization: Targeting bottom-of-funnel, conversion-focused questions over informational queries

The strategic implication is profound: optimizing for "project management software" (a traditional SEO approach) won't capture "Which PM tools offer two-way Slack sync for distributed teams?" (an AEO opportunity).

MaximusLabs AI's Intent-to-Revenue Methodology

MaximusLabs AI addresses the AEO volume delusion (the absence of reliable question volume metrics) with a proprietary validation framework that removes dependence on unavailable data and prioritizes conversion potential:

1. Question Fan-Out Analysis: Mapping how single customer prompts expand into multiple grounding searches by analyzing actual sales call transcripts and support tickets to identify the sub-questions prospects ask before purchasing.

2. Competitor Paid Search Intelligence: Using competitor Google Ads campaigns as a proxy for commercial intent. If competitors keep paying for a keyword, it most likely converts. This helps validate bottom-funnel question clusters worth targeting.

3. Customer Conversation Mining: Extracting high-intent questions from internal data sources:

- Sales call recordings (what prospects ask during demos)

- Support ticket history (post-purchase implementation questions)

- Community forums and Reddit threads (unfiltered customer pain points)

4. Conversion Potential Scoring: Prioritizing questions based on:

- Query specificity (longer, detailed questions signal higher intent)

- Funnel stage (feature/integration questions convert better than "What is..." queries)

- Implementation focus (tactical "how-to" questions indicate near-purchase behavior)

This approach reframes keyword research from volume estimation (impossible for AI platforms) to intent validation (achievable through proxy data and customer intelligence).
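
The four Conversion Potential Scoring criteria can be approximated with a simple rule-based score. The Python sketch below is illustrative only: the 10-word specificity threshold, the feature/integration term stems, and the equal one-point weights are hypothetical assumptions, not MaximusLabs AI's actual scoring model.

```python
import re
from typing import List

# Hypothetical term stems suggesting feature, integration, comparison, or migration intent.
BOFU_STEMS = ("integrat", "support", "vs", "versus", "pricing", "migrat", "complian", "sync")
TOFU_PREFIXES = ("what is", "what are")
HOWTO_PREFIXES = ("how do i", "how to", "how can i")


def conversion_score(question: str) -> int:
    """Score 0-3: one point each for specificity, funnel stage, and implementation focus."""
    q = question.lower().strip()
    words = re.findall(r"[a-z0-9']+", q)
    score = 0
    # Query specificity: longer questions earn a point (10-word threshold is an assumption).
    if len(words) >= 10:
        score += 1
    # Funnel stage: feature/integration terms earn a point; "What is..." questions never do.
    if not q.startswith(TOFU_PREFIXES) and any(w.startswith(BOFU_STEMS) for w in words):
        score += 1
    # Implementation focus: tactical how-to phrasing earns a point.
    if q.startswith(HOWTO_PREFIXES):
        score += 1
    return score


def rank_questions(questions: List[str]) -> List[str]:
    """Sort questions from highest to lowest score; ties keep their input order."""
    return sorted(questions, key=conversion_score, reverse=True)
```

A score like this only orders a research backlog; it does not predict conversion rates, so the ordering is worth checking against sales-call and support data.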

Why the Strategic Pivot Matters: The LLM Traffic Quality Advantage

ChatGPT has grown to over 400 million weekly users, with 60-70% of its answers relying on web retrieval. AI-referred traffic quality differs dramatically from traditional search traffic.

Webflow reported a 6x conversion rate difference for LLM-referred traffic compared to Google search visitors. Why? AI users arrive highly qualified. They've already received a detailed answer that mentioned your brand as a recommended solution. By the time they reach your site, they're evaluating fit, not discovering options.

This creates the "agentic funnel" phenomenon: AI search engines become step one in the modern B2B buyer journey. Buyers who match your Ideal Customer Profile (ICP) ask AI to conduct competitive analysis, surface product comparisons, and gather feature details before ever visiting a website.

"Users coming from AI tools already trust the brand before they even land on the site."

r/SEO, Reddit Thread

Traditional SEO optimization chases clicks. AEO optimization drives pre-qualified revenue, targeting the questions that high-intent buyers ask AI platforms during their research phase.

For VP Marketing and Growth leaders facing declining Google traffic and rising customer acquisition costs, AEO keyword research represents a strategic shift: from optimizing for search volume metrics to capturing the conversational questions that drive actual conversions in an AI-first search landscape.

Q2. How Do AEO Keywords Differ From Traditional SEO Keywords? [toc=Key Differences]

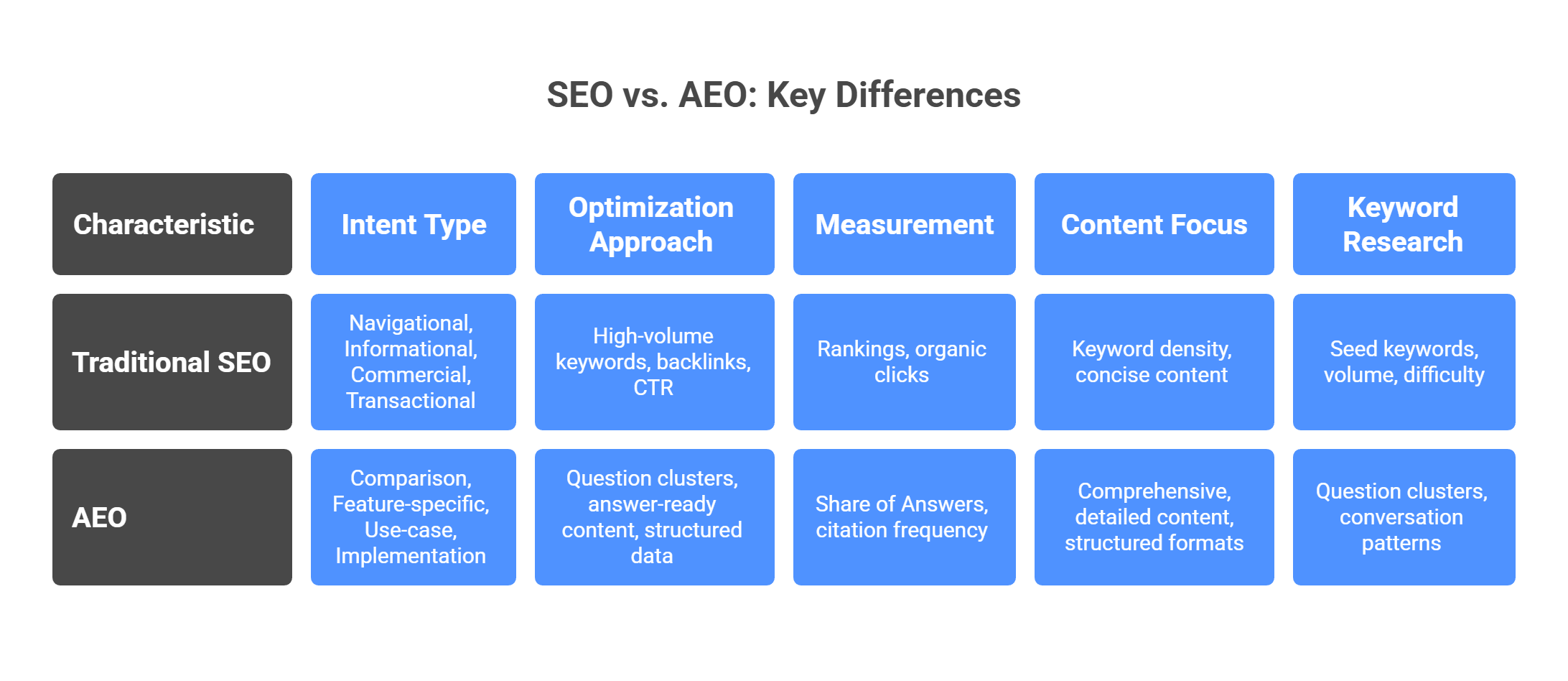

AEO keywords and traditional SEO keywords serve fundamentally different search ecosystems, requiring distinct research methodologies, content strategies, and measurement frameworks. Understanding these differences is critical for allocating resources effectively as search traffic shifts toward AI platforms.

Core Structural Differences

Intent Type Distinctions

Traditional SEO Keywords optimize for:

- Navigational queries: Brand searches ("Salesforce login")

- Informational queries: "What is content marketing?" (high volume, low conversion)

- Commercial queries: "Best CRM software" (competitive, requires multiple ranking factors)

- Transactional queries: "Buy Slack Business plan" (direct purchase intent)

AEO Keywords target:

- Comparison questions: "Which CRM integrates with HubSpot and Zapier for less than $50/month?"

- Feature-specific queries: "Does Notion support two-way Google Calendar sync?"

- Use-case questions: "How do remote teams use Asana for sprint planning?"

- Implementation guidance: "What's the best way to migrate from Trello to Monday.com without losing data?"

The strategic difference: SEO keywords optimize for rankable terms with measurable volume. AEO keywords target the specific questions AI platforms answer, often with no volume data but higher conversion intent.

"AnswerThePublic and AlsoAsked map the question clusters people actually type."

r/SEO, Reddit Thread

Optimization Approach Differences

Traditional SEO Tactics:

- Target high-volume keywords with manageable competition (KD scores 20-40)

- Build backlinks to improve domain authority

- Optimize title tags, meta descriptions, and URL structure

- Achieve top-10 SERP rankings for target keywords

- Focus on click-through rate (CTR) optimization

AEO Tactics:

- Target question clusters (hundreds of related queries per topic)

- Create comprehensive, answer-ready content with Q&A formatting

- Implement structured data (FAQPage, HowTo, and DefinedTerm schema)

- Optimize for citation-worthiness (authoritative, quotable answers)

- Prioritize zero-click mentions over traffic volume
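
As one illustration of the structured-data tactic above, the sketch below builds schema.org FAQPage markup as JSON-LD from question-and-answer pairs. `FAQPage`, `Question`, `acceptedAnswer`, and `Answer` are standard schema.org vocabulary; the helper name `faq_jsonld` and the placeholder Q&A text are hypothetical.

```python
import json
from typing import Dict, List, Tuple


def faq_jsonld(pairs: List[Tuple[str, str]]) -> Dict:
    """Build a schema.org FAQPage object from (question, answer) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }


if __name__ == "__main__":
    # Placeholder content: replace with answers drawn from your own documentation.
    data = faq_jsonld([
        ("Does [Product] support Gantt charts for Agile sprint planning?",
         "[Answer drafted from your product documentation.]"),
    ])
    # The output is meant to be embedded in the page inside a JSON-LD script tag.
    print('<script type="application/ld+json">')
    print(json.dumps(data, indent=2))
    print("</script>")
```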

The measurement divergence is stark: traditional SEO tracks rankings and organic clicks; AEO tracks Share of Answers (how often your brand appears across AI platforms) and citation frequency within AI-generated responses.
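
The section does not give a formula for Share of Answers; one reasonable reading is the fraction of sampled AI responses, per platform, that mention the brand. The sketch below assumes you have already collected responses (for example, by running a fixed prompt set on each platform) into records with hypothetical `platform` and `answer` fields. It uses a plain case-insensitive substring match, which misses misspellings and can count partial-word hits.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def share_of_answers(responses: Iterable[Mapping[str, str]], brand: str) -> Dict[str, float]:
    """Per platform, the fraction of collected answers that mention `brand` (case-insensitive)."""
    totals: Dict[str, int] = defaultdict(int)
    mentions: Dict[str, int] = defaultdict(int)
    needle = brand.lower()
    for response in responses:
        platform = response["platform"]
        totals[platform] += 1
        if needle in response["answer"].lower():
            mentions[platform] += 1
    return {platform: mentions[platform] / totals[platform] for platform in totals}
```

Tracking this per platform rather than as one blended number shows where visibility is weakest, since the same prompt can be answered very differently by ChatGPT and Perplexity.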

The Negative Correlation Problem

Research comparing ChatGPT citations with Google's top organic results revealed a startling finding: only 8-12% of URLs overlap between the two systems. For commercial queries, there was a negative correlation (r ≈ -0.98), meaning the more ChatGPT preferred a URL, the less likely Google was to rank it highly.

This creates a strategic dilemma: optimizing for traditional SEO rankings may actively hurt AEO performance for commercial queries. AI platforms prioritize:

✅ Comprehensive, detailed content (3,000+ words covering every product nuance)

✅ Structured answer formats (tables, bullet lists, explicit Q&A sections)

✅ Trust signals (author expertise, cited sources, E-E-A-T indicators)

Traditional SEO often prioritizes:

❌ Keyword density and exact-match targeting

❌ Concise content focused on single ranking terms

❌ Backlink quantity over content depth
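
The 8-12% overlap figure above depends on how URLs are matched. The sketch below is one way to measure overlap for your own prompts, assuming you have already collected the URLs an AI platform cites and Google's top organic URLs for the same query. The normalization rules (ignore scheme, a leading `www.`, trailing slashes, query strings, and fragments) are assumptions you may want to adjust.

```python
from typing import Iterable
from urllib.parse import urlsplit


def normalize_url(url: str) -> str:
    """Reduce a URL to host + path, ignoring scheme, 'www.', trailing slash, query, and fragment."""
    parts = urlsplit(url.strip())
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[len("www."):]
    return host + parts.path.rstrip("/")


def url_overlap(ai_cited: Iterable[str], google_top: Iterable[str]) -> float:
    """Fraction of distinct AI-cited URLs that also appear in Google's top results."""
    ai = {normalize_url(u) for u in ai_cited}
    google = {normalize_url(u) for u in google_top}
    if not ai:
        return 0.0
    return len(ai & google) / len(ai)
```

The denominator is a design choice: dividing by AI-cited URLs answers "how many of the AI's sources also rank on Google," while dividing by the union of both sets would give a Jaccard index instead.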

Practical Implications for Keyword Research

Traditional SEO Keyword Research Process:

- Identify seed keywords with search volume

- Analyze keyword difficulty (KD) and CPC

- Target 10-20 keywords per page

- Optimize content for rankability

- Track SERP positions weekly

AEO Keyword Research Process:

- Identify question clusters around topics (not individual keywords)

- Map customer conversation patterns (sales calls, support tickets)

- Target 100-300 related questions per landing page

- Optimize content for answer extraction and citation-worthiness

- Track Share of Answers across multiple AI platforms

"Most people are still doing manual checks because the tooling is pretty limited right now."

r/SEO, Reddit Thread

The keyword research methodology must shift from volume-based selection (which keywords get 1,000+ monthly searches?) to intent-based validation (which questions do high-intent buyers ask AI platforms?).

How MaximusLabs AI Navigates This Transition

MaximusLabs AI recognizes that traditional SEO and AEO require strategically separated approaches. Rather than treating AEO as "SEO plus schema markup," we architect content specifically for AI retrieval systems:

- Dual-optimization frameworks that prevent negative correlation effects

- Question-first content structures formatted as actual user queries (H2/H3 headings)

- Answer-ready formatting with 40-60 word quotable blocks optimized for LLM extraction

- Intent validation methodologies that prioritize conversion potential over vanity volume metrics
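
The 40-60 word guideline for quotable answer blocks is easy to check automatically. A minimal sketch, assuming answer blocks are separated by blank lines and that whitespace-delimited tokens are an adequate word count; the default bounds mirror the 40-60 range above.

```python
from typing import List, Tuple


def split_blocks(text: str) -> List[str]:
    """Split page copy into blocks on blank lines, dropping empty blocks."""
    return [block.strip() for block in text.split("\n\n") if block.strip()]


def out_of_range_blocks(blocks: List[str], low: int = 40, high: int = 60) -> List[Tuple[int, int]]:
    """Return (block index, word count) for every block outside the low-high word range."""
    flagged = []
    for index, block in enumerate(blocks):
        count = len(block.split())
        if not low <= count <= high:
            flagged.append((index, count))
    return flagged
```

Running this over draft copy flags answers that are too thin to quote or too long to extract cleanly, leaving the rewrite itself to an editor.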

The strategic insight: AEO keywords aren't "longer SEO keywords." They represent a fundamentally different search paradigm requiring purpose-built research frameworks, content architectures, and measurement systems. Organizations still applying SEO keyword tactics to AI platforms will consistently underperform competitors who recognize this structural difference.

Q3. What Types of Questions Should You Target for Maximum AEO Impact? [toc=Question Type Strategy]

The most critical strategic decision in AEO keyword research isn't which tools to use. It's which question types to target based on your resources, competitive positioning, and conversion goals. Not all questions deliver equal ROI, and pursuing the wrong question categories consumes resources while generating minimal revenue impact.

The strategic framework that separates high-performing AEO programs from failed experiments: Owned vs. Earned AEO bifurcation.

The Strategic Question Typology

Head Questions (High-volume, competitive, generic):

- "Best project management software"

- "Top CRM tools for small business"

- "What is marketing automation?"

Mid-Tail Questions (Moderate specificity, use-case focused):

- "Which project management software integrates with Slack?"

- "CRM tools with built-in email marketing for under $100/month"

- "How to set up marketing automation workflows for e-commerce"

Long-Tail Questions (High specificity, feature/implementation focused):

- "Which project management software offers two-way Slack sync for distributed teams under 50 people?"

- "Does HubSpot CRM support custom pipeline stages for B2B SaaS sales cycles?"

- "How do I migrate Mailchimp automation workflows to ActiveCampaign without losing subscriber data?"

The conversion rate difference between these question types is dramatic, yet traditional agencies consistently recommend the opposite strategy.

The TOFU Content Trap: Why Traditional Agencies Get This Wrong

Traditional SEO agencies default to recommending head questions and Top-of-Funnel (TOFU) content because it's familiar territory: "What is..." articles, "Best..." listicles, and broad comparison guides that target high-volume keywords.

The flawed logic: More traffic equals more conversions. Target "best CRM software" (10,000+ monthly searches on Google) rather than "Does Pipedrive support Zapier webhooks for custom integrations?" (unmeasurable AI platform volume).

Why this strategy fails for AEO:

Problem 1: AI Commoditizes Generic Answers

ChatGPT, Perplexity, and Google AI Overviews answer generic "What is..." questions better than most brands can. AI synthesizes information from dozens of sources to create comprehensive answers. Your single article cannot compete with this collective intelligence.

Problem 2: TOFU Questions Drive Low-Intent Traffic

Users asking "What is content marketing?" are in early research phases, months away from purchase decisions. Even if you capture this traffic, conversion rates remain sub-1% because visitors aren't evaluating solutions yet.

Problem 3: Head Questions Require Earned AEO (Not Owned Content)

Winning citations for "best project management software" requires being mentioned by authoritative third-party sources: review sites (G2, Capterra), affiliate content, industry media, community platforms (Reddit). Your owned content claiming "we're the best" won't get cited; third-party validation will.

"Create content similar to what is being cited, basically, create my own listicle articles around my target prompts, with my brand mentioned prominently within the article." r/SEO, Reddit Thread

Traditional agencies invest client resources creating TOFU content that generates impressive pageview metrics but minimal conversions, a vanity metric trap that wastes budgets.

The AI-Era Strategic Bifurcation: Owned vs. Earned AEO

The question type dictates the strategic approach required:

Earned AEO Strategy (for head questions):

- Definition: Getting cited/mentioned by authoritative third-party sources

- Target questions: "Best [category]," "Top [solution] for [broad use case]"

- Required tactics:

  - Citation engineering (identifying top-cited URLs and getting featured)

  - Affiliate relationship development

  - Media coverage and industry awards

  - User-generated content (UGC) cultivation on review platforms

  - Community engagement (Reddit, Quora, industry forums)

- Timeline: 6-12 months to establish third-party mention ecosystem

- Investment: Higher (requires PR, partnerships, review generation programs)

Owned AEO Strategy (for mid/long-tail questions):

- Definition: Creating comprehensive, feature-rich content on owned URLs that AI platforms cite directly

- Target questions: Feature comparisons, integration details, use-case guidance, implementation steps

- Required tactics:

  - Help Center optimization (detailed product documentation)

  - Feature comparison pages (structured tables)

  - Use-case guides (industry-specific implementations)

  - Integration documentation (step-by-step technical guides)

- Timeline: 2-4 months to implement and see initial citations

- Investment: Lower (leverages existing product knowledge and internal resources)

The strategic insight: Head questions are won through reputation engineering across the web; mid/long-tail questions are won through comprehensive owned content that answers every product nuance.

MaximusLabs AI's BOFU Commitment: Targeting Questions That Convert

MaximusLabs AI takes a contrarian position: deliberately exclude TOFU content and aggressively prioritize Bottom-of-Funnel (BOFU) and Middle-of-Funnel (MOFU) questions that target features, integrations, use cases, and comparison queries.

Why this strategy targets higher-converting traffic:

1. Feature-Focused Questions Signal Purchase Intent

"Does [product] support [specific feature]?" = user evaluating final options

"What is [category]?" = user beginning research (months from purchase)

2. Integration Questions Indicate Active Evaluation

"Which [tools] integrate with [existing stack]?" = user checking compatibility

"Best [category] tools" = user browsing options casually

3. Use-Case Questions Reveal Implementation Planning

"How do [specific personas] use [product] for [specific outcome]?" = user envisioning deployment

"[Category] benefits" = user exploring concepts

4. Comparison Questions Represent Final Decision Stage

"[Product A] vs [Product B] for [specific use case]" = user narrowing to 2-3 options

"Top [category] software" = user compiling initial list

"AI-driven search is growing rapidly, and being visible in these tools can significantly increase traffic and leads. Users coming from AI tools already trust the brand before they even land on the site." r/SEO, Reddit Thread

The BOFU Commitment philosophy: If a question doesn't indicate near-purchase intent, it's not worth targeting in an AEO program focused on revenue generation rather than vanity traffic metrics.

Implementation Insight: Topic Clusters vs. Individual Keywords

Traditional SEO targets 10-20 keywords per page. AEO requires targeting hundreds of related questions within a topic cluster on a single landing page.

Example: Project Management Software Product Page

Traditional SEO Approach (10 keywords):

- "project management software"

- "project management tool"

- "best project management app"

- "project management platform pricing"

AEO Approach (200+ question cluster):

- "Which project management software integrates with Slack for real-time notifications?"

- "Does [Product] support Gantt charts for Agile sprint planning?"

- "How do I set up custom workflows in [Product] for marketing teams?"

- "Can [Product] track time automatically across multiple projects?"

- "What's the difference between [Product] Business and Enterprise plans for SOC 2 compliance?"

- [195+ additional feature, integration, use-case, and implementation questions]

The content implication: Money pages must become longer, more thorough, more detailed to answer every product nuance that bottom-funnel buyers ask AI platforms during evaluation.

High-ROI AEO Channels for Owned Strategy:

✅ Help Centers (subdirectory, not subdomain): Naturally contain detailed answers to feature/implementation questions

✅ Product Documentation: Technical integration guides and API references

✅ Use-Case Pages: Industry-specific implementation examples

✅ Comparison Pages: Feature-by-feature tables vs. named competitors

✅ Pricing/Plan Pages: Detailed capability breakdowns per tier

These channels convert AI-referred traffic at dramatically higher rates than blog content targeting TOFU questions, yet traditional agencies consistently under-invest in them because these pages don't produce the search-volume metrics that blog posts do.

MaximusLabs AI's strategic discipline: avoid the TOFU trap, focus resources on BOFU question clusters, and architect content for the high-intent questions that convert AI-referred traffic into revenue.

Q4. Where Do You Find AEO Keyword and Question Opportunities? [toc=Discovery Sources]

Identifying high-impact AEO questions requires fundamentally different research channels than traditional SEO keyword discovery. While Google Keyword Planner and Semrush reveal search volume data, AEO keyword research demands mining conversational intent patterns from sources where customers articulate problems in their own language, unfiltered by search engine query compression.

The strategic advantage: most competitors still rely exclusively on traditional keyword tools, leaving valuable question clusters undiscovered.

Primary AEO Question Discovery Sources

1. Customer Conversation Mining (Highest Intent)

Your existing customer interactions contain the most valuable AEO question clusters. These are the actual questions prospects ask before purchasing and customers ask during implementation.

Internal Data Sources:

- Sales Call Transcripts: Record and analyze discovery calls, demos, and objection-handling conversations. Questions prospects ask during sales cycles reveal bottom-funnel evaluation criteria AI platforms should answer.

- Support Ticket History: Customer support tickets expose post-purchase implementation questions, feature clarifications, and troubleshooting needs, the long-tail questions that convert AI-referred traffic.

- Customer Success Call Recordings: Onboarding and quarterly business review (QBR) calls reveal use-case patterns and feature adoption questions.

- Chat Logs: Live chat and Intercom conversations capture real-time questions in customers' exact phrasing.

Extraction Method: Use conversation intelligence tools (Gong, Chorus.ai) or manually review recent calls, identifying recurring question patterns. Prioritize questions asked by ICPs (Ideal Customer Profiles) in your target segments.
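
Part of the manual review step can be automated once transcripts are exported as plain text. The sketch below pulls out sentences ending in a question mark, strips a leading speaker label such as `Prospect:`, normalizes case and punctuation, and counts how many transcripts each question appears in. The transcript format and the exact-match grouping are assumptions; paraphrased questions will not be merged.

```python
import re
from collections import Counter
from typing import List, Tuple

QUESTION_RE = re.compile(r"[^.?!\n]*\?")     # a run of text ending in '?'
SPEAKER_RE = re.compile(r"^[A-Za-z ]+:\s*")  # a leading "Speaker:" label


def extract_questions(transcript: str) -> List[str]:
    """Return the question sentences in a transcript, without speaker labels."""
    return [SPEAKER_RE.sub("", m.group(0).strip()) for m in QUESTION_RE.finditer(transcript)]


def normalize_question(question: str) -> str:
    """Lowercase and keep only letters and digits so trivial variants match."""
    return " ".join(re.findall(r"[a-z0-9]+", question.lower()))


def recurring_questions(transcripts: List[str], min_count: int = 2) -> List[Tuple[str, int]]:
    """Count each question once per transcript; keep those seen in >= min_count transcripts."""
    counts: Counter = Counter()
    for transcript in transcripts:
        keys = {normalize_question(q) for q in extract_questions(transcript)}
        counts.update(key for key in keys if key)
    return [(q, n) for q, n in counts.most_common() if n >= min_count]
```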

Example Questions Discovered:

- "How do I set up [specific workflow] without needing developer resources?"

- "Can [product] handle [edge case scenario] for [specific industry]?"

- "What's the fastest way to migrate from [competitor] to [your product]?"

"Write content that is meaningful, by that I mean use real examples, results, metrics."

r/SEO, Reddit Thread

2. Community Platform Question Mining

Reddit, Quora, industry-specific forums, and Slack communities reveal unfiltered customer pain points and solution evaluation questions.

Key Communities by Vertical:

- SaaS/B2B: r/SaaS, r/Entrepreneur, r/startups, Product Hunt discussions

- Marketing: r/DigitalMarketing, r/SEO, r/PPC, GrowthHackers

- Development Tools: r/webdev, r/programming, Stack Overflow, GitHub Discussions

- E-commerce: r/shopify, r/ecommerce, Shopify Community Forums

Research Approach:

- Search "[your product category] Reddit" or "[competitor name] vs [category] Reddit"

- Identify recurring complaint themes and feature request patterns

- Extract question phrasings (exact language users employ)

- Prioritize questions with multiple upvotes/comments (indicates widespread interest)

Example Discoveries:

- "All the AEO guides suck" (27 upvotes) reveals frustration with existing content quality, indicating an opportunity for a definitive guide

- "Most people are still doing manual checks because the tooling is pretty limited right now" reveals tracking methodology questions

"I also use AnswerThePublic, AlsoAsked for generating topic clusters on most frequently asked questions."

r/SEO, Reddit Thread

3. SERP Feature Mining (Question Expansion)

Google's SERP features, particularly People Also Ask (PAA) boxes and Related Searches, reveal question clusters around seed topics, even though the underlying data reflects Google searchers rather than AI platform users.

Tools for PAA Extraction:

- AlsoAsked ($15-49/month): Visualizes PAA question trees, showing how questions branch into sub-questions

- AnswerThePublic (Free tier available): Generates question clusters from Google autocomplete data organized by interrogative (how, what, why, when, where, who, which)

- Keywords Everywhere (Chrome extension, ~$10): Displays PAA boxes and related searches directly in search results

Methodology:

- Enter seed topic (e.g., "project management software")

- Extract all PAA questions (typically 4-8 initial questions)

- Click each PAA to expand additional questions (creates question chains)

- Map question patterns (comparisons, features, pricing, implementation)

- Translate Google-optimized phrasing into conversational AI query format

Translation Example:

- Google query phrasing: "project management software with gantt charts"

- AI Query: "Which project management tools offer built-in Gantt chart functionality for Agile sprint planning?"
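
Clicking through PAA boxes, as in the methodology above, is effectively a breadth-first walk over a question tree. The sketch below models that walk with a depth limit. `fetch_paa` is a hypothetical callback standing in for however you collect PAA questions (manual copy-paste or a tool export); no real API is assumed, and the demo uses a hard-coded dictionary.

```python
from collections import deque
from typing import Callable, Dict, List


def expand_paa(seed: str, fetch_paa: Callable[[str], List[str]], max_depth: int = 2) -> Dict[str, int]:
    """Breadth-first expansion of a PAA question chain; returns {question: depth}."""
    depth_of = {seed: 0}
    queue = deque([seed])
    while queue:
        current = queue.popleft()
        if depth_of[current] >= max_depth:
            continue  # do not expand beyond the depth limit
        for question in fetch_paa(current):
            if question not in depth_of:  # record each question at its shallowest depth
                depth_of[question] = depth_of[current] + 1
                queue.append(question)
    return depth_of


if __name__ == "__main__":
    # Hypothetical PAA data standing in for real SERP observations.
    tree = {
        "project management software": ["What is the best project management software?", "Is Asana free?"],
        "What is the best project management software?": ["Which project management tools integrate with Slack?"],
        "Which project management tools integrate with Slack?": ["Does Trello integrate with Slack?"],
    }
    for question, depth in expand_paa("project management software", lambda q: tree.get(q, [])).items():
        print(depth, question)
```

The depth limit keeps the crawl focused; in practice, questions three or more levels deep often drift away from the seed topic.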

4. Competitor Content Gap Analysis

Analyze which questions competitors are and aren't answering comprehensively on their owned properties.

Analysis Process:

- Identify top 3-5 direct competitors

- Audit their Help Centers, product documentation, and blog content

- Map covered topics vs. missing topics

- Identify superficial answers (opportunities to create definitive resources)

- Extract specific questions they answer poorly

Competitive Intelligence Questions:

- Which integration questions do competitors' help centers not address?

- What implementation steps do competitors' documentation skip?

- Which comparison angles do competitors avoid discussing?

5. AI Platform Direct Discovery

Use AI platforms themselves to discover question patterns and follow-up queries.

Research Method:

- Ask ChatGPT, Perplexity, or Claude seed questions about your product category

- Note which follow-up questions the AI generates

- Identify which sources get cited most frequently

- Analyze the question phrasing patterns AI platforms use in their answers and follow-ups

Example Prompt: "I'm evaluating project management software for a remote team of 25 people. What should I consider?"

AI-Generated Follow-ups (these become target questions):

- "Does the tool support asynchronous communication for distributed teams?"

- "What integrations are essential for remote team collaboration?"

- "How do teams track time across different time zones?"
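
For the step of identifying which sources get cited most frequently, a small tally by domain is often enough. The sketch assumes you have copied the citation URLs from each AI answer into a flat list; grouping by host with a leading `www.` removed is an assumption, so subdomains such as `blog.example.com` are counted separately.

```python
from collections import Counter
from typing import Iterable, List, Tuple
from urllib.parse import urlsplit


def top_cited_domains(citation_urls: Iterable[str], n: int = 5) -> List[Tuple[str, int]]:
    """Tally citation URLs by host (leading 'www.' removed) and return the n most frequent."""
    counts: Counter = Counter()
    for url in citation_urls:
        host = urlsplit(url.strip()).netloc.lower()
        if host.startswith("www."):
            host = host[len("www."):]
        if host:  # skip strings that did not parse as absolute URLs
            counts[host] += 1
    return counts.most_common(n)
```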

6. Search Console Query Analysis (Hybrid SEO-AEO)

Google Search Console reveals actual queries driving traffic to your site, many of which indicate question-based intent patterns.

GSC Mining Approach:

- Export queries with impressions >100, clicks >0

- Filter for question keywords (how, what, which, why, when, where, can, does, is)

- Identify high-impression, low-CTR queries (opportunity to improve answer quality)

- Group related queries into topic clusters

Signal: If Google search users ask these questions, AI platform users likely ask more detailed versions.
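
The GSC mining steps above can be scripted against a CSV export. In this sketch, the column names `query`, `clicks`, and `impressions` are assumptions (rename your export's headers to match, and make sure counts are plain integers), "question keyword" is interpreted as the query's first word, and the 2% low-CTR cutoff is a hypothetical threshold, not a benchmark.

```python
import csv
import io
from typing import Dict, List

# Question keywords from the filtering step above, matched against a query's first word.
QUESTION_WORDS = {"how", "what", "which", "why", "when", "where", "can", "does", "is"}


def load_rows(csv_text: str) -> List[Dict[str, str]]:
    """Parse CSV text with a header row into a list of dicts."""
    return list(csv.DictReader(io.StringIO(csv_text)))


def question_opportunities(rows: List[Dict[str, str]], min_impressions: int = 100,
                           max_ctr: float = 0.02) -> List[Dict]:
    """Keep question queries with impressions > min_impressions and clicks > 0, flagging low CTR."""
    results = []
    for row in rows:
        query = row["query"].strip().lower()
        impressions = int(row["impressions"])
        clicks = int(row["clicks"])
        tokens = query.split()
        if impressions <= min_impressions or clicks <= 0 or not tokens:
            continue
        if tokens[0] not in QUESTION_WORDS:
            continue
        ctr = clicks / impressions
        results.append({"query": query, "impressions": impressions, "clicks": clicks,
                        "ctr": ctr, "low_ctr": ctr < max_ctr})
    # Highest-impression questions first: the largest answer-quality opportunities.
    return sorted(results, key=lambda r: r["impressions"], reverse=True)
```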

"Google Search Console is surprisingly underrated."

r/SEO, Reddit Thread

7. Competitor Paid Search Intelligence

Analyze competitor Google Ads campaigns to identify high-commercial-intent question patterns they're bidding on.

Tools:

- Semrush: PPC Keyword tool reveals competitors' paid keywords

- SpyFu: Historical ad copy and landing page analysis

- Ahrefs: PPC Keywords report shows competitors' paid search strategy

Strategic Insight: If competitors keep paying for a keyword or question, it most likely converts. Use this as a proxy for commercial intent validation when AI platform volume data is unavailable.

Question Inference: Translate paid keywords into conversational questions. If a competitor bids on "project management Slack integration," the AEO question is "Which project management software has the most reliable Slack integration?"

Practical Workflow: Combining Sources for Maximum Coverage

Step 1: Start with customer conversation mining (sales calls, support tickets): 30-50 high-intent questions

Step 2: Expand via PAA/AlsoAsked around discovered themes: 100-150 related questions

Step 3: Cross-reference competitor gaps: identify 20-30 unanswered questions

Step 4: Validate commercial intent via competitor paid search: prioritize questions competitors bid on

Step 5: Organize into topic clusters: group 100-300 questions per landing page topic
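
Step 5 needs some way to group near-duplicate and related questions. The sketch below uses deliberately simple techniques swapped in for illustration: exact-match deduplication after normalization, then greedy clustering by Jaccard similarity of content words against each cluster's first question. The stopword list and the 0.3 threshold are assumptions; embedding-based similarity may group paraphrases better.

```python
import re
from typing import List, Set, Tuple

# Hypothetical stopword list: question words and connectors that carry little topical signal.
STOPWORDS = {"a", "an", "the", "to", "for", "with", "of", "in", "on", "and", "or", "is",
             "do", "does", "i", "how", "what", "which", "can", "my", "without"}


def content_terms(question: str) -> Set[str]:
    """Lowercased word set with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", question.lower()) if w not in STOPWORDS}


def jaccard(a: Set[str], b: Set[str]) -> float:
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def cluster_questions(questions: List[str], threshold: float = 0.3) -> List[List[str]]:
    """Deduplicate, then add each question to the first cluster whose seed is similar enough."""
    seen: Set[str] = set()
    clusters: List[Tuple[Set[str], List[str]]] = []  # (seed term set, member questions)
    for question in questions:
        key = " ".join(re.findall(r"[a-z0-9]+", question.lower()))
        if key in seen:
            continue  # exact duplicate after normalization
        seen.add(key)
        terms = content_terms(question)
        for seed, members in clusters:
            if jaccard(terms, seed) >= threshold:
                members.append(question)
                break
        else:
            clusters.append((terms, [question]))
    return [members for _, members in clusters]
```

Because clustering is greedy and order-dependent, feeding the highest-intent questions first (for example, those from customer conversation mining) makes them the cluster seeds.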

Industry-Specific Mining Techniques:

SaaS Products: Focus on integration questions (Zapier, Slack, Google Workspace), API capabilities, security/compliance (SOC 2, GDPR), and implementation timelines.

E-commerce: Target product comparison questions, sizing/fit guidance, shipping/return policies, and product compatibility questions.

B2B Services: Prioritize implementation methodology questions, ROI timeline inquiries, team size requirements, and case study-specific questions.

The strategic differentiation: while traditional agencies mine Google Keyword Planner for volume data, MaximusLabs AI mines actual customer conversations and competitive intelligence to identify the specific questions high-intent buyers ask AI platforms, questions that drive conversions rather than vanity traffic.

This customer-first discovery methodology uncovers question clusters competitors miss because they're not visible in traditional keyword tools, creating sustainable competitive advantages in AI platform visibility.

Q5. What Are the Best AEO Keyword Research Tools in 2025? (Free, Budget & Enterprise) [toc=Best AEO Tools]

The AEO keyword research tool landscape differs significantly from traditional SEO platforms. While tools like Google Keyword Planner excel at providing search volume data for Google queries, AEO requires identifying question clusters, conversational patterns, and AI platform retrieval signals, capabilities most traditional tools weren't designed to deliver.

The strategic challenge: No single tool provides comprehensive AEO keyword data. Effective research requires combining multiple platforms across budget tiers to build a complete question discovery workflow.

Free AEO Keyword Research Tools

1. Google People Also Ask (PAA) Extraction

What It Does: Reveals question clusters Google associates with seed topics, displayed directly in SERP results.

Use Case: Seed-level question discovery; identifying initial question branches around topics.

Methodology:

- Enter seed keyword in Google search

- Extract 4-8 initial PAA questions

- Click each PAA to expand secondary questions (creates question chains)

- Manually document or use browser extensions to capture questions

Limitations: Google-centric (doesn't reflect ChatGPT/Perplexity query patterns); manual extraction is time-intensive.

Best For: Initial brainstorming and validating question clusters exist around topics.

"AnswerThePublic and AlsoAsked map the question clusters people actually type."

r/SEO, Reddit Thread

2. AnswerThePublic (Free Tier)

What It Does: Generates question clusters organized by interrogative (how, what, why, when, where, who, which) based on Google autocomplete data.

Pricing: Free tier (2 searches/day); Pro starts at $99/month.

Use Case: Visualizing question patterns; discovering comparison and preposition-based queries.

Strengths:

- Question visualization (circular graph showing all interrogative patterns)

- Exports to CSV for analysis

- Identifies comparison queries ("X vs Y") and preposition phrases ("X for Y")

Limitations: Limited to Google autocomplete data; the free tier heavily restricts the number of searches.

Best For: Budget-conscious teams needing visual question mapping for initial discovery.

Mid-Tier Tools ($15-300/Month)

3. AlsoAsked ($15-49/month)

What It Does: Maps PAA question trees, showing how questions branch into sub-questions across multiple levels.

Use Case: Question expansion; understanding how single topics spawn hundreds of related queries.

Key Features:

- Multi-level PAA expansion (3-4 levels deep)

- Question relationship visualization

- Export to spreadsheet for clustering

- Tracks question patterns over time

Strengths: Reveals question fan-out patterns traditional keyword tools miss; affordable entry point for AEO research.

Limitations: Still Google-based; doesn't capture ChatGPT-specific query variations.

Best For: Teams building comprehensive question clusters for topic pages.

"I also use Answerthepublic, AlsoAsked for generating topic clusters on most frequently asked questions."

r/SEO, Reddit Thread

4. Semrush (Starting $139.95/month)

What It Does: Comprehensive SEO platform with emerging AEO/GEO visibility tracking features.

Use Case: Hybrid SEO/AEO research; tracking both traditional rankings and AI platform mentions.

AEO-Relevant Features:

- Keyword Magic Tool (23B+ keyword database with question filters)

- AI and GEO visibility tracking (beta features)

- Content suggestions based on competitive analysis

- Topic Research tool for question clustering

Strengths: Single platform combining traditional SEO metrics with emerging AI tracking; robust competitive intelligence.

Limitations: AEO features still developing; primarily Google-focused data.

"Semrush feels like the most complete package for keyword research, audits, and tracking shifts in search including AI and GEO stuff." r/SEO, Reddit Thread

Best For: Teams requiring comprehensive SEO + AEO data in a unified platform; enterprises with existing Semrush licenses.

5. Ahrefs (Starting $129/month)

What It Does: Backlink and keyword research platform with question keyword filtering.

Use Case: Competitive question analysis; identifying which questions drive backlinks to competitor content.

AEO-Relevant Features:

- Questions report (filters keywords by interrogative structure)

- Content Explorer (finds top-performing content answering specific questions)

- Keyword difficulty scores for question-based queries

Strengths: Superior backlink data reveals which questions attract authoritative citations (important for Earned AEO).

Limitations: No native AI platform tracking; Google-centric metrics.

Best For: Teams prioritizing competitive analysis and link-building opportunities for question content.

Specialized AEO/AI Tracking Tools

6. Perplexity Keyword Tool (Free)

What It Does: Keyword research tool specifically designed for understanding Perplexity AI query patterns.

Use Case: Platform-specific optimization for Perplexity citations.

Features: Query suggestion based on Perplexity's search data; identifies trending questions within the platform.

Best For: Brands targeting Perplexity visibility specifically; free entry point for AI-native keyword research.

7. AI Traffic Analytics & Citation Trackers

What They Do: Monitor brand mentions and citations across ChatGPT, Perplexity, Google AI Overviews, and other platforms.

Emerging Tools: Multiple vendors are entering the market with synthetic mention tracking and sentiment analysis.

Use Case: Measuring Share of Answers; tracking which content gets cited by AI platforms.

Current State: Early-stage tooling; most are "basic synthetic mention tracking or sentiment analysis" according to practitioners.

"Most people are still doing manual checks because the tooling is pretty limited right now."

r/SEO, Reddit Thread

Best For: Enterprises requiring systematic AI visibility tracking; expect manual validation of automated data.

Content Optimization & Schema Tools

8. Frase & SurferSEO ($45-99/month)

What They Do: Content optimization platforms that structure content for "snippet-ready" formatting.

Use Case: Optimizing existing content for answer extraction; ensuring AI-friendly formatting.

Features:

- Answer-ready content templates

- Competitive content analysis

- Question extraction from top-ranking content

"SurferSEO or Frase for structuring content so its scannable and snippet-ready."

r/SEO, Reddit Thread

9. Merkle's Schema Markup Generator (Free)

What It Does: Creates structured data (FAQ schema, HowTo schema, Article schema) for content.

Use Case: Technical implementation of answer-ready formatting; helping AI understand content structure.

"For schema and structured data, Merkles schema markup generator is easy to use."

r/SEO, Reddit Thread
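For reference, FAQ schema is typically emitted as a schema.org FAQPage object in a JSON-LD script tag. The sketch below builds that structure from question/answer pairs; it is a hand-rolled illustration of the kind of markup such generators produce, not Merkle's implementation, and the sample content is hypothetical:

```python
import json


def faq_jsonld(pairs):
    """Build a schema.org FAQPage JSON-LD string from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2)


print(faq_jsonld([("Does Acme support two-way Slack sync?", "Yes. Task updates sync in both directions.")]))
```

The output goes inside a script tag with type="application/ld+json" on the page; validate it with a structured-data testing tool before shipping.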

Enterprise & Custom Solutions

10. Custom Conversation Intelligence Integration

What It Is: Connecting internal data sources (Gong, Chorus.ai, Intercom) to extract customer question patterns.

Use Case: Discovering proprietary question clusters from actual customer conversations.

Implementation: Requires engineering resources to extract and analyze transcript data; highest-intent question source available.

Best For: B2B SaaS companies with existing conversation intelligence platforms; uncovers questions competitors can't access.
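As a rough illustration of the extraction step, the sketch below pulls question sentences out of a plain-text transcript. It assumes a hypothetical export format in which each line starts with a speaker label such as "Prospect:"; real Gong, Chorus.ai, or Intercom exports differ, and this does not use any of their APIs:

```python
import re


def extract_questions(transcript, speaker_prefix="Prospect:"):
    """Return question sentences from lines spoken by the given speaker label."""
    questions = []
    for line in transcript.splitlines():
        line = line.strip()
        if not line.startswith(speaker_prefix):
            continue  # skip rep/agent lines
        utterance = line[len(speaker_prefix):].strip()
        # Split into sentences ending in . ! or ? and keep only the questions.
        for sentence in re.findall(r"[^.!?]+[.!?]", utterance):
            sentence = sentence.strip()
            if sentence.endswith("?"):
                questions.append(sentence)
    return questions
```

Keeping the prospect's exact wording matters more than tidy phrasing here, since that wording is what later becomes question-as-heading copy.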

How MaximusLabs AI Maximizes Tool Stack Efficiency

Rather than relying on single-platform solutions, MaximusLabs AI implements a layered discovery workflow that combines free tools for breadth, mid-tier platforms for depth, and proprietary customer data for intent validation. This eliminates the "tool paralysis" many teams face while ensuring comprehensive question coverage across all AI platforms, not just Google-optimized queries.

Q6. How Do ChatGPT, Perplexity, and Google AI Overviews Differ for Keyword Research? [toc=Platform-Specific Strategies]

AI answer engines operate on fundamentally different retrieval architectures, ranking signals, and display formats. Treating ChatGPT, Perplexity, and Google AI Overviews as interchangeable platforms, a common mistake among traditional agencies, results in suboptimal performance across all three. Each platform requires tailored keyword strategies aligned with its unique technical infrastructure and user behavior patterns.

The strategic implication: Platform-specific optimization is no longer optional. With only 8-12% URL overlap between ChatGPT citations and Google rankings, content optimized exclusively for one platform underperforms on others.

The One-Size-Fits-All Trap: Why Generic AEO Fails

Traditional SEO agencies default to applying the same tactical checklist across all AI platforms: "Add FAQ schema, include question headings, write longer content." This superficial approach ignores critical platform distinctions in how AI systems retrieve, evaluate, and display content.

The flawed assumption: If content ranks well for Google AI Overviews, it will automatically perform well in ChatGPT and Perplexity. Research suggests the opposite: for commercial queries, there is a negative correlation (r ≈ -0.98) between ChatGPT preferences and Google rankings.

Why platform-agnostic strategies fail:

ChatGPT optimizes for: Comprehensive narrative depth (3,000+ word resources covering every product nuance)

Perplexity optimizes for: Structured citation-worthy content (explicit source attribution, quotable data points)

Google AI Overviews optimize for: Existing SEO equity + answer-ready snippets (domain authority combined with featured snippet formatting)

Agencies treating these as identical miss fundamental architectural differences in how each platform evaluates content authority, extracts answers, and attributes sources.

Platform-Specific Retrieval & Display Characteristics

ChatGPT Search Behavior

Query Length Preference: 15-25 words (long-form conversational queries)

Example Query: "Which project management software offers two-way Slack sync, custom workflow automation, and time tracking for distributed teams under 50 people with SOC 2 compliance?"

Retrieval Pattern:

- Performs multiple grounding searches (internal queries the LLM runs to gather information)

- Synthesizes information from 10-20 sources

- Rarely displays explicit source citations in standard ChatGPT (Search mode differs)

Content Preferences:

- Authoritative narrative answers (detailed explanations with context)

- Comprehensive product documentation and Help Center depth

- Technical implementation guides with step-by-step instructions

- Use-case examples showing application across scenarios

Optimization Strategy:

✅ Create exhaustive resources answering every product nuance

✅ Optimize Help Centers and product documentation (naturally contain long-tail technical questions)

✅ Use clear heading hierarchy with questions as H2/H3 tags

✅ Include implementation details, not just feature descriptions

Perplexity Search Behavior

Query Length Preference: 8-15 words (medium conversational queries)

Example Query: "Best CRM with native email marketing for under $100 per month"

Retrieval Pattern:

- Displays 5-10 explicit source citations per answer

- Users can click through to original sources

- Citation visibility = competitive advantage

Content Preferences:

- Structured answer formats (bullet lists, comparison tables, numbered steps)

- Quotable data points and statistics

- Clear attribution-friendly content (easily extracted facts)

- Feature comparison pages with structured data

Optimization Strategy:

✅ Create citation-worthy comparison tables (feature-by-feature breakdowns)

✅ Lead with quotable statistics and data points

✅ Use FAQ schema and structured data markup

✅ Format answers in 40-60 word extractable blocks
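A quick way to audit the 40-60 word guideline is to count words per answer block. This sketch assumes the blocks have already been extracted as plain text and uses simple whitespace splitting, so counts may differ slightly from a CMS word counter:

```python
def check_answer_blocks(blocks, low=40, high=60):
    """Return (word_count, within_range) for each answer block."""
    results = []
    for text in blocks:
        n = len(text.split())  # whitespace-delimited word count
        results.append((n, low <= n <= high))
    return results
```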

"Create content similar to what is being cited, basically, create my own listicle articles around my target prompts, with my brand mentioned prominently within the article." r/SEO, Reddit Thread

Google AI Overviews Search Behavior

Query Length Preference: 3-8 words (shorter traditional queries)

Example Query: "project management software Slack integration"

Retrieval Pattern:

- Integrates with traditional SERP results

- Pulls from already-ranking content (typically positions 1-10)

- Displays featured snippet-style answers

Content Preferences:

- Domain authority and existing SEO equity

- Featured snippet-optimized formatting

- Traditional E-E-A-T signals (author bios, citations, expert credentials)

Optimization Strategy:

✅ Leverage existing Google rankings with answer-ready formatting upgrades

✅ Target featured snippet capture with concise paragraph answers

✅ Strengthen E-E-A-T signals (author expertise, cited sources)

✅ Maintain traditional SEO best practices (backlinks, technical optimization)

The 8-12% Overlap Problem: Why Separate Strategies Matter

Research comparing ChatGPT citations with Google's top organic results revealed minimal overlap: only 8-12% of URLs appear in both systems. For commercial queries, the negative correlation means optimizing exclusively for Google rankings may actively harm ChatGPT visibility.
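To check the overlap on your own query set, compare the URLs each system cites for the same query. A minimal sketch, assuming you have collected ChatGPT-cited URLs and Google top-10 URLs by hand; it normalizes host case, a leading "www.", and trailing slashes, and divides by the number of ChatGPT-cited URLs (an assumed denominator, since studies define overlap differently):

```python
from urllib.parse import urlsplit


def normalize(url):
    """Reduce a URL to host + path so trivial variants compare equal."""
    parts = urlsplit(url)
    host = parts.netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    path = parts.path.rstrip("/") or "/"
    return host + path


def overlap_rate(chatgpt_urls, google_urls):
    """Share of ChatGPT-cited URLs that also appear in Google's results (0.0-1.0)."""
    a = {normalize(u) for u in chatgpt_urls}
    b = {normalize(u) for u in google_urls}
    if not a:
        return 0.0
    return len(a & b) / len(a)
```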

Strategic Implications:

Content Length Paradox:

- Google prefers 1,500-2,000 word focused articles targeting specific keywords

- ChatGPT prefers 3,000+ word comprehensive resources covering topic clusters

- Solution: Create platform-specific content or hybrid architectures addressing both needs

Citation Attribution Differences:

- Perplexity explicitly displays source URLs (drives direct traffic)

- ChatGPT synthesizes without standard citation (drives branded search/direct traffic)

- Google AI Overviews integrate with SERP results (drives organic clicks)

- Solution: Track Share of Answers separately per platform; accept different attribution models

Authority Signal Variations:

- Google weighs backlink profiles heavily

- ChatGPT prioritizes content comprehensiveness and recency

- Perplexity balances domain authority with content structure

- Solution: Multi-signal optimization rather than backlink-exclusive strategies

MaximusLabs AI's Search Everywhere Optimization Approach

MaximusLabs AI addresses platform fragmentation through adaptable content architecture that simultaneously targets multiple AI platforms without requiring separate content versions for each.

Simultaneous Multi-Platform Targeting:

For ChatGPT: Comprehensive product documentation and Help Center depth covering every feature, integration, and use case, satisfying the platform's preference for exhaustive narrative answers.

For Perplexity: Structured comparison pages with feature-by-feature tables, quotable statistics, and FAQ sections formatted for easy citation extraction.

For Google AI Overviews: Existing SEO equity reinforcement plus answer-ready formatting upgrades (featured snippet optimization, structured data implementation, E-E-A-T strengthening).

Technical Implementation:

- Question-as-heading hierarchy (H2/H3 formatted as actual user questions)

- Dual-format content blocks (narrative paragraphs for ChatGPT + bullet summaries for Perplexity)

- Schema markup implementation (FAQ, HowTo, DefinedTerm)

- Clean HTML architecture (no JavaScript rendering dependencies)
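The question-as-heading and clean-HTML items can be audited together: parse the HTML exactly as the server returns it (no JavaScript executed) and list the H2/H3 headings, flagging which are phrased as questions. A sketch using Python's standard html.parser module; headings injected client-side by JavaScript will not appear in its output, which is the dependency this check is meant to surface:

```python
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collect the text of <h2> and <h3> elements from raw, unrendered HTML."""

    def __init__(self):
        super().__init__()
        self.headings = []   # list of (tag, text)
        self._current = None
        self._buf = []

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._current = tag
            self._buf = []

    def handle_data(self, data):
        if self._current:
            self._buf.append(data)  # includes text inside nested tags like <b>

    def handle_endtag(self, tag):
        if tag == self._current:
            self.headings.append((tag, "".join(self._buf).strip()))
            self._current = None


def audit_headings(html):
    """Return (tag, text, is_question) for every H2/H3 in the HTML."""
    parser = HeadingCollector()
    parser.feed(html)
    parser.close()
    return [(tag, text, text.endswith("?")) for tag, text in parser.headings]
```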

The strategic advantage: Rather than choosing which platform to prioritize, MaximusLabs AI structures content for cross-platform compatibility, maximizing total Share of Answers across the entire AI search ecosystem.

Platform-Specific Performance Tracking:

Track citations separately per platform to identify optimization opportunities:

- ChatGPT Share of Answers (mention frequency in synthesized responses)

- Perplexity citation rate (explicit source list appearances)

- Google AI Overview inclusion (percentage of target queries triggering overviews with brand mention)

The 8-12% overlap validates the need for platform-specific strategies. Organizations optimizing for a single platform, typically Google due to familiarity, risk missing the 88-92% of cited URLs that don't overlap, where different content characteristics win citations.

Q7. How Do You Validate Which AEO Keywords Will Actually Drive Revenue? [toc=Intent Validation]

The most challenging aspect of AEO keyword research is the AEO Volume Delusion, the absence of reliable, publicly available query volume data for ChatGPT, Perplexity, and other AI platforms. Unlike traditional SEO, where Google Keyword Planner provides directionally accurate search volume estimates, no equivalent API or data set exists for LLM query patterns.

This data gap forces a fundamental strategic shift: from volume estimation (which keywords get 10,000 monthly searches?) to intent validation (which questions do high-intent buyers ask before purchasing?).

The competitive advantage: While traditional agencies guess at question importance based on unreliable proxies, sophisticated teams implement systematic intent validation frameworks using actual customer data and competitive intelligence.

Traditional Agencies' Speculative Validation Approach

Most SEO agencies default to three flawed validation methods when transitioning to AEO:

Flawed Method 1: Google PAA Appearance Frequency

Logic: "If a question appears in Google's People Also Ask boxes frequently across related queries, it must be important for AEO."

Why It Fails: Google PAA reflects Google's algorithm priorities, not ChatGPT or Perplexity retrieval patterns. The 8-12% URL overlap between platforms suggests Google signals are unreliable proxies for AI platform performance.

Flawed Method 2: Social Listening Volume

Logic: "Questions mentioned frequently on Reddit, Twitter, or forums indicate high search volume on AI platforms."

Why It Fails: Social media mentions reflect viral topics and discussion trends, not transactional purchase intent. High social volume often correlates with low conversion potential (informational curiosity rather than buying behavior).

Flawed Method 3: Content Gap Analysis Alone

Logic: "If competitors aren't answering a question comprehensively, it's an AEO opportunity."

Why It Fails: Competitors may not address questions because those questions lack commercial value. Uncovered questions might represent low-intent informational queries, not conversion-driving searches.

"Most people are still doing manual checks because the tooling is pretty limited right now."

r/SEO, Reddit Thread

These speculative approaches waste resources on questions that generate citations but minimal revenue: vanity metrics that don't influence pipeline.

The AI-Era Validation Paradigm: Conversion Potential Over Volume

Effective AEO validation shifts focus from unknowable volume metrics to provable conversion signals:

Validation Signal 1: Competitor Paid Search Investment

If competitors consistently bid on specific keywords or questions in Google Ads, those terms likely convert for them. Use competitor PPC campaigns as a proxy for commercial intent.

Tools: Semrush PPC Keyword tool, SpyFu, Ahrefs PPC Keywords report

Methodology:

- Identify top 3-5 direct competitors

- Extract their paid search keywords

- Translate paid keywords into conversational questions

- Prioritize questions competitors invest ad spend on

Example Translation:

- Competitor PPC Keyword: "project management software Slack integration"

- AEO Question: "Which project management tools offer real-time two-way Slack sync?"
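One way to apply the final methodology step is to count how many competitors bid on each paid keyword. This sketch assumes you have already exported each competitor's paid keywords (from Semrush, SpyFu, or Ahrefs) into plain lists; it does not call those tools, and the competitor names and keywords are hypothetical:

```python
from collections import Counter


def rank_paid_keywords(competitor_keywords):
    """Map {competitor: [paid keywords]} to keywords ranked by number of competitors bidding."""
    counts = Counter()
    for keywords in competitor_keywords.values():
        # Use a set so each competitor counts at most once per keyword.
        counts.update({k.strip().lower() for k in keywords})
    return counts.most_common()


print(rank_paid_keywords({
    "CompetitorA": ["pm software slack integration", "gantt chart tool"],
    "CompetitorB": ["PM software Slack integration"],
}))
```

Keywords that several competitors pay for are the strongest candidates for translation into conversational questions.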

Validation Signal 2: Question Specificity (Long-Tail = Higher Intent)

Detailed, multi-part questions signal near-purchase evaluation behavior.

High-Intent Pattern: "Does [Product] support [specific feature] for [specific use case] with [specific constraint]?"

Low-Intent Pattern: "What is [broad category]?"

Example Comparison:

✅ High Intent: "Can Notion handle database views with rollup formulas for project cost tracking?"

❌ Low Intent: "What is project management software?"

The more specific the question, the closer the asker is to a purchase decision.
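Specificity can be approximated with a simple heuristic score. The sketch below is an assumption, not a validated model: it adds question length to a bonus for constraint markers (prepositions like "for", "with", "under", dollar amounts, team sizes, integration terms), using an arbitrary weight of 5 per marker:

```python
import re

# Hypothetical constraint markers; tune these to your product category.
CONSTRAINT_PATTERNS = [
    r"\bfor\b", r"\bwith\b", r"\bunder\b", r"\bwithout\b",
    r"\$\d+", r"\b\d+\s*(people|users|seats)\b", r"\bintegrat\w*",
]


def specificity_score(question, weight=5):
    """Word count plus `weight` points per distinct constraint marker present."""
    q = question.lower()
    words = len(q.split())
    constraints = sum(1 for p in CONSTRAINT_PATTERNS if re.search(p, q))
    return words + weight * constraints
```

Scores are only meaningful relative to each other within one question set; use them to sort, not as absolute intent measures.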

Validation Signal 3: Customer Support Ticket Frequency

Questions customers ask post-purchase reveal critical pre-purchase evaluation criteria.

Methodology:

- Analyze support ticket history (past 6-12 months)

- Identify recurring question themes

- Categorize by funnel stage (implementation questions often reflect pre-purchase concerns)

- Prioritize questions asked by ICP segments

Insight: If customers repeatedly ask "How do I migrate from [Competitor] to [Your Product]?" post-purchase, prospects are likely asking the same question pre-purchase, making it a high-value AEO target.
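The ticket-analysis steps above can be sketched as keyword-based theme counting restricted to ICP segments. The ticket fields (segment, subject) and the theme vocabulary are hypothetical placeholders for whatever your helpdesk export contains:

```python
import re
from collections import Counter

# Hypothetical theme vocabulary; each entry is matched as a word prefix.
THEMES = {
    "migration": ("migrat", "import from", "switch from"),
    "slack": ("slack",),
    "sso": ("sso", "saml", "okta"),
}


def theme_counts(tickets, icp_segments):
    """Count tickets per theme, considering only tickets from ICP segments."""
    counts = Counter()
    for ticket in tickets:
        if ticket["segment"] not in icp_segments:
            continue
        text = ticket["subject"].lower()
        for theme, prefixes in THEMES.items():
            # \b before each prefix avoids matches inside words (e.g. "lesson").
            if any(re.search(r"\b" + re.escape(p), text) for p in prefixes):
                counts[theme] += 1
    return counts
```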

MaximusLabs AI's Question Fan-Out Analysis Methodology

MaximusLabs AI addresses the volume data gap through proprietary Question Fan-Out Analysis, mapping how single customer prompts expand into multiple grounding searches that LLMs perform internally.

The Question Fan-Out Concept:

When a user asks ChatGPT: "I need project management software for a remote team of 25 people that integrates with our existing tools (Slack, Google Workspace, Zoom) and costs under $200/month with SOC 2 compliance."

The LLM doesn't search for that exact 30-word query. Instead, it performs multiple grounding searches (concise internal queries):

- "Project management software remote teams"

- "PM software Slack integration"

- "PM software Google Workspace sync"

- "Project management SOC 2 compliance"

- "Project management software pricing under $200"

Question Fan-Out Analysis identifies these grounding search patterns by analyzing:

Source 1: Sales Call Transcripts

- Record discovery calls and demos (using Gong, Chorus.ai, or manual transcription)

- Extract questions prospects ask during evaluation

- Map multi-part questions into component grounding searches

- Prioritize questions asked by deals that closed (conversion validation)

Source 2: Support Ticket History

- Mine support tickets for implementation questions

- Identify feature clarification requests

- Extract integration compatibility questions

- Focus on tickets from ICP customer segments

Source 3: Community Forum Mining

- Analyze Reddit, Quora, industry forums for question patterns

- Identify recurring pain points prospects discuss

- Extract exact phrasing (user language, not marketing language)

- Validate question importance via upvotes/engagement

Output: Validated question clusters organized by conversion potential rather than unknowable volume estimates.
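To make the fan-out idea concrete, here is a rule-based sketch that decomposes the example prompt into the kind of short sub-queries listed earlier. It is an illustration only: LLMs do not expose their grounding searches this way, and the integration and compliance vocabularies, the budget pattern, and the default topic are assumptions:

```python
import re

# Hypothetical vocabularies; a real mapping would come from your question research.
INTEGRATIONS = ("slack", "google workspace", "zoom")
COMPLIANCE = ("soc 2", "hipaa", "gdpr")


def fan_out(prompt, topic="project management software"):
    """Split one long prompt into short topic + constraint sub-queries."""
    p = prompt.lower()
    queries = []
    if "remote" in p:
        queries.append(f"{topic} remote teams")
    queries += [f"{topic} {name} integration" for name in INTEGRATIONS if name in p]
    queries += [f"{topic} {term} compliance" for term in COMPLIANCE if term in p]
    budget = re.search(r"under \$(\d+)", p)
    if budget:
        queries.append(f"{topic} pricing under ${budget.group(1)}")
    return queries
```

Each generated sub-query points to a content gap to check: does a page of yours answer that narrow question directly?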

Prioritization Framework:

Tier 1 (Highest Priority): Feature/integration/use-case questions asked during closed-won sales calls

Tier 2: Questions appearing in both support tickets and competitor PPC campaigns

Tier 3: Recurring community questions with demonstrated engagement

Tier 4: Content gap questions with conversion signal validation

Deprioritize: TOFU questions lacking commercial intent signals
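The tier rules above translate directly into a small function. The signal names (closed_won_call, product_specific, and so on) are hypothetical boolean fields you would populate from your own sales, support, PPC, and community data:

```python
def assign_tier(signals):
    """Return priority tier 1-4 for a question, or None to deprioritize it.

    signals: dict of hypothetical boolean fields describing one question.
    """
    if signals.get("closed_won_call") and signals.get("product_specific"):
        return 1  # feature/integration/use-case question from closed-won calls
    if signals.get("support_ticket") and signals.get("competitor_ppc"):
        return 2
    if signals.get("community_engagement"):
        return 3
    if signals.get("content_gap") and signals.get("conversion_signal"):
        return 4
    return None  # e.g. TOFU questions with no commercial intent signal
```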

Measurement Framework: Beyond Vanity Metrics

Traditional SEO tracks rankings and organic clicks. AEO requires fundamentally different KPIs:

Primary Metric: Share of Answers

Definition: Percentage of target questions where your brand receives citations/mentions across AI platforms.

Calculation: (Questions citing your brand / Total target questions tested) × 100

Tracking Method: Manual checks or emerging AI citation tracking tools; test the same question set monthly.

Secondary Metric: Zero-Click Mention Rate

Definition: Frequency of brand mentions within AI-generated answers (not just source lists).

Value: Zero-click mentions often drive direct traffic or branded search as users open new tabs to visit your site, creating attribution challenges but indicating strong AI visibility.

Tertiary Metric: AI-Referred Conversion Rate

Definition: Conversion rate of traffic identified as AI-referred (often misattributed as direct or branded search).

Tracking Method: Monitor direct traffic spikes and branded search increases following AI optimization efforts; segment by landing page to identify AI-optimized content performance.
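A starting point for isolating AI-referred sessions is to classify the HTTP referrer. The host list below is an assumption and is not exhaustive; many AI-driven visits carry no referrer at all and will land in the direct bucket, which is exactly the misattribution problem described above:

```python
from urllib.parse import urlsplit

# Assumed, non-exhaustive mapping of AI assistant referrer hosts; verify against your logs.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
}


def classify_referrer(referrer):
    """Label a session's referrer as an AI platform, 'other', or 'direct/unknown'."""
    if not referrer:
        return "direct/unknown"
    host = urlsplit(referrer).netloc.lower()
    if host.startswith("www."):
        host = host[4:]
    return AI_REFERRER_HOSTS.get(host, "other")
```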

The 6x Conversion Rate Difference: LLM-referred traffic converts at roughly 6x higher rates (Webflow case study), likely because users arrive pre-qualified: AI has already recommended your brand as a solution match.

"Users coming from AI tools already trust the brand before they even land on the site."

r/SEO, Reddit Thread

The validation framework: Prioritize questions using conversion signals (competitor PPC investment, customer conversation frequency, specificity scoring), then measure success through Share of Answers and conversion rate rather than traffic volume, aligning AEO efforts with revenue outcomes instead of vanity metrics.

Q8. What Is the Step-by-Step AEO Keyword Research Process? (With Live Case Study) [toc=Implementation Process]

Effective AEO keyword research requires a systematic workflow that combines question discovery, intent validation, content mapping, and performance tracking. Unlike traditional SEO's linear "keyword → content → ranking" model, AEO demands iterative cluster-building where single landing pages target hundreds of related questions.

The following process integrates tools, customer data, and competitive intelligence into a repeatable methodology, illustrated through a B2B SaaS company case study achieving 127 ChatGPT citations within 90 days.


Step 1: Seed Topic Identification & ICP Alignment

Objective: Define 5-10 core topics aligned with your Ideal Customer Profile's buying journey and product value propositions.

Methodology:

- Map product features to customer pain points

- Identify topics prospects research before purchase

- Prioritize BOFU/MOFU topics over TOFU awareness content

- Validate topics against sales call themes

Case Study Example: Mid-market project management SaaS identified seed topics: "Project management Slack integration," "PM software migration," "Remote team collaboration tools," "Project cost tracking solutions."

Output: 5-10 seed topics with clear commercial intent and ICP relevance.

Step 2: Initial Question Discovery (Breadth)

Objective: Generate 50-100 initial questions per seed topic using free and mid-tier tools.

Tool Workflow:

2A. Google PAA Extraction

- Search seed topic in Google

- Extract 4-8 PAA questions

- Click each to expand secondary questions

- Document 20-30 questions per seed topic

2B. AnswerThePublic Question Mapping

- Enter seed topic

- Export questions organized by interrogative (how, what, which, why, when, where)

- Identify comparison queries ("X vs Y") and preposition phrases ("X for Y")

2C. AlsoAsked Question Tree Expansion

- Map PAA question branches 3-4 levels deep

- Visualize how questions spawn sub-questions

- Identify long-tail question patterns

Case Study Progress: Generated 287 initial questions across 5 seed topics using free tools (time investment: ~4 hours).

Step 3: Customer Conversation Mining (Depth + Intent)

Objective: Extract 30-50 high-intent questions from actual customer interactions, questions competitors can't access.

Data Sources:

3A. Sales Call Analysis

- Review 10-15 recent discovery call recordings

- Extract questions prospects asked during demos

- Prioritize questions from closed-won deals

- Note exact phrasing (prospect language)

3B. Support Ticket Mining

- Analyze 6-12 months of support tickets

- Identify feature clarification questions

- Extract integration compatibility questions

- Focus on tickets from ICP segments

3C. Community Platform Research

- Search Reddit for "[product category] + questions"

- Mine Quora for comparison questions

- Extract G2/Capterra review questions

- Document recurring pain point themes

"Write content that is meaningful, by that I mean use real examples, results, metrics."

r/SEO, Reddit Thread

Case Study Progress: Discovered 43 proprietary questions from sales calls and support tickets, none appeared in competitor content (competitive advantage identified).

Step 4: Question Clustering & Topic Mapping

Objective: Organize 100-300 related questions into topic clusters mapped to specific landing pages.

Clustering Methodology:

- Group questions by search intent (comparison, implementation, feature-specific, troubleshooting)

- Map clusters to existing landing pages (product pages, Help Center articles, comparison pages)

- Identify content gaps requiring new page creation

- Prioritize clusters by conversion potential
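A first-pass version of the intent grouping can be done with cue phrases before manual review. The rule set below is hypothetical and deliberately crude (the first matching rule wins); treat its output as a draft for a human to correct:

```python
# Hypothetical cue phrases per intent; order matters because the first match wins.
INTENT_RULES = [
    ("comparison", (" vs ", " versus ", "compare", "alternative", " which ", " best ")),
    ("troubleshooting", ("not working", " error", " fix ", "why isn't", "why doesn't")),
    ("implementation", ("how do i", "how to", "set up", "setup", "migrate")),
    ("feature-specific", (" does ", " can ", "support", "integrat")),
]


def classify_intent(question):
    """Return the first intent whose cue appears in the (space-padded) question."""
    q = f" {question.lower()} "  # padding lets word cues match at the edges
    for intent, cues in INTENT_RULES:
        if any(cue in q for cue in cues):
            return intent
    return "unclassified"


def cluster_by_intent(questions):
    """Group questions into {intent: [questions]}."""
    groups = {}
    for question in questions:
        groups.setdefault(classify_intent(question), []).append(question)
    return groups
```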

Question Cluster Example (Project Management Slack Integration):

- "Which PM software offers two-way Slack sync?"

- "How do I set up automatic Slack notifications for task updates?"

- "Can [Product] send daily standup reminders via Slack?"

- "Does [Product] support Slack slash commands?"

- [+47 related integration questions]

Case Study Progress: Organized 287 questions into 8 topic clusters; mapped to 3 existing product pages, 4 Help Center sections, and 1 new comparison page requiring creation.

Step 5: Intent Validation & Prioritization

Objective: Score question clusters by conversion potential using proxy signals (no volume data available).

Validation Checklist:

✅ Competitor PPC Validation: Do competitors bid on related keywords? (Semrush PPC analysis)

✅ Question Specificity Scoring: Long-tail multi-part questions = higher intent

✅ Customer Conversation Frequency: How often do prospects/customers ask this?

✅ Funnel Stage Assessment: BOFU/MOFU questions prioritized over TOFU

Prioritization Tiers:

- Tier 1: Questions appearing in closed-won sales calls + competitor PPC campaigns

- Tier 2: Feature/integration questions from support tickets

- Tier 3: Community questions with high engagement

- Tier 4: Content gap questions without conversion validation

Case Study Progress: Identified 34 Tier 1 questions for immediate content development; deprioritized 89 low-intent TOFU questions.

Step 6: Content Architecture & Implementation

Objective: Create or optimize content with answer-ready formatting targeting question clusters.

Content Requirements:

- Question-as-heading structure (H2/H3 tags formatted as actual user questions)

- 40-60 word extractable answer blocks

- Comparison tables for feature questions

- FAQ sections with schema markup

- Implementation guides with numbered steps

Technical Specifications:

- Clean HTML (no JavaScript rendering dependencies)

- FAQ schema, HowTo schema, DefinedTerm schema

- Internal linking between related questions

- Author expertise signals (bios, credentials)

Case Study Implementation: Optimized 3 product pages (added 150 questions via expanded content sections), created 4 new Help Center articles (67 questions), published 1 comparison page (23 questions). Total content: 240 questions covered.

Timeline: 6 weeks (content creation and technical implementation).

Step 7: Multi-Platform Tracking Setup

Objective: Establish baseline Share of Answers measurement across AI platforms.

Tracking Methodology:

Manual Tracking (Current Standard):

- Create spreadsheet with target questions (50-100 priority questions)

- Test each question monthly in ChatGPT, Perplexity, Google AI Overviews

- Record: Brand mentioned? (Yes/No), Cited in sources? (Yes/No), Mention context (positive/neutral/negative)

- Calculate Share of Answers per platform

Automated Tracking (Emerging Tools):

- AI citation monitoring platforms (synthetic mention tracking)

- Requires manual validation due to tool limitations

"Most people are still doing manual checks because the tooling is pretty limited right now."

r/SEO, Reddit Thread

Case Study Baseline: Pre-optimization = 0 ChatGPT citations for target questions; 2 Perplexity citations; 4 Google AI Overview appearances.

Step 8: Performance Measurement & Iteration

Objective: Track Share of Answers growth and conversion impact over time.

KPI Dashboard:

- Share of Answers (per platform, per question cluster)

- Zero-click mention rate (brand mentioned in synthesized answer vs. just source list)

- Direct traffic growth (proxy for AI-referred traffic)

- Branded search volume (indirect AI attribution)

- Conversion rate by landing page (AI-optimized content performance)

Case Study Results (90-Day Period):

- ChatGPT citations: 0 → 127 (across 240 target questions)

- Perplexity citations: 2 → 83

- Google AI Overview appearances: 4 → 56

- Direct traffic increase: +34% (attributed to AI visibility)

- Conversion rate for AI-optimized pages: 4.7% vs 2.8% site average (+68%)
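The +68% figure is a relative lift, not a percentage-point gain: the absolute difference is 1.9 points. A one-line check:

```python
def relative_lift(treated_rate, baseline_rate):
    """Relative difference of treated vs. baseline rate, as a percentage."""
    return (treated_rate - baseline_rate) / baseline_rate * 100


print(round(relative_lift(4.7, 2.8)))  # 68
```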

Iteration Strategy: Expand question coverage to 500+ questions; optimize underperforming clusters; test platform-specific content variations.

MaximusLabs AI systematizes this workflow through proprietary tools that automate question extraction, intent scoring, and performance tracking, eliminating manual spreadsheet management while maintaining strategic oversight of cluster prioritization and content quality.

Q9. How Do You Leverage People Also Ask (PAA) and Voice Search for AEO Keyword Discovery? [toc=PAA & Voice Search]

Google's People Also Ask (PAA) feature and voice search query patterns represent two underutilized data sources for AEO keyword discovery. While neither directly reveals ChatGPT or Perplexity query volume, both expose conversational question structures and semantic relationships that translate effectively to AI platform optimization.

The strategic opportunity: PAA boxes reveal question expansion patterns, how single seed topics branch into hundreds of related queries. Voice search patterns expose natural language phrasing that mirrors how users query AI platforms.

Step 1: Strategic PAA Mining for Question Expansion

Understanding PAA Mechanics

Google's PAA algorithm identifies semantically related questions users frequently ask around seed topics. Each PAA box typically displays 4-8 initial questions, with additional questions loading dynamically when users click to expand answers.

Why PAA Data Translates to AEO:

- Questions reflect actual user information needs (not marketer assumptions)

- Semantic relationships mirror AI grounding search patterns

- Question branching reveals topic cluster structures

- Long-tail variations indicate specificity levels users seek

"AnswerThePublic and AlsoAsked map the question clusters people actually type."

r/SEO, Reddit Thread

PAA Extraction Workflow:

Method 1: Manual PAA Harvesting

- Enter seed keyword/topic in Google search

- Identify PAA box (typically appears after first organic result)

- Document initial 4-8 questions displayed

- Click each question to expand answer AND trigger 2-3 new related questions

- Repeat expansion process for newly revealed questions (creates question trees)

- Map question relationships (parent topic → sub-questions → tertiary questions)

Time Investment: 15-20 minutes per seed topic generates 30-50 questions
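The manual harvesting loop above is a breadth-first expansion. Every clicked question reveals children, and each child is queued for its own expansion.

The sketch below assumes you have recorded by hand which questions each click revealed. No scraping is involved. The `revealed` dict and the `build_question_tree` helper are hypothetical. The sketch turns those notes into parent → child edges, using this section's example questions.

```python
from collections import deque


def build_question_tree(seed, revealed, max_depth=3):
    """Breadth-first expansion of harvested PAA questions.

    `revealed` maps a question to the new questions that appeared when it
    was clicked (recorded manually). Returns (parent, child, depth) edges and
    skips questions already seen, so PAA loops cannot create cycles.
    """
    edges = []
    seen = {seed}
    queue = deque([(seed, 0)])
    while queue:
        question, depth = queue.popleft()
        if depth >= max_depth:
            continue  # stop expanding below the requested depth
        for child in revealed.get(question, []):
            if child in seen:
                continue
            seen.add(child)
            edges.append((question, child, depth + 1))
            queue.append((child, depth + 1))
    return edges


revealed = {
    "project management software": [
        "What is the best project management software?",
        "Which project management software integrates with Slack?",
    ],
    "Which project management software integrates with Slack?": [
        "Does Asana support two-way Slack sync for notifications?",
    ],
    "Does Asana support two-way Slack sync for notifications?": [
        "How do I set up Asana Slack integration for automatic updates?",
    ],
}

# Print the tree with indentation proportional to depth.
for parent, child, depth in build_question_tree("project management software", revealed):
    print("  " * depth + child)
```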

Method 2: AlsoAsked Tool Automation ($15-49/month)

- Enter seed topic into AlsoAsked platform

- Generate visual question tree (3-4 expansion levels automatically mapped)

- Export question list to spreadsheet

- Analyze question branching patterns (identifies high-value clusters)

Output Example: "Project management software" expands into:

- Level 1: "What is the best project management software?"

- Level 2: "Which project management software integrates with Slack?"

- Level 3: "Does Asana support two-way Slack sync for notifications?"

- Level 4: "How do I set up Asana Slack integration for automatic updates?"
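Once a question tree is exported, counting children per parent is a quick way to spot high-branching clusters. This sketch assumes a CSV with `parent` and `question` columns. That layout, the helper name and the sample rows are all illustrative assumptions, so adjust the keys to match the file your tool actually produces.

```python
import csv
import io
from collections import Counter


def branching_counts(csv_text):
    """Count child questions per parent question.

    Assumes hypothetical `parent` and `question` columns; root rows have an
    empty parent and are skipped.
    """
    reader = csv.DictReader(io.StringIO(csv_text))
    return Counter(row["parent"] for row in reader if row["parent"])


# Hypothetical export rows (the "cheapest" question is invented sample data).
export = """parent,question
,What is the best project management software?
What is the best project management software?,Which project management software integrates with Slack?
What is the best project management software?,What is the cheapest project management software?
Which project management software integrates with Slack?,Does Asana support two-way Slack sync for notifications?
"""

# Most-branched parents first: these are candidate high-value clusters.
for parent, n in branching_counts(export).most_common():
    print(n, parent)
```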

Method 3: AnswerThePublic Question Mapping (Free tier: 2 searches/day)

- Enter seed keyword

- Generate question visualization organized by interrogative (what, how, which, why, where, when, who)

- Export comparison queries ("X vs Y") and preposition phrases ("X for Y")

- Prioritize questions by category (features, implementation, comparisons)
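AnswerThePublic groups questions by their leading interrogative and separates out comparison and preposition queries. If you are merging questions from several sources, the sketch below is a rough local approximation of that grouping. The rules and sample queries are assumptions, and the first matching rule wins.

```python
import re
from collections import defaultdict

INTERROGATIVES = ("what", "how", "which", "why", "where", "when", "who")


def group_questions(queries):
    """Bucket queries by leading interrogative, then comparison, then preposition."""
    groups = defaultdict(list)
    for q in queries:
        text = q.strip().lower()
        first = re.match(r"[a-z]+", text)  # "what's" -> "what"
        if first and first.group(0) in INTERROGATIVES:
            groups[first.group(0)].append(q)
        elif re.search(r"\b(?:vs|versus)\b", text):
            groups["comparison"].append(q)   # "X vs Y"
        elif re.search(r"\bfor\b", text):
            groups["preposition"].append(q)  # "X for Y"
        else:
            groups["other"].append(q)
    return dict(groups)


queries = [
    "What is project management software?",
    "How does Asana work?",
    "Asana vs Trello",
    "project management software for small teams",
    "asana pricing",
]
for label, items in group_questions(queries).items():
    print(label, items)
```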

"I also use Answerthepublic, AlsoAsked for generating topic clusters on most frequently asked questions."

r/SEO, Reddit Thread

Step 2: Translating PAA Questions to AI Query Format

PAA questions are Google-optimized (short, keyword-focused). AI platforms prefer conversational, context-rich phrasing.

Translation Framework:

Translation Rules:

- Add specificity (team size, budget constraints, use case details)

- Convert keyword phrases into complete questions

- Include context that real users would provide

- Extend query length (15-25 words for AI platforms vs. 3-5 for Google)
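The translation rules can be applied with a simple template plus a word-count check against the 15-25 word target. The template wording is hypothetical. So are the context values (agency type, team size, sync requirement), which stand in for details you would pull from real customer conversations.

```python
def to_ai_query(paa_question, role, team_size, constraint):
    """Expand a short PAA question into a context-rich conversational query.

    The sentence template is an illustrative assumption, not a fixed format.
    """
    base = paa_question.rstrip("?").strip()
    return f"{base} for a {role} with {team_size} people who {constraint}?"


def in_ai_length_range(query, low=15, high=25):
    """Check the 15-25 word target for AI-platform queries (whitespace split)."""
    return low <= len(query.split()) <= high


q = to_ai_query(
    "Which project management software integrates with Slack?",
    role="small marketing agency",    # hypothetical context
    team_size="under 50",             # hypothetical context
    constraint="need two-way task sync",  # hypothetical context
)
print(q)
print(len(q.split()), in_ai_length_range(q))
```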

Step 3: Voice Search Pattern Analysis for Conversational Structure

Voice search queries (Siri, Alexa, Google Assistant) mirror AI platform query patterns. Both use natural language, complete sentences, and conversational phrasing.

Voice Query Characteristics:

- Question format (85%+ of voice searches are questions)

- Local intent modifiers ("near me," location-specific)

- Action-oriented language ("find," "show me," "help me")

- Longer query length (averaging 10-15 words vs. 3-5 for typed searches)
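The voice-query characteristics above can be turned into quick boolean checks when auditing a question list. This is a heuristic sketch. The modifier and phrase lists are assumptions seeded from this section's examples and should be extended for your market.

```python
import re

LOCAL_MODIFIERS = ("near me", "nearby")            # assumed starter list
ACTION_PHRASES = ("find", "show me", "help me")
QUESTION_WORDS = ("what", "how", "which", "why", "where", "when", "who",
                  "does", "can", "is", "are", "should")


def voice_profile(query):
    """Flag question format, local intent, action language, and length."""
    text = query.lower().strip()
    first = re.match(r"[a-z]+", text)  # "what's" -> "what"
    return {
        "is_question": text.endswith("?") or bool(first and first.group(0) in QUESTION_WORDS),
        "local_intent": any(m in text for m in LOCAL_MODIFIERS),
        "action_oriented": any(re.search(rf"\b{re.escape(p)}\b", text) for p in ACTION_PHRASES),
        "word_count": len(text.split()),
    }


print(voice_profile("What's the best coffee shop near me"))
print(voice_profile("coffee shop downtown"))
```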

"People ask AI tools completely different questions than they search on Google. Think conversational, like 'What's the best coffee shop near me' instead of 'coffee shop downtown.'" r/SEO, Reddit Thread

Voice Pattern Discovery Methods:

Method 1: Voice Search Autocomplete Mining

- Use mobile device voice search (Google Assistant, Siri)

- Speak seed topic as question: "What is [topic]?"

- Note autocomplete suggestions (reveals common voice query patterns)

- Document phrasing variations and natural language structures

Method 2: Question Structure Pattern Recognition

Analyze PAA and voice search data for recurring interrogative patterns:

High-Value Question Structures for AEO:

- Comparison: "Which [product] is better for [use case] compared to [alternative]?"

- Implementation: "How do I set up [feature] in [product] for [specific outcome]?"

- Feature-Specific: "Does [product] support [specific capability] for [specific scenario]?"

- Troubleshooting: "Why isn't [feature] working when I [specific action]?"

- Qualification: "What [product category] is best for [specific persona] with [constraint]?"
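The five structures above are regular enough to match with patterns, which helps tag a large PAA or voice-query export by structure. This sketch assumes questions follow the templates closely. Paraphrased questions fall through as `None`, so treat unmatched items as a manual-review queue.

```python
import re

# One pattern per template above; ".+" stands in for each [placeholder].
PATTERNS = [
    ("comparison", r"which .+ is better for .+ compared to .+\?"),
    ("implementation", r"how do i set up .+ in .+ for .+\?"),
    ("feature_specific", r"does .+ support .+ for .+\?"),
    ("troubleshooting", r"why (?:isn['’]t|is not) .+ working when i .+\?"),
    ("qualification", r"what .+ is best for .+ with .+\?"),
]


def question_structure(question):
    """Return the first matching structure label, or None if no template fits."""
    text = " ".join(question.split())
    for label, pattern in PATTERNS:
        if re.fullmatch(pattern, text, flags=re.IGNORECASE):
            return label
    return None


for q in [
    "Does Asana support two-way Slack sync for notifications?",
    "How do I set up Slack notifications in Asana for overdue tasks?",
    "What is project management software?",
]:
    print(question_structure(q), "|", q)
```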

Step 4: Clustering PAA Questions by Intent Type

Organize extracted questions into strategic clusters aligned with funnel stages.

Clustering Framework:

TOFU Questions (Deprioritize for AEO):

- "What is [category]?"

- "Why use [category]?"

- "Benefits of [category]"

MOFU Questions (Medium Priority):

- "Best [product] for [broad use case]"

- "How does [product] work?"

- "[Product] vs [competitor]"

BOFU Questions (Highest Priority):

- "Which [product] integrates with [specific tool]?"

- "Does [product] support [specific feature]?"

- "How much does [product] cost for [specific team size]?"

- "[Product] migration from [competitor]"

Implementation Questions (High Priority):

- "How do I set up [specific workflow] in [product]?"

- "Can [product] handle [edge case]?"

- "What's the fastest way to [specific outcome] using [product]?"
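A first-pass funnel tagger can encode the cluster examples above as keyword rules, then sort by the priority each heading assigns. The rules and the numeric priorities are hypothetical. They are a starting point for manual review, not a replacement for it.

```python
import re

# Hypothetical keyword rules, checked in order so that more specific
# intents (implementation, BOFU) win over generic ones.
STAGE_RULES = [
    ("implementation", [r"^how do i set up\b", r"^can .+ handle\b",
                        r"^what['’]?s the fastest way to\b"]),
    ("bofu", [r"\bintegrates? with\b", r"^does .+ support\b",
              r"^how much does .+ cost\b", r"\bmigrat(?:e|ion) from\b"]),
    ("mofu", [r"^best\b", r"\bvs\b", r"^how does .+ work\b"]),
    ("tofu", [r"^what is\b", r"^why use\b", r"^benefits of\b"]),
]

# Mirrors the headings: BOFU highest, implementation high, MOFU medium, TOFU deprioritized.
PRIORITY = {"bofu": 3, "implementation": 2, "mofu": 1, "tofu": 0, "unclassified": 0}


def funnel_stage(question):
    text = " ".join(question.lower().split())
    for stage, patterns in STAGE_RULES:
        if any(re.search(p, text) for p in patterns):
            return stage
    return "unclassified"


def prioritize(questions):
    """Sort by funnel priority, highest first; sorted() keeps ties in input order."""
    return sorted(questions, key=lambda q: PRIORITY[funnel_stage(q)], reverse=True)


qs = [
    "What is project management software?",
    "Asana vs Trello",
    "Which project management software integrates with Slack?",
    "How do I set up recurring tasks in ClickUp?",
]
for q in prioritize(qs):
    print(funnel_stage(q), "|", q)
```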

Step 5: Combining PAA Data with Customer Intelligence

PAA provides breadth; customer conversations provide depth and conversion validation.

Validation Workflow:

- Extract 100-150 questions via PAA/AlsoAsked

- Cross-reference against sales call transcripts and support tickets

- Prioritize questions appearing in BOTH PAA and customer conversations

- Flag PAA questions NOT appearing in customer data (potential low-intent traps)

- Add customer-specific questions missing from PAA (competitive advantages)

Output: Validated question clusters combining search demand signals (PAA) with proven conversion intent (customer data).
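Once questions are normalized, the validation workflow reduces to set operations. This sketch uses exact matching after lowercasing and stripping punctuation. That is a deliberate simplification, since real transcripts usually need fuzzy or semantic matching. The function names and sample questions are hypothetical.

```python
import re


def normalize(question):
    """Lowercase and drop punctuation so trivially different phrasings compare equal."""
    return " ".join(re.findall(r"[a-z0-9]+", question.lower()))


def validate(paa_questions, customer_questions):
    paa = {normalize(q): q for q in paa_questions}
    cust = {normalize(q): q for q in customer_questions}
    return {
        # In both sources: strongest demand + intent signal.
        "prioritize": sorted(paa[k] for k in paa.keys() & cust.keys()),
        # PAA only: possible low-intent traps.
        "flag_low_intent": sorted(paa[k] for k in paa.keys() - cust.keys()),
        # Customer only: gaps competitors relying on PAA will miss.
        "add_from_customers": sorted(cust[k] for k in cust.keys() - paa.keys()),
    }


paa = ["Does Asana support Slack?", "What is project management software?"]
customers = ["does asana support slack", "Can Asana import from Trello?"]
for bucket, items in validate(paa, customers).items():
    print(bucket, items)
```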

How MaximusLabs AI Maximizes PAA and Voice Search Intelligence

Rather than treating PAA as a simple question list generator, MaximusLabs AI implements question relationship mapping, analyzing how PAA branches reveal the conceptual structure AI platforms use to organize information. This enables content architecture that answers not just individual questions but entire question sequences users explore during research. Combined with voice search pattern analysis, this creates content that matches both Google SERP features AND AI platform conversational preferences, maximizing visibility across traditional and AI search channels simultaneously.

Q10. How Do You Optimize Content Structure for AEO Keyword Targeting? [toc=Content Structure Optimization]

Identifying the right AEO questions is necessary but insufficient. Content structure determines whether AI platforms can extract, parse, and cite your answers within generated responses. Most brands that target the right questions still fail to earn citations because their content architecture prevents reliable AI parsing.

The performance gap: Properly structured content increases citation rates 3-5x compared to standard article formatting, yet traditional SEO agencies recommend superficial "add FAQ sections" fixes without understanding LLM extraction mechanics.

Why Traditional Content Structure Fails for AI Citations

Traditional SEO agencies approach AEO with cosmetic adjustments: add a FAQ section, include schema markup, write slightly longer content. This surface-level strategy ignores how LLMs actually extract and evaluate information.

Common Agency Mistakes:

Mistake 1: Generic FAQ Additions

Adding FAQ sections without formatting answers for verbatim extraction. LLMs need 40-60 word answer blocks that can be quoted directly, not 300-word paragraphs requiring synthesis.

Mistake 2: Schema Markup Alone

Implementing FAQ schema without corresponding content structure improvements. Schema helps AI understand page organization but doesn't improve answer extraction if content remains unstructured.
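Because schema alone does not fix extraction, a safer pattern is to generate FAQPage markup from the same question/answer pairs that are rendered as visible headings and answer blocks. That way the two cannot drift apart. Below is a minimal sketch using the schema.org `FAQPage`, `Question` and `Answer` types; the helper name and sample pair are illustrative.

```python
import json


def faq_jsonld(pairs):
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs.

    Feed it the same pairs rendered on the page, so the markup never
    describes content that is not visible.
    """
    return json.dumps(
        {
            "@context": "https://schema.org",
            "@type": "FAQPage",
            "mainEntity": [
                {
                    "@type": "Question",
                    "name": question,
                    "acceptedAnswer": {"@type": "Answer", "text": answer},
                }
                for question, answer in pairs
            ],
        },
        indent=2,
    )


pairs = [(
    "Does Notion support real-time collaboration for remote teams?",
    "Yes. Multiple users can edit the same page simultaneously, with changes appearing immediately.",
)]
print(f'<script type="application/ld+json">\n{faq_jsonld(pairs)}\n</script>')
```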

Mistake 3: Keyword Stuffing in Headers

Using SEO-optimized headlines ("Project Management Software Integration Guide") rather than actual user questions ("Which project management software integrates with Slack?").

Mistake 4: JavaScript-Dependent Content

Building pages that require client-side JavaScript rendering to display content. Many AI crawlers execute JavaScript unreliably or not at all, so they favor clean, server-rendered HTML.

"FAQ's (people also ask section) comes in AEO, so you have to give the answer, OR mention those questions in your relevant blogs." r/SEO, Reddit Thread

The result: Inconsistent citation rates despite targeting correct questions. Content exists but AI platforms can't reliably extract it.

AI-Era Content Architecture Requirements

LLMs prefer specific content characteristics that facilitate extraction and citation:

Technical Parsing Preferences:

✅ Clean HTML Structure: Server-rendered content with semantic HTML tags (proper heading hierarchy, list elements, table markup)

✅ Explicit Q&A Patterns: Questions formatted as headings (H2/H3) with answers immediately following

✅ Extractable Answer Blocks: Concise 40-60 word answers suitable for verbatim citation

✅ Structured Comparative Data: Tables for feature comparisons, pricing tiers, specification lists

✅ List-Based Information: Bullet points for steps, requirements, benefits, features

❌ JavaScript Rendering Dependencies: Content requiring JS execution to display

❌ Complex Nested Structures: Multi-layer accordions, tabs, or hidden content

❌ Image-Based Text: Information embedded in images rather than extractable HTML

❌ Vague Paragraph Answers: Long narrative blocks without clear answer delineation

"Make sure your page is [server-side] rendered (no JS needed for rendering)."

r/SEO, Reddit Thread
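One way to test the server-rendering preference is to check whether an answer appears in the raw HTML that a non-JavaScript crawler receives. The sketch below uses Python's standard `html.parser` and ignores `<script>` and `<style>` contents. It assumes you already have the raw HTML as a string. Fetching that HTML is left out, as is detecting hidden accordion or tab content, and the helper names are hypothetical.

```python
from html.parser import HTMLParser


class VisibleText(HTMLParser):
    """Collect text a non-JS crawler would see, skipping <script>/<style> bodies."""

    def __init__(self):
        super().__init__()
        self._skip_depth = 0
        self.chunks = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.chunks.append(data)


def answer_in_raw_html(raw_html, answer):
    """True if the answer text is present without executing any JavaScript."""
    parser = VisibleText()
    parser.feed(raw_html)
    parser.close()
    text = " ".join(" ".join(parser.chunks).split())  # normalize whitespace
    return " ".join(answer.split()) in text


answer = "Yes, Notion supports real-time collaboration."
server_html = (
    "<h2>Does Notion support real-time collaboration?</h2>"
    "<p>Yes, Notion supports real-time collaboration.</p>"
)
client_html = (
    '<div id="root"></div>'
    '<script>document.getElementById("root").textContent = '
    '"Yes, Notion supports real-time collaboration.";</script>'
)
print(answer_in_raw_html(server_html, answer))  # True
print(answer_in_raw_html(client_html, answer))  # False
```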

Content Format Preferences by AI Platform:

ChatGPT: Comprehensive narrative answers (150-200 words) with supporting context; tolerates longer explanations

Perplexity: Structured answers with explicit data points; prefers bullet lists and tables for citation-worthiness

Google AI Overviews: Concise featured snippet-style answers (40-60 words); integrates with existing SERP content

MaximusLabs AI's Answer-Ready Content Architecture

MaximusLabs AI implements systematic content restructuring that transforms standard articles into AI-citation-optimized resources through multi-layer formatting strategies.

Architecture Component 1: Question-as-Heading Hierarchy

Replace SEO-optimized headers with actual user questions.

Traditional Structure (SEO-Optimized):

H2: Project Management Software Integrations

H3: Slack Integration Capabilities

H3: Google Workspace Sync Features

AEO-Optimized Structure (Question Format):

H2: Which Project Management Software Integrates with Slack?

H3: Does Asana Support Two-Way Slack Sync?

H3: How Do I Set Up Monday.com Slack Notifications?

H3: Can ClickUp Send Daily Standup Reminders via Slack?

Implementation: Format 80%+ of H2/H3 headings as actual questions users ask (interrogative structure: how, what, which, does, can, is).
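The 80% target can be audited mechanically. Extract the H2/H3 text, then count headings that end with "?" or start with one of the interrogatives listed above. Below is a sketch using the standard-library `html.parser`. The helper names are hypothetical, and the sample markup reuses the two heading structures shown earlier.

```python
from html.parser import HTMLParser

QUESTION_STARTERS = ("how", "what", "which", "does", "can", "is")


class HeadingCollector(HTMLParser):
    """Collect the text of every H2 and H3, including text in nested tags."""

    def __init__(self):
        super().__init__()
        self.headings = []
        self._current = None

    def handle_starttag(self, tag, attrs):
        if tag in ("h2", "h3"):
            self._current = []

    def handle_endtag(self, tag):
        if tag in ("h2", "h3") and self._current is not None:
            self.headings.append(" ".join("".join(self._current).split()))
            self._current = None

    def handle_data(self, data):
        if self._current is not None:
            self._current.append(data)


def is_question(heading):
    words = heading.lower().split()
    return heading.endswith("?") or (bool(words) and words[0] in QUESTION_STARTERS)


def question_heading_share(html):
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    if not collector.headings:
        return 0.0
    return sum(is_question(h) for h in collector.headings) / len(collector.headings)


traditional = ("<h2>Project Management Software Integrations</h2>"
               "<h3>Slack Integration Capabilities</h3>"
               "<h3>Google Workspace Sync Features</h3>")
aeo = ("<h2>Which Project Management Software Integrates with Slack?</h2>"
       "<h3>Does Asana Support Two-Way Slack Sync?</h3>"
       "<h3>How Do I Set Up Monday.com Slack Notifications?</h3>"
       "<h3>Can ClickUp Send Daily Standup Reminders via Slack?</h3>")

for name, html in (("traditional", traditional), ("aeo", aeo)):
    share = question_heading_share(html)
    print(name, f"{share:.0%}", "meets 80% target" if share >= 0.8 else "below target")
```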

Architecture Component 2: Verbatim-Quotable Answer Blocks

Structure answers in 40-60 word extractable paragraphs that AI can cite directly.

Example Answer Block (Perplexity-Optimized):

Does Notion support real-time collaboration for remote teams?

Yes, Notion supports real-time collaboration with live cursors showing team member activity, instant sync across devices, and @mention notifications. Multiple users can edit the same page simultaneously with changes appearing immediately. Notion also offers commenting, task assignments, and revision history for distributed team workflows.

Format: Question heading → Concise answer (40-60 words) → Supporting details (optional 100-150 word expansion)
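The 40-60 word constraint is easy to enforce before publishing. Below is a minimal checker, run against the Notion example answer above. The function names are hypothetical. Word counts use simple whitespace splitting, so tokens like "real-time" count as one word.

```python
def word_count(text):
    return len(text.split())


def check_answer_block(answer, low=40, high=60):
    """Report whether an answer block fits the verbatim-quotable length window."""
    n = word_count(answer)
    if n < low:
        return f"too short ({n} words)"
    if n > high:
        return f"too long ({n} words); move detail into the supporting expansion"
    return f"ok ({n} words)"


notion_answer = (
    "Yes, Notion supports real-time collaboration with live cursors showing "
    "team member activity, instant sync across devices, and @mention "
    "notifications. Multiple users can edit the same page simultaneously "
    "with changes appearing immediately. Notion also offers commenting, task "
    "assignments, and revision history for distributed team workflows."
)
print(check_answer_block(notion_answer))  # ok (44 words)
```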

"Structure your content so AI can easily pull from it. Use clear headings that are actual questions. Add FAQ sections."

r/SEO, Reddit Thread

Architecture Component 3: Table-Based Feature Comparisons

Convert prose comparisons into structured tables for AI extraction.

Traditional Format (Paragraph):

"Asana offers Slack integration with task notifications and project updates, while Monday.com provides deeper Slack sync including status changes and deadline reminders..."

AEO-Optimized Format (Table):