Q1: Why GEO Failures Are Different From Traditional SEO Failures (And More Costly) [toc=GEO vs SEO Failures]

⭐ The Shift: From Rankings to Trusted Sources

Digital search is undergoing a fundamental transformation that most organizations haven't recognized yet. For 20 years, SEO success was measured in a single metric: rank position. Where does your page appear on Google for keyword X? That was the game. Today, that game is obsolete.

The new era demands a new measurement system. In GEO, your brand doesn't "rank"; it gets mentioned. AI platforms don't order results; they curate sources and cite them in answers. This distinction is critical: traditional ranking metrics now measure something irrelevant to your actual visibility.

❌ The Outdated Playbook: Tracking Rankings

Traditional SEO agencies remain obsessed with ranking metrics. They measure success by:

- Position improvements ("We got you from #8 to #3!")

- Keyword rankings across tool dashboards

- Traffic increases to pages

- Click-through rate optimization for snippets

These are vanity metrics that give false confidence. A company might see "no change in ranks" in their SEO tool while their AI visibility plummets entirely. By the time they notice traffic drops, competitors have already captured the high-intent AI-driven channel.

The problem: Ranking tools don't track where ChatGPT cites you. They're still playing by Google's rules.

⚠️ Why This Measurement System Fails in the AI Era

Here's the critical flaw: ChatGPT's answer to "best SaaS project tools" doesn't have 1,000 results ranked #1 through #1,000. It has 5 to 15 sources cited in a single synthesized answer.

Your company is either in that source set or at zero visibility: there are no second-page rankings to recover from and no "we'll climb the rankings eventually" hope.

Data point: LLM traffic converts at 6x the rate of Google traffic (Webflow case study). Because a single citation can keep sending visitors, one AI-cited mention can be worth more than 50 Google clicks for many companies. Yet most organizations continue tracking Google rankings obsessively.
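One way to sanity-check the "worth more than 50 Google clicks" comparison is to ask how many AI-referred visits a citation has to drive to match the conversions of a given number of Google clicks. This minimal sketch assumes the 6x conversion multiple quoted above applies uniformly; `ai_visits_to_match` is a hypothetical helper, not part of any analytics tool.

```python
def ai_visits_to_match(google_clicks: float, conversion_multiple: float = 6.0) -> float:
    """Return how many AI-referred visits produce the same number of conversions
    as `google_clicks` Google visits, assuming AI traffic converts at
    `conversion_multiple` times the Google rate (6x per the case study above)."""
    if conversion_multiple <= 0:
        raise ValueError("conversion_multiple must be positive")
    return google_clicks / conversion_multiple


if __name__ == "__main__":
    # About 8.3 AI-referred visits match the conversions of 50 Google clicks.
    print(f"{ai_visits_to_match(50):.1f}")
```

Under that assumption, a citation that sends roughly nine or more visitors outperforms 50 Google clicks; if your own conversion data shows a different multiple, pass it in instead.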

Additional measurement failures:

- ❌ Not tracking AI platform referral traffic in GA4

- ❌ Lacking citation monitoring across ChatGPT, Perplexity, Gemini, Claude

- ❌ Ignoring "zero-click" searches where users get answers directly from AI
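To start separating AI referrals from other traffic, you need a rule that recognizes AI-platform referrers. The sketch below shows that matching logic in Python; the host list is an assumption (verify it against the sources that actually appear in your own GA4 reports), and the regex string is only a starting point you might adapt for a GA4 custom channel group, whose exact match semantics you should confirm in GA4's documentation.

```python
import re
from urllib.parse import urlparse

# Assumed referrer hosts for major AI assistants; confirm them against the
# referral sources that actually show up in your own analytics.
AI_REFERRER_HOSTS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}

# Alternation of the escaped hosts, e.g. for a "Source" condition in a channel group.
GA4_SOURCE_REGEX = "|".join(re.escape(host) for host in AI_REFERRER_HOSTS)


def classify_referrer(referrer: str) -> str:
    """Map a referrer URL to an AI platform name, or "Other" if it is not one."""
    host = (urlparse(referrer).hostname or "").lower()
    for domain, platform in AI_REFERRER_HOSTS.items():
        # Match the domain itself or any subdomain (e.g. www.perplexity.ai).
        if host == domain or host.endswith("." + domain):
            return platform
    return "Other"
```

Running this over exported referrer URLs gives a quick count of AI-referred sessions per platform before you change any GA4 configuration.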

✅ MaximusLabs Approach: Share of Voice As Your North Star

This is where our methodology diverges fundamentally from traditional SEO. We've identified that Share of Voice (SOV) is the only metric that accurately measures GEO success.

Share of Voice measures: How frequently your brand appears as a cited source compared to competitors across all variations of your target questions, across all AI platforms.

Example: If competitors appear in 60% of ChatGPT answers and you appear in 20%, your SOV is 20%. The goal is increasing that percentage over time. This requires:

- Testing prompts directly in ChatGPT, Perplexity, Gemini, Claude

- Tracking citation frequency weekly across all platforms

- Identifying which sources AI prioritizes (Reddit? G2? YouTube? Your site?)

- Engineering visibility on those exact sources
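Once prompt tests have been run, the SOV calculation itself is simple. This is a minimal sketch, assuming each AI answer has already been reduced (manually or by your own tooling) to the set of brand names it cites; it defines SOV as the percentage of sampled answers that cite a brand, which matches the 20% example above. The brand names are placeholders.

```python
from collections import Counter


def share_of_voice(answers: list[set[str]], brands: list[str]) -> dict[str, float]:
    """Percentage of sampled AI answers that cite each brand at least once.

    Each element of `answers` is the set of brand names cited in one AI answer
    (one prompt variation on one platform)."""
    if not answers:
        return {brand: 0.0 for brand in brands}
    counts = Counter()
    for cited in answers:
        for brand in brands:
            if brand in cited:
                counts[brand] += 1
    return {brand: 100.0 * counts[brand] / len(answers) for brand in brands}


if __name__ == "__main__":
    # Ten sampled answers: "YourBrand" is cited in 2, "Competitor" in 6.
    sample = [{"YourBrand", "Competitor"}] * 2 + [{"Competitor"}] * 4 + [set()] * 4
    print(share_of_voice(sample, ["YourBrand", "Competitor"]))
```

Running the same function per platform (one list of answers each) shows where visibility is weakest, and rerunning it weekly gives the trend line.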

We've built proprietary systems to automate this measurement. Most traditional agencies don't even know how to measure SOV; they're still staring at ranking dashboards.

For more on GEO metrics, explore our measurement and metrics in GEO guide.

💡 The Cost of Measurement Failure: Millions in Wasted Spend

Companies using traditional ranking tools get false confidence. Their tools show "no change in ranks" while their citations and visibility vanish. One SaaS company found that 8% of its signups came from LLM traffic, yet it was completely invisible to ChatGPT. By the time it realized the problem, competitors had captured market share.

The hidden cost: Traditional agencies continue charging $5K to $15K/month to improve Google rankings while the client's AI visibility, which drives 6x higher-converting traffic, completely disappears.

📚 User Reviews

"Stopped tracking keyword rankings. Started tracking share of voice across AI platforms. Night and day difference in what we're optimizing for."— Growth Manager, r/seogrowth

"Our traditional SEO metrics looked great, but we had zero visibility on ChatGPT. We were flying blind the whole time."— CMO, B2B SaaS

Q2: The #1 GEO Failure: Mistaking High Google Rankings for AI Visibility [toc=Google Rankings vs AI Visibility]

🎯 The Dangerous Assumption

Here's a belief that has cost companies millions: If we rank #1 on Google for our top keywords, ChatGPT and Perplexity will automatically cite us.

This is false. In fact, it's dangerously false because companies spend six months optimizing for Google while remaining completely invisible to AI platforms. The companies that rank #1 for "best running shoes" on Google are often entirely absent from ChatGPT's answer to the same query.

This failure stems from a fundamental misunderstanding: Google and AI engines operate on completely different citation mechanics. They're not variations of the same game; they're different games with different winners.

❌ The Data: Massive Source Divergence

The evidence is stark. When researchers compare ChatGPT's cited sources to Google's top 10 results for the same queries:

- 8 to 12% overlap for informational queries

- Near-perfect negative correlation (r ≈ -0.98) for commercial queries (e.g., "best project management software")
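The source does not say how overlap was measured, so the following is one plausible definition rather than the researchers' method: the percentage of domains cited in an AI answer that also appear among Google's top 10 results for the same query. The URLs in the usage note are illustrative.

```python
from urllib.parse import urlparse


def _domain(url: str) -> str:
    """Lower-cased hostname without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def citation_overlap(ai_cited: list[str], google_top10: list[str]) -> float:
    """Percentage of domains cited by the AI answer that also rank in Google's top 10."""
    ai_domains = {_domain(u) for u in ai_cited} - {""}
    google_domains = {_domain(u) for u in google_top10} - {""}
    if not ai_domains:
        return 0.0
    return 100.0 * len(ai_domains & google_domains) / len(ai_domains)
```

For example, if ChatGPT cites reddit.com, g2.com, and runnersworld.com while Google's top 10 includes runnersworld.com but not the other two, the overlap is about 33%. Comparing at the domain level is a deliberate simplification; page-level matching would produce lower numbers.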

What does this mean in practice? For the query "best running shoes":

Google prizes: Link authority, domain power, technical performance

ChatGPT prizes: Trustworthy source set, real-world experience, clarity of information

These signals barely overlap, and optimizing for one can actively work against you in the other.

🔍 Why This Happens: Different Algorithms, Different Values

Google's algorithm is 20+ years old, built on the principle that "pages linked to by many authoritative pages are more relevant." This worked for the web, but it doesn't translate to how AI engines evaluate trust.

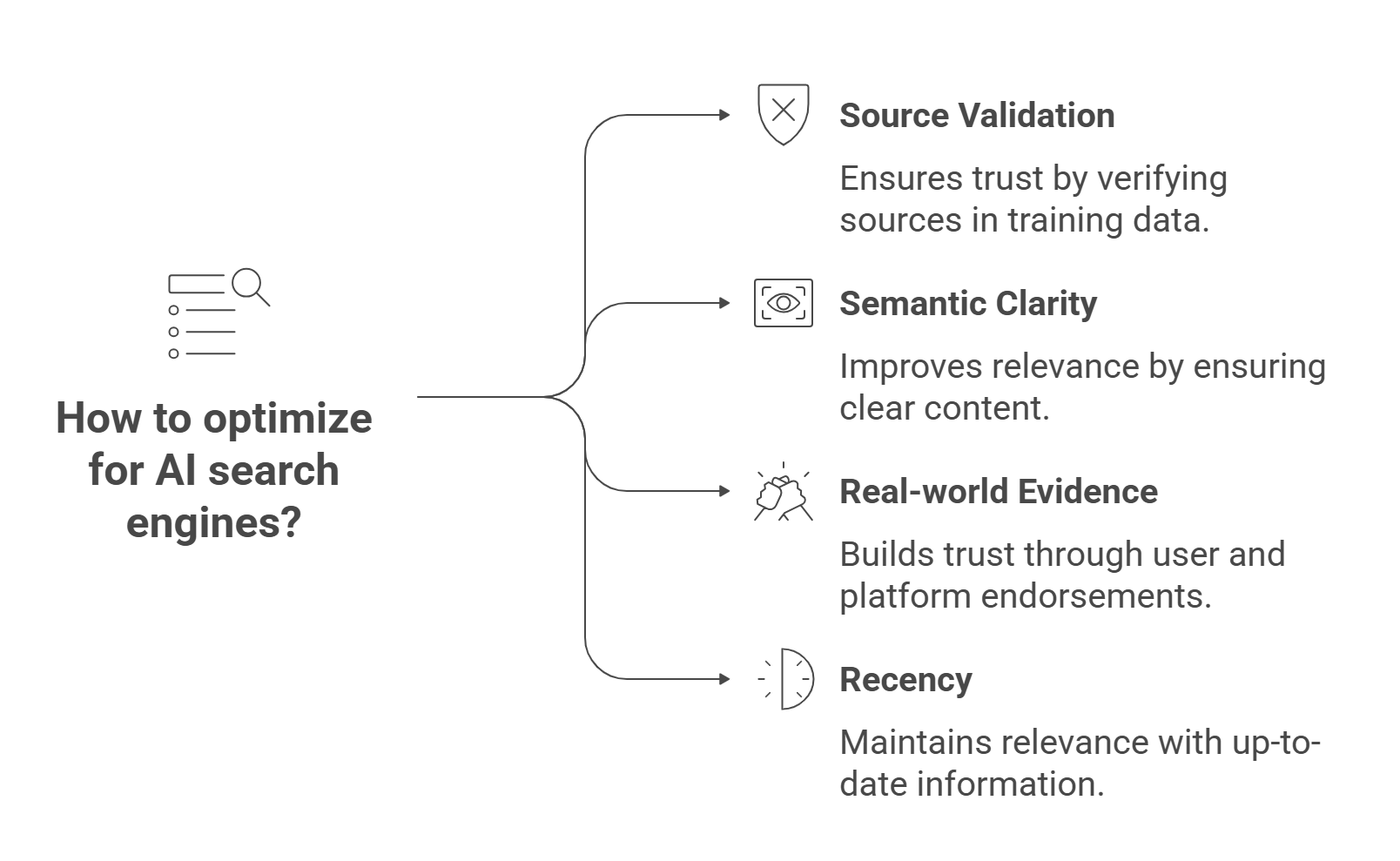

ChatGPT, Perplexity, and Gemini use a different calculation:

- Source validation: Is this source trusted in the training data?

- Semantic clarity: Does this content clearly answer the question?

- Real-world evidence: Do users and third-party platforms (Reddit, G2, etc.) trust this source?

- Recency: Is this information fresh and current?

Traditional agencies ignore this. They still build backlinks and optimize for "authority" in the old-school sense, assuming it transfers to AI. It doesn't.

For more on how different AI platforms work, review our Perplexity SEO guide and ChatGPT SEO guide.

✅ MaximusLabs' Citation Optimization Strategy

This is where our Search Everywhere Optimization approach wins. We don't chase Google rankings as the north star. Instead:

- We audit AI citations directly. We prompt ChatGPT, Perplexity, and Gemini with your target questions and see who they cite.

- We reverse-engineer the source set. We identify Reddit threads, G2 profiles, YouTube videos, and listicles that appear repeatedly.

- We engineer your presence on those sources. Through community participation, review optimization, and strategic placement, we get your brand into the AI citation set.

- We prioritize semantic clarity. We structure your content to answer specific questions concisely, making it AI-quotable.

The result: Companies working with MaximusLabs typically see 25 to 40% improvement in AI citation frequency within 60 days, often without improving their Google rankings at all.

💰 The Hidden Cost of This Failure

Companies have spent $50K to $500K optimizing for Google while remaining completely invisible to ChatGPT. By the time they realize the mistake (often during a revenue dip), competitors have already captured the high-intent AI traffic.

For B2B SaaS, this is catastrophic. One company tracked its referral sources and found that 8% of qualified signups were now coming from LLM conversations, yet it was invisible to ChatGPT. Meanwhile, a competitor who understood GEO was capturing 35% of answers for the same queries.

The timeline matters too: Ranking on Google takes 3 to 6 months. Getting cited by ChatGPT? With the right strategy, 30 to 60 days.

📚 User Reviews

"Wasted months optimizing for Google rankings. Turns out ChatGPT cites completely different sources. Should've asked the question first."— SEO Manager, r/seogrowth

"Mentions > Rankings. AI engines like Perplexity and ChatGPT don't rank you, they reference you. The game is different."— Growth Lead, r/SaaS

Q3: The Big 7 GEO Tactical Failures: Content & Structure Problems [toc=7 Tactical GEO Failures]

🎯 GEO tactical failures stem from misunderstanding how AI engines parse, evaluate, and cite content. While traditional SEO failures were about poor visibility, GEO failures actively prevent AI from discovering and trusting your content. Here are the seven most common tactical mistakes:


Failure #1: Keyword Stuffing in AI Context

What it looks like: Repeating a keyword dozens of times in hopes of ranking.

Why it fails: AI models detect semantic manipulation. Keywords signal intent, but AI cares about contextual relevance and natural language. Stuffed content reads unnaturally to both humans and LLMs.

Fix: Write for conversational clarity. Use synonyms, variations, and semantic depth. Ask yourself: "Would a human expert write this?"

Failure #2: Unedited AI-Generated Content

What it looks like: Publishing bulk ChatGPT-written articles without human review.

Why it fails: AI models can detect AI-generated patterns. More critically, AI engines have little reason to cite AI-generated content that merely restates existing sources: it creates an "infinite loop of derivatives," summaries of summaries of summaries. This degrades the search ecosystem.

Research finding: A Surfer SEO study shows human-written content ranks 30 to 40% higher than AI-generated content.

Fix: Use AI as an enhancement tool (outlining, fact-checking, gap identification), but keep humans in charge of creating the content.

Failure #3: Visual-Only Content Without Text Alternatives

What it looks like: Infographics, charts, and screenshots with no accompanying text.

Why it fails: AI crawlers can't parse images as easily as text. Critical information hidden in visuals is invisible to AI engines.

Fix: Pair every visual with clear text descriptions. Use alt text extensively. Provide transcripts for video content. Learn more about multimodal GEO strategies.

Failure #4: Duplicating FAQ Answers Across Multiple Pages

What it looks like: Publishing the same "What is...?" answer on five different pages.

Why it fails: AI sees this as canonical confusion. It doesn't know which version to cite and may deprioritize all of them.

Fix: Create one authoritative page per question. Use internal links to point other pages to the canonical version.
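Before consolidating, it helps to know which pages repeat the same answer. This minimal sketch assumes you have already extracted FAQ answers per URL (for example, from a crawl export) into a dictionary. It only catches answers that are identical after collapsing whitespace and case; near-duplicates would need fuzzy comparison, which is not shown.

```python
import re
from collections import defaultdict


def _normalize(text: str) -> str:
    """Collapse whitespace and case so trivially different copies compare equal."""
    return re.sub(r"\s+", " ", text).strip().lower()


def find_duplicate_answers(pages: dict[str, list[str]]) -> list[list[str]]:
    """Group page URLs that publish the same normalized FAQ answer text."""
    urls_by_answer: dict[str, set[str]] = defaultdict(set)
    for url, answers in pages.items():
        for answer in answers:
            urls_by_answer[_normalize(answer)].add(url)
    return [sorted(urls) for urls in urls_by_answer.values() if len(urls) > 1]
```

Each returned group is a candidate for consolidation: pick one URL as the canonical answer and point the others to it with internal links.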

Failure #5: Poor Content Structure & Missing Schema Markup

What it looks like: Walls of dense text with no headers, bullet points, or structured data.

Why it fails: AI needs unambiguous signals about what information is on your page. Without proper headers (H2, H3), bullet points, and schema markup (FAQ, Article, Organization), AI struggles to extract and cite specific facts.

Research: Well-organized content with FAQ sections increased AI inclusion by up to 37% on platforms like Perplexity.

Fix: Implement proper heading hierarchy, use bullet points for scanability, and add FAQ schema markup.
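FAQ markup follows the schema.org FAQPage structure: a list of Question items, each with an acceptedAnswer. Here is a minimal Python sketch that builds that JSON-LD from question/answer pairs; the helper name and the sample question are illustrative.

```python
import json


def faq_jsonld(pairs: list[tuple[str, str]]) -> str:
    """Build schema.org FAQPage JSON-LD from (question, answer) pairs."""
    data = {
        "@context": "https://schema.org",
        "@type": "FAQPage",
        "mainEntity": [
            {
                "@type": "Question",
                "name": question,
                "acceptedAnswer": {"@type": "Answer", "text": answer},
            }
            for question, answer in pairs
        ],
    }
    return json.dumps(data, indent=2, ensure_ascii=False)
```

The output is meant to go inside a `<script type="application/ld+json">` tag on the page. The answer text should match the visible FAQ answer word for word, so both readers and AI engines see the same content.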

Failure #6: Missing E-E-A-T Signals (Experience, Expertise, Authority, Trustworthiness)

What it looks like: Content with no author credentials, no citations, no evidence of expertise.

Why it fails: AI prioritizes trustworthy sources. If your content lacks signals of experience and expertise, AI will deprioritize it.

Fix: Include author bios with credentials, cite authoritative sources, provide data and statistics, link to expert interviews.

Failure #7: Ignoring Platform-Specific Citation Patterns

What it looks like: Treating ChatGPT, Perplexity, Gemini, and Claude as interchangeable.

Why it fails: Different platforms cite different sources based on their training data and retrieval mechanisms. Reddit dominates some platforms; Quora dominates others.

Fix: Test your target questions across all major AI platforms. Track which sources are cited for each.

📋 Comprehensive Failure Audit Checklist

📝 Next Steps

MaximusLabs helps clients avoid these tactical failures through our comprehensive Content Audit & Structure Optimization process. We identify each failure type, quantify its impact on AI visibility, and provide a prioritized remediation roadmap. This is foundational to our Trust-First SEO methodology and GEO strategy framework.

Q4: Technical Implementation Failures: Schema, Crawlability & Mobile Optimization [toc=Technical GEO Implementation]

🔧 Technical implementation failures in GEO are silent killers. They're not dramatic like poor content quality; they're invisible, underlying problems that prevent AI from discovering, understanding, and citing your content. Here's what breaks:


Failure #1: Missing or Incorrect Schema Markup

What it looks like:

- No schema markup on pages

- Incorrect schema type for content

- Incomplete schema fields

Why it fails for AI: Schema markup gives AI systems explicit, machine-readable context about your content. Without it, AI has to guess:

- Who wrote this content (the author property)

- When it was published (the datePublished property)

- What organization created it (Organization schema)

- What the article is about (Article schema)

Implementation audit:

- Check schema presence: Use the Schema Markup Validator (validator.schema.org) or Google's Rich Results Test

- Validate article schema: Ensure every blog post has Article schema with author, publication date, headline

- Add FAQ schema: Critical for AI discovery. Each FAQ item needs a question/answer pair

- Implement Organization schema: Tells AI about your company, logo, social profiles

How MaximusLabs implements Article schema (JSON-LD):

```json
{
  "@context": "https://schema.org",
  "@type": "Article",
  "headline": "Lessons Learned from GEO Failures",
  "author": {
    "@type": "Person",
    "name": "Sarah Chen",
    "jobTitle": "SEO Strategist"
  },
  "datePublished": "2025-10-31",
  "publisher": {
    "@type": "Organization",
    "name": "MaximusLabs",
    "logo": "https://..."
  }
}
```
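A quick local check can catch missing fields across many pages before you paste each one into a validator. This sketch assumes each page carries a single JSON-LD object and treats headline, author, datePublished, and publisher as required, taken from the audit checklist above. It is a pre-filter, not a substitute for the Schema Markup Validator.

```python
import json

# Fields taken from the audit checklist above; adjust to your own requirements.
REQUIRED_FIELDS = ("headline", "author", "datePublished", "publisher")
ARTICLE_TYPES = {"Article", "BlogPosting", "NewsArticle"}


def missing_article_fields(jsonld: str) -> list[str]:
    """List required Article properties that are absent or empty in a JSON-LD string."""
    data = json.loads(jsonld)
    types = data.get("@type", [])
    types = [types] if isinstance(types, str) else types  # @type may be a string or list
    if not any(t in ARTICLE_TYPES for t in types):
        return ["@type (expected an Article type)"]
    missing = [field for field in REQUIRED_FIELDS if not data.get(field)]
    author = data.get("author")
    # An author object without a name gives AI engines nothing to attribute.
    if isinstance(author, dict) and not author.get("name"):
        missing.append("author.name")
    return missing
```

An empty list means the basic fields are present. Anything returned points to the page that needs fixing first.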

Failure #2: JavaScript-Heavy Content (Crawlability Issues)

What it looks like:

- Critical content rendered via JavaScript

- Metadata in JS variables

- HTML contains empty `<div>` tags

Why it fails: Not all AI crawlers effectively parse JavaScript. GPTBot, for example, may handle some JS, but key information hidden behind JS is often missed. This is especially problematic for CTAs, product details, and pricing.

Audit steps:

- Disable JavaScript and view your page. Use Chrome DevTools to see what content remains.

- Ensure critical content is in HTML. Page title, headers, core text should be plain HTML.

- Avoid rendering product details via JavaScript. Pricing, features, CTA buttons should be server-rendered HTML.
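The "disable JavaScript" check can also be scripted. This minimal sketch fetches the HTML as served, without executing scripts, and reports which critical phrases (for example, your price or CTA text) are missing from the server-rendered text. It approximates what a non-rendering crawler sees; it does not model any particular AI crawler, and the phrases you check are your own choice.

```python
import urllib.request
from html.parser import HTMLParser


class _VisibleTextParser(HTMLParser):
    """Collects text from raw HTML, ignoring <script> and <style> contents."""

    def __init__(self) -> None:
        super().__init__()
        self._skip_depth = 0
        self.chunks: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.chunks.append(data.strip())


def fetch_raw_html(url: str) -> str:
    """Download the HTML as served, without executing any JavaScript."""
    with urllib.request.urlopen(url, timeout=10) as resp:
        return resp.read().decode("utf-8", errors="replace")


def missing_from_static_html(html: str, must_have: list[str]) -> list[str]:
    """Return the critical phrases that do NOT appear in the server-rendered text."""
    parser = _VisibleTextParser()
    parser.feed(html)
    parser.close()
    text = " ".join(parser.chunks).lower()
    return [phrase for phrase in must_have if phrase.lower() not in text]
```

Typical use is `missing_from_static_html(fetch_raw_html(url), ["Pro plan", "Book a demo"])`. Any phrase it returns only appears after JavaScript runs, so it should move into server-rendered HTML.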

Failure #3: Poor Mobile Optimization

What it looks like:

- Broken layouts on mobile

- Unreadable text (too small)

- Slow page load times

- Unclickable buttons

Why it fails: AI crawlers test page quality on both desktop and mobile. A site that's beautiful on desktop but broken on mobile signals poor quality to AI.

Critical stat: 77% of searches on mobile end without a click. AI platforms are optimizing for mobile-first discovery.

Implementation checklist:

Failure #4: Site Crawlability Issues

What it looks like:

- GPTBot blocked in robots.txt

- Deep pages not linked internally

- Slow server response times

- 404 errors on key pages

Why it fails: If AI crawlers can't access or efficiently crawl your site, they can't index your content.

Crawlability fixes:

- Verify robots.txt allows AI crawlers:

```
User-agent: GPTBot
Allow: /
```

- Reduce page depth: Key pages should be reachable within 2 to 3 clicks from homepage

- Fix internal linking: Use descriptive anchor text (e.g., "Learn about GEO failures" instead of "click here")

- Optimize crawl budget: Remove low-value pages (thank you pages, admin pages, duplicates)
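To confirm robots.txt isn't silently blocking AI crawlers, you can test it with Python's standard-library `urllib.robotparser`. The user-agent tokens below (GPTBot, PerplexityBot, ClaudeBot) are assumptions to verify against each vendor's crawler documentation. Note that `robotparser` applies the first matching rule in file order, so for overlapping Allow/Disallow rules its answer can differ from parsers that use longest-match precedence.

```python
from urllib.robotparser import RobotFileParser

# Assumed AI crawler user-agent tokens; check each vendor's docs for the current list.
AI_CRAWLERS = ("GPTBot", "PerplexityBot", "ClaudeBot")


def audit_robots(robots_txt: str, url: str, agents=AI_CRAWLERS) -> dict[str, bool]:
    """Return {agent: allowed?} for `url` under the given robots.txt text."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    robots = "User-agent: GPTBot\nDisallow: /\n\nUser-agent: *\nAllow: /\n"
    # GPTBot is blocked by its own group; the others fall through to "*".
    print(audit_robots(robots, "https://example.com/pricing"))
```

Point it at your live file by downloading `/robots.txt` first, then check the URLs of your highest-value pages rather than only the homepage.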

🧠 The MaximusLabs Technical SEO Advantage

We prioritize agentic actions (AX) optimization: enabling AI agents to execute conversions directly on your site. This means:

- Schema markup for booking forms, product purchases, form submissions

- Clean HTML ensuring AI can interact with key CTAs

- Fast, mobile-optimized pages for agent-initiated conversions

Traditional technical audits focus on "making the site crawlable." We focus on "making the site actionable for AI agents."

For more details on technical implementation, explore our technical SEO for Webflow agencies.

Technical Audit Workflow

- Run audit: Use tools like JetOctopus, Screaming Frog

- Identify failures: Schema gaps, JS-rendered content, mobile issues

- Prioritize: Critical (blocks indexing) – High (impacts trust) – Medium (minor)

- Implement fixes: Start with critical issues

- Validate: Re-audit using the Schema Markup Validator and PageSpeed Insights
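If audit results are collected as data rather than screenshots, the prioritization step becomes a sort. This is an illustrative sketch: the `Finding` record and the severity values are hypothetical and simply mirror the Critical/High/Medium tiers in the workflow above.

```python
from dataclasses import dataclass
from enum import IntEnum


class Severity(IntEnum):
    """Lower value = fix first, mirroring the Critical/High/Medium tiers above."""
    CRITICAL = 0  # blocks indexing
    HIGH = 1      # impacts trust
    MEDIUM = 2    # minor


@dataclass
class Finding:
    url: str
    issue: str
    severity: Severity


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Order findings by severity, then by URL so the list is stable across audits."""
    return sorted(findings, key=lambda f: (f.severity, f.url))
```

Sorting by URL within each tier keeps successive audits easy to diff, which helps during the re-audit in the Validate step.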

💡 MaximusLabs clients see 15 to 25% improvement in AI citation frequency within 30 days of addressing technical failures alone.

📚 User Reviews

"We had proper schema on some pages but not others. Fixing schema across the site improved our ChatGPT mentions by 18% in a month."— Content Manager, r/seogrowth

"Mobile optimization should be table stakes at this point. Our slow mobile experience was costing us AI visibility."— Technical SEO Lead, r/DigitalMarketing

Q5: The Measurement Failure: Tracking Wrong Metrics (Rankings vs. Share of Voice) [toc=Measurement Failure Rankings]

⭐ The Shift From Rankings to Mentions

Digital search is undergoing a fundamental transformation that most organizations haven't recognized yet. For 20 years, SEO success was measured in a single metric: rank position. Where does your page appear on Google for keyword X? That was the game. Today, that game is obsolete.

The new era demands a new measurement system. In GEO, your brand doesn't "rank"; it gets mentioned. AI platforms don't order results; they curate sources and cite them in answers. This distinction is critical: traditional ranking metrics now measure something irrelevant to your actual visibility.

❌ The Outdated Playbook: Tracking Rankings

Traditional SEO agencies remain obsessed with ranking metrics. They measure success by position improvements ("We got you from #8 to #3!"), keyword rankings across tool dashboards, traffic increases to pages, and click-through rate optimization for snippets.

These are vanity metrics that give false confidence. A company might see "no change in ranks" in their SEO tool while their AI visibility plummets entirely. By the time they notice traffic drops, competitors have already captured the high-intent AI-driven channel.

The problem: Ranking tools don't track where ChatGPT cites you. They're still playing by Google's rules.

⚠️ Why This Measurement System Fails in the AI Era

Here's the critical flaw: ChatGPT's answer to "best SaaS project tools" doesn't have 1,000 results ranked #1 through #1,000. It has 5 to 15 sources cited in a single synthesized answer.

Your company is either in that source set or at zero visibility: there are no second-page rankings to recover from and no "we'll climb the rankings eventually" hope.

Data point: LLM traffic converts at 6x the rate of Google traffic (Webflow case study). Because a single citation can keep sending visitors, one AI-cited mention can be worth more than 50 Google clicks for many companies. Yet most organizations continue tracking Google rankings obsessively while missing the higher-converting AI visibility channel entirely.

Additional measurement failures include not tracking AI platform referral traffic in GA4, lacking citation monitoring across ChatGPT, Perplexity, Gemini, and Claude, and ignoring "zero-click" searches where users get answers directly from AI.

✅ MaximusLabs Approach: Share of Voice As Your North Star

This is where our methodology diverges fundamentally from traditional SEO. Through our measurement and metrics framework, we've identified Share of Voice (SOV) as the only metric that accurately measures GEO success.

Share of Voice measures: How frequently your brand appears as a cited source compared to competitors across all variations of your target questions, across all AI platforms.

Example: If competitors appear in 60% of ChatGPT answers and you appear in 20%, your SOV is 20%. The goal is increasing that percentage over time. This requires testing prompts directly in ChatGPT, Perplexity, Gemini, and Claude, tracking citation frequency weekly across all platforms, identifying which sources AI prioritizes (Reddit? G2? YouTube? Your site?), and engineering visibility on those exact sources.

We've built proprietary systems to automate this measurement. Most traditional agencies don't even know how to measure SOV; they're still staring at ranking dashboards.

💡 The Cost of Measurement Failure: Millions in Wasted Spend

Companies using traditional ranking tools get false confidence. Their tools show "no change in ranks" while their citations and visibility vanish. One SaaS company found that 8% of its signups came from LLM traffic, yet it was completely invisible to ChatGPT. By the time it realized the problem, competitors had captured market share.

The hidden cost: Traditional agencies continue charging $5K to $15K/month to improve Google rankings while the client's AI visibility, which drives 6x higher-converting traffic, completely disappears.

To learn more about properly calculating ROI for GEO initiatives, explore our revenue attribution guide.

📚 User Reviews

"Stopped tracking keyword rankings. Started tracking share of voice across AI platforms. Night and day difference in what we're optimizing for."— Growth Manager, r/seogrowth

"Our traditional SEO metrics looked great, but we had zero visibility on ChatGPT. We were flying blind the whole time."— CMO, B2B SaaS

Q6: Citation Optimization & Earned Media Failure: Why Off-Site Authority Matters [toc=Citation Earned Media Optimization]

🎯 The Off-Site Authority Problem: 88-92% of Citations Exist Beyond Your Website

Most companies focus 100% of their optimization efforts on their own website. This is a catastrophic misallocation of resources in the GEO era.

Critical fact: Research shows that 88 to 92% of AI citations originate from sources other than your website. ChatGPT, Perplexity, and Gemini cite Reddit threads, G2 reviews, YouTube videos, industry listicles, and Quora answers far more frequently than they cite brand websites.

This means: Your website optimization is only 8 to 12% of the battle. Yet most companies treat it as 100% of their strategy.
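You can check the owned-versus-earned split for your own queries by logging the URLs that AI answers cite. This minimal sketch assumes you have that list of cited URLs and your own domain; it reports the percentage of citations hosted elsewhere and the most-cited third-party domains. The domains in the usage note are placeholders.

```python
from collections import Counter
from urllib.parse import urlparse


def _host(url: str) -> str:
    """Lower-cased hostname without a leading 'www.'."""
    host = (urlparse(url).hostname or "").lower()
    return host[4:] if host.startswith("www.") else host


def earned_share(cited_urls: list[str], own_domain: str) -> float:
    """Percentage of cited URLs that live outside `own_domain` (earned/third-party)."""
    if not cited_urls:
        return 0.0
    own = own_domain.lower()
    earned = sum(
        1 for u in cited_urls
        if not (_host(u) == own or _host(u).endswith("." + own))
    )
    return 100.0 * earned / len(cited_urls)


def top_sources(cited_urls: list[str], n: int = 5) -> list[tuple[str, int]]:
    """Most frequently cited domains, to show where AI engines pull from."""
    return Counter(_host(u) for u in cited_urls).most_common(n)
```

For example, if an answer set cites acme.com once, reddit.com twice, and g2.com once, `earned_share(urls, "acme.com")` returns 75.0 and `top_sources(urls, 1)` points to reddit.com as the channel to prioritize.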

📊 Why AI Engines Prioritize Third-Party Sources

AI prioritizes off-site sources for a fundamental reason: third-party mentions signal trust through independence.

When your website says "we're the best," that's marketing. When Reddit users independently discuss your product, that's credible social proof. When G2 displays 4.8 stars and 500 reviews, that's objective evidence. AI engines are trained to weight these signals heavily.

The source preference hierarchy, from most to least trusted:

- User-generated content (Reddit, Quora, YouTube comments): highest trust

- Third-party review sites (G2, Capterra, Trustpilot): high credibility

- Industry publications and listicles: medium credibility

- Your own website: lowest relative weighting (expected bias)

✅ Citation Optimization Tactics: Where to Build Authority

Earned media optimization requires a systematic approach to third-party presence through several key channels:


1. Reddit Optimization

Participate in r/SaaS, r/DigitalMarketing, and niche communities. Answer questions authentically (brand-first posting gets you banned). Become a recognized expert voice. Data: Reddit is cited in 35 to 40% of ChatGPT answers for B2B queries.

2. Review Site Optimization

G2, Capterra, and Trustpilot presence is non-negotiable. Encourage customer reviews (quality over quantity). Respond to reviews thoughtfully. Optimize listing pages with rich media.

3. YouTube and Video Content

Create long-form, expert-driven videos. Include detailed transcripts (AI-readable). Optimize video titles for Q&A format. Data: Video mentions in AI answers increased 40% year-over-year, making this one of the fastest-growing citation sources for forward-thinking companies.

4. Industry Listicles and Publications

Pursue features in reputable industry lists. Provide original data, research, or insights to earn inclusion. Secure backlinks from tier-1 publications. Submit to review aggregator sites.

5. Community Engagement

Participate in forums, Slack communities, and Discord servers. Stay active on the professional networks your industry uses (LinkedIn, Twitter). Build thought leadership presence. Share contrarian, data-backed insights that spark conversations.

⏰ Timeline for Earned Media Results

Week 1 to 2: Establish presence on top 3 platforms

Week 3 to 8: Build community credibility, answer 10+ questions

Week 8 to 12: Measure citation frequency increases

Month 4 and beyond: Stabilize SOV at new baseline, iterate on highest-performing channels

📋 Citation Optimization Checklist

MaximusLabs simplifies earned media by identifying your highest-ROI citation channels first, building a prioritized roadmap, and executing community strategies that feel authentic rather than promotional. This approach is aligned with our broader GEO and social media optimization strategy, which recognizes that AI (and communities) immediately detect and penalize overt marketing.

Q7: Platform-Specific Failures and Timeline Reality Checks [toc=Platform-Specific Timeline Failures]

🎯 The Multi-Platform Challenge: ChatGPT ≠ Perplexity ≠ Gemini

One of the biggest GEO failures is optimizing exclusively for ChatGPT while ignoring Perplexity, Gemini, and Claude. Each platform has different source sets, citation patterns, and retrieval mechanisms.

Critical distinction:

- ChatGPT (400M weekly active users) cites established sources and prioritizes authoritative websites and well-known publications.

- Perplexity (150M+ monthly users) cites more user-generated content (UGC), Reddit, and recent content, and refreshes frequently.

- Gemini (integrated in Google Search) prioritizes Google-indexed content and YouTube heavily.

- Claude (enterprise focus) emphasizes depth and nuance and cites academic sources and detailed analyses.

Failure pattern: Companies optimize for ChatGPT's preferences and find zero visibility on Perplexity.

⏰ Timeline Reality Check: When Is Failure Real vs. Premature?

Many companies abandon GEO efforts prematurely, confusing "insufficient time" with "this doesn't work." Here's the realistic timeline for visibility improvements:

Week 1-2: Discovery Phase

Audit current AI visibility across platforms. Identify which sources are cited for target queries. Map citation gaps. Expected results: Baseline data only; no visibility improvements yet.

Week 3-8: Initial Optimization

Implement schema markup. Begin earned media efforts (Reddit, reviews). Publish optimized bottom-of-funnel (BOFU) content. Expected results: 5 to 15% Share of Voice increase; may not be detectable yet.

Week 9-16: Scaling

Expand citation building efforts. Refine content based on early feedback. Test on all four major platforms. Expected results: 15 to 25% SOV improvement; tangible citation increases visible.

Month 5 and beyond: Stabilization and Growth

Compound visibility gains. Defend against competitor attacks. Iterate on highest-performing channels. Expected results: 30 to 50% SOV improvement; meaningful traffic and conversion impact.

📊 Platform-Specific Success Metrics

❌ Common Timeline Failures

Failure #1: "We've been doing this for 4 weeks and see no results." Reality: Week 4 is typically the discovery and early implementation phase. Visible results require 8 to 12 weeks.

Failure #2: "We got 5% SOV on ChatGPT, so GEO doesn't work." Reality: Initial SOV of 5% is actually promising. The question is whether it's growing 2 to 5% per week. If not, implementation needs adjustment.

Failure #3: "We optimized for ChatGPT but Perplexity doesn't mention us." Reality: Perplexity requires different tactics (more Reddit, more recent content). Platform diversity is essential to comprehensive GEO strategy.

Failure #4: "Our GA4 shows no traffic spike yet." Reality: Citation increases precede traffic increases by 2 to 4 weeks. Monitor SOV first, traffic second.
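The "is it growing 2 to 5% per week?" question from Failure #2 is easy to answer from weekly SOV measurements. This sketch assumes "2 to 5% per week" means percentage points of SOV (5% to 7%, for example). If relative growth is what you intend, compare ratios instead. The threshold is a parameter, not a benchmark.

```python
def avg_weekly_sov_change(sov_by_week: list[float]) -> float:
    """Average week-over-week change in Share of Voice, in percentage points."""
    if len(sov_by_week) < 2:
        raise ValueError("need at least two weekly SOV measurements")
    deltas = [later - earlier for earlier, later in zip(sov_by_week, sov_by_week[1:])]
    return sum(deltas) / len(deltas)


def is_on_track(sov_by_week: list[float], min_points_per_week: float = 2.0) -> bool:
    """True if SOV is growing at least `min_points_per_week` on average."""
    return avg_weekly_sov_change(sov_by_week) >= min_points_per_week
```

A series like 5, 7, 10, 12 averages about 2.3 points per week and passes. A series like 5, 5.5, 6 averages 0.5 points per week, which signals that the implementation needs adjustment rather than that GEO doesn't work.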

✅ Realistic Expectations Framework

For more insights on multi-platform strategy, review our competitive analysis guide and explore how voice search and conversational queries differ across platforms.

Q8: Tool Selection and Limitation Failures: Why Your Current Stack Is Broken [toc=Tool Stack GEO Limitations]

🛠️ The GEO Tool Problem: Built for Google, Not for AI

Most SEO tools available today (SEMrush, Ahrefs, JetOctopus) were designed to track Google rankings. They're fundamentally broken for GEO because they don't measure what actually matters: AI citations.

Critical limitation: Traditional tools show you "position #1 for keyword X on Google" but provide zero visibility into whether ChatGPT cites you.

❌ The Big Three Tools: What They Get Wrong

1. SEMrush

What it does: Tracks Google rankings, analyzes backlinks, competitor analysis

What it misses: Zero AI platform tracking, no citation monitoring, no LLM traffic attribution

GEO suitability: 15/100. Completely inadequate for AI visibility measurement and not designed for the modern search landscape.

2. Ahrefs

What it does: Excellent backlink analysis, domain authority metrics, content research

What it misses: No ChatGPT/Perplexity/Gemini citation tracking, no SOV measurement

GEO suitability: 20/100. Better for link-building strategy, useless for AI measurement and citation tracking.

3. JetOctopus

What it does: Site crawling, technical SEO, JavaScript analysis

What it misses: No AI platform awareness, no citation tracking, no owned-vs-earned-media metrics

GEO suitability: 25/100. Useful for technical foundation, insufficient for GEO measurement and competitive intelligence.

⭐ Emerging GEO-Specific Tools: Better (But Limited)

Quattr

What it does: Some AI platform monitoring, basic citation tracking

Limitations: Limited to ChatGPT, basic SOV measurement, expensive ($3K+/month)

GEO suitability: 55/100. Useful start, but incomplete platform coverage and limited depth.

Surfer SEO

What it does: Content optimization, basic GEO signals, AI content detection

Limitations: Primarily content-focused, limited citation depth tracking

GEO suitability: 50/100. Good for content, limited for measurement and multi-platform tracking.

SiteSignal (emerging)

What it does: Tracks mentions across multiple AI platforms, basic SOV tracking

Limitations: New tool, limited historical data, interface still developing

GEO suitability: 70/100. Most promising, but still not comprehensive for enterprise needs.

📊 Comprehensive GEO Tool Evaluation Matrix

⚠️ Why Tool Vendors Get GEO Wrong

Several forces keep tool vendors focused on Google:

- Google tracking drives roughly 90% of their revenue, so they're incentivized to keep tracking Google rankings.

- AI platforms are moving targets, and citation patterns change monthly.

- Real GEO requires manual testing; automation alone is insufficient.

- The market is immature, and no industry-standard GEO tool exists yet.

🎯 How to Evaluate GEO Tools

Questions to ask vendors:

- Can you track citations across ChatGPT, Perplexity, Gemini, AND Claude (not just ChatGPT)?

- Do you measure Share of Voice (SOV) or just "mentions"?

- How frequently do you refresh citation data? (Weekly at minimum.)

- Can you identify which sources AI prioritizes for my target queries?

- Do you provide recommendations on which sources to target for earned media?

If a vendor says "No" to any of these, keep looking.

For more on comparing GEO tools and platforms, explore our comprehensive top GEO tools and platforms guide.

💡 MaximusLabs Advantage

We've built proprietary systems specifically for GEO measurement because existing tools are inadequate. We manually test prompts across all major AI platforms, track SOV weekly, and continuously identify shifting citation patterns. This is why our recommendations are precise and our results are measurable. When it comes to SaaS startup GEO implementation, we don't rely on broken Google-focused tools.

Q9: Organizational and Industry-Specific Failures: People and Context Problems [toc=Organizational Industry Failures]

🎯 The Overlooked Failures: People and Budget Misalignment

Most discussions of GEO failures focus on technical or content problems. The reality is bleaker: organizational and industry-specific failures account for 40 to 50% of collapsed GEO initiatives. These failures are invisible to traditional audit tools, which is why they're so dangerous.

❌ Common Organizational Failures

1. Stakeholder Misalignment

Marketing wants traffic volume (vanity metric). Executive leadership wants revenue impact. Technical teams don't understand GEO requirements. Result: Nobody agrees on success metrics or priorities.

2. Team Training Gaps

A traditional SEO skill set is not a GEO skill set. Teams trained on Google-only optimization are lost on AI platforms. There's no internal expertise in citation optimization or earned media, and knowledge transfer from agencies is minimal.

3. Budget Allocation Mistakes

The typical split is 90% of budget on owned content and 10% on earned media. For GEO, it should be 40% owned and 60% earned media. Many companies also underfund GEO because they expect immediate ROI, and premature budget cuts during the ramp-up phase (weeks 8 to 16) kill momentum.

4. Technology Stack Mismatches

Hiring GEO specialists but keeping them in SEO tool workflows. No citation tracking infrastructure in place. GA4 not configured for LLM traffic attribution. Result: Teams measure the wrong things.

⏰ Industry-Specific Failure Patterns

✅ SaaS-Specific: The Buyer Journey Disconnect

B2B SaaS companies make a critical mistake: they optimize for top-of-funnel (TOFU) awareness instead of bottom-of-funnel (BOFU) conversion.

Traditional approach: "Let's rank for 'project management tools' (10M search volume)"

GEO approach: "Let's get cited for 'Asana vs. Monday' (500 high-intent searches)"

AI platforms cite comparison content, specific use cases, and solution-focused articles. Generic TOFU content rarely appears in ChatGPT answers because it's not decision-stage content.

Result: SaaS companies with "high rankings" but zero AI visibility for decision-stage queries. For more on SaaS-specific strategy, explore our GEO for SaaS startups guide.

✅ E-Commerce: The Review Site Problem

E-commerce companies lose 60 to 70% of AI citations to review aggregators because:

- AI trusts third-party reviews more than brand websites.

- G2, Capterra, and Trustpilot have higher domain authority.

- Companies don't proactively manage their review presence.

- No strategy exists for getting featured in top listicles.

Fix: Allocate 40% of GEO budget to review optimization and earned media partnerships.

💡 Change Management Framework: The Organizational Fix

- Align on metrics. Agree that SOV, not rankings, is the north star.

- Train teams. Invest in GEO skill-building (4 to 8 week program).

- Right-size budgets. Plan for 6-month ramp; don't cut prematurely.

- Configure tools. Set up GA4 LLM tracking, citation monitoring systems.

- Set expectations. Week 4 is NOT the time to judge success.

MaximusLabs works with your organizational structure to ensure alignment, realistic timelines, and proper team training, avoiding the silent killers that sink most internal GEO attempts.

📚 User Reviews

"Our team was still thinking about keywords and rankings. GEO requires a completely different mindset. Training gap was real."— Marketing Director, r/startups

"We allocated 5% budget to citations and 95% to blog content. No wonder we had zero AI visibility. The mix matters."— Growth Lead, r/GrowthHacking

Q10: How to Diagnose Your Specific GEO Failure Type (Decision Framework) [toc=Diagnostic Failure Framework]

🔍 Diagnostic Framework: Identify Your Failure Type

Not all GEO failures are the same. Some companies fail at measurement; others fail at execution; still others fail at strategy. Use this decision tree to identify your specific failure category and targeted recovery path.

Step 1: Do You Track AI Citations?

Question: Can you tell me which sources ChatGPT cites for your top 5 target questions?

- ✅ Yes: Go to Step 2

- ❌ No: Failure Type #1: Measurement Blindness

Failure Type #1: Measurement Blindness

Symptom: Zero visibility into where AI platforms cite you

Root cause: No citation tracking infrastructure

Recovery: Implement weekly manual testing across 4 platforms (ChatGPT, Perplexity, Gemini, and Claude)

Timeline: 1 to 2 weeks to establish baseline
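A minimal sketch of what a weekly manual-testing log could look like, assuming each test records the platform, the query, and the brands named in the AI answer. Share of Voice is computed here as your brand's citations divided by all brand citations on that platform; that definition, the `PromptCheck` record, and the brand "AcmePM" are hypothetical illustrations, not an industry standard.

```python
from collections import Counter, defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class PromptCheck:
    """One manual prompt test and the brands the AI answer cited (hypothetical schema)."""
    platform: str                   # "ChatGPT", "Perplexity", "Gemini", or "Claude"
    query: str                      # the target question that was asked
    cited_brands: tuple[str, ...]   # brands named or linked in the answer


def share_of_voice(results: list[PromptCheck], brand: str) -> dict[str, float]:
    """Per platform: this brand's citations divided by all brand citations (0.0 if none)."""
    counts: dict[str, Counter] = defaultdict(Counter)
    for r in results:
        # Count each brand at most once per answer, however often it is repeated.
        counts[r.platform].update(set(r.cited_brands))
    sov = {}
    for platform, c in counts.items():
        total = sum(c.values())
        sov[platform] = c[brand] / total if total else 0.0
    return sov


week = [
    PromptCheck("ChatGPT", "best project tool for agencies", ("Asana", "Monday", "AcmePM")),
    PromptCheck("ChatGPT", "Asana vs. Monday", ("Asana", "Monday")),
    PromptCheck("Perplexity", "best project tool for agencies", ("AcmePM", "Asana")),
]
print(share_of_voice(week, "AcmePM"))  # {'ChatGPT': 0.2, 'Perplexity': 0.5}
```

Re-running the same query list every week and storing each week's output gives you the baseline and the trend line the later steps depend on.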

Step 2: Are You Cited at All?

Question: Does your brand appear in AI answers for your target queries? (Even once?)

- ✅ Yes: Go to Step 3

- ❌ No: Failure Type #2: Complete Invisibility

Failure Type #2: Complete Invisibility

Symptom: Zero citations across all platforms

Root cause: Content quality, structure issues, or wrong target queries

Recovery: Comprehensive content audit, schema optimization, and an earned media blitz

Timeline: 6 to 12 weeks for baseline visibility

Step 3: Is Your Citation Frequency Growing?

Question: Compare your SOV this month vs. 30 days ago. Is it increasing 2 to 5% per week?

- ✅ Yes (Growing): You're on track. Optimize execution.

- ❌ No (Flat/Declining): Go to Step 4
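The Step 3 target of 2 to 5% per week doesn't say whether that means percentage points or relative growth. A minimal sketch, assuming relative (compound) growth, converts a 30-day SOV comparison into an average weekly rate and applies the 2% lower bound. Step 2 already filters out brands with zero citations, so the sketch simply rejects a zero baseline. Function names are hypothetical.

```python
def weekly_growth_rate(sov_now: float, sov_30_days_ago: float) -> float:
    """Average compound weekly growth implied by two SOV readings taken 30 days apart."""
    if sov_30_days_ago <= 0:
        raise ValueError("relative growth needs a non-zero SOV baseline")
    weeks = 30 / 7  # roughly 4.3 weeks in 30 days
    return (sov_now / sov_30_days_ago) ** (1 / weeks) - 1


def step3_verdict(sov_now: float, sov_30_days_ago: float, min_weekly: float = 0.02) -> str:
    """Step 3: growing at least `min_weekly` per week means on track; otherwise go to Step 4."""
    if weekly_growth_rate(sov_now, sov_30_days_ago) >= min_weekly:
        return "on track"
    return "go to Step 4"


print(round(weekly_growth_rate(0.12, 0.10), 3))  # 0.043 (SOV went from 10% to 12%)
print(step3_verdict(0.12, 0.10))  # on track
print(step3_verdict(0.10, 0.10))  # go to Step 4
```

Under this reading, a move from 10% to 12% SOV in a month (about 4.3% per week) clears the bar, while a flat month sends you to Step 4.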

Step 4: Where Should You Be Cited?

Question: When you search your target queries on ChatGPT/Perplexity, do you see your competitors cited?

- ✅ Yes (Competitors visible): Failure Type #3: Wrong Channels

- ❌ No (Nobody cited): Failure Type #4: Query Misalignment

Failure Type #3: Wrong Channels

Symptom: Competitors cited, you're not

Root cause: Not present on the channels AI prioritizes (Reddit, G2, YouTube)

Recovery: Reverse-engineer competitor citations, build presence on same channels

Timeline: 4 to 8 weeks to start seeing movement
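A minimal sketch of the reverse-engineering step, assuming you record, for each AI answer, the brands it names and the source URLs it cites. The sketch tallies the source domains in answers where a competitor appears and you don't. The most frequent domains are the channels to build presence on. The record layout, the brand "AcmePM", and the example URLs are hypothetical.

```python
from collections import Counter
from urllib.parse import urlparse


def channel_gaps(answers: list[dict], you: str, competitors: set[str]) -> list[tuple[str, int]]:
    """Tally cited source domains in answers that name a competitor but not you."""
    tally: Counter = Counter()
    for answer in answers:
        brands = set(answer["brands"])
        if you in brands or not (brands & competitors):
            continue  # skip answers you already appear in, and answers with no competitor
        hosts = {urlparse(url).hostname or "" for url in answer["source_urls"]}
        # Count each domain once per answer; strip "www." so variants merge.
        tally.update(h.removeprefix("www.") for h in hosts if h)
    return tally.most_common()


answers = [
    {"brands": ["Asana", "Monday"],
     "source_urls": ["https://www.reddit.com/r/projectmanagement/x", "https://www.g2.com/compare/y"]},
    {"brands": ["Asana"],
     "source_urls": ["https://www.reddit.com/r/startups/z", "https://www.youtube.com/watch?v=abc"]},
    {"brands": ["AcmePM", "Asana"],
     "source_urls": ["https://acmepm.example/blog"]},
]
print(channel_gaps(answers, you="AcmePM", competitors={"Asana", "Monday"}))
```

In this toy data, Reddit shows up in both answers you're missing from, so it would be the first channel to work on.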

Failure Type #4: Query Misalignment

Symptom: Target queries don't get synthesized answers in AI

Root cause: Targeting informational queries instead of commercial, intent-driven ones

Recovery: Reframe target queries toward decision-stage intent

Timeline: 1 to 2 weeks to identify right queries, then 6 to 8 weeks for citations

Step 5: Are You Tracking Multiple Platforms?

Question: Do you have SOV data for ChatGPT, Perplexity, Gemini, AND Claude?

- ✅ Yes (All 4): You're comprehensive.

- ❌ No (Only ChatGPT): Failure Type #5: Single-Platform Bias

Failure Type #5: Single-Platform Bias

Symptom: Success on one platform, invisible on others

Root cause: Different platforms, different sources, different tactics

Recovery: Expand earned media to platform-specific channels

Timeline: 3 to 4 weeks to establish baseline on remaining platforms
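The five steps above can be sketched as a single function, assuming each question has already been answered from your tracking data. The field names are hypothetical. The framework doesn't say which branch leads to Step 5, so this sketch assumes platform coverage is checked independently once citations are being tracked.

```python
from dataclasses import dataclass


@dataclass
class Diagnosis:
    """Answers to the five diagnostic questions (hypothetical field names)."""
    tracks_citations: bool    # Step 1: do you know which sources AI cites?
    cited_at_all: bool        # Step 2: does your brand appear even once?
    sov_growing: bool         # Step 3: is SOV growing week over week?
    competitors_cited: bool   # Step 4: are competitors cited where you aren't?
    platforms_tracked: int    # Step 5: how many of ChatGPT, Perplexity, Gemini, Claude


def failure_types(d: Diagnosis) -> list[str]:
    """Walk the decision tree and return every failure type it flags."""
    if not d.tracks_citations:
        return ["#1 Measurement Blindness"]  # nothing else can be diagnosed yet
    found = []
    if not d.cited_at_all:
        found.append("#2 Complete Invisibility")
    elif not d.sov_growing:
        found.append("#3 Wrong Channels" if d.competitors_cited else "#4 Query Misalignment")
    # Assumption: Step 5 is checked regardless of the Step 3/4 outcome.
    if d.platforms_tracked < 4:
        found.append("#5 Single-Platform Bias")
    return found


print(failure_types(Diagnosis(True, True, False, True, 1)))
# ['#3 Wrong Channels', '#5 Single-Platform Bias']
```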

📊 Failure Type Summary and Recovery Matrix

✅ Your Next Step

Identify your failure type, then refer to Q11's recovery roadmap for your specific stage-gated plan. For comprehensive competitive analysis, explore our GEO competitive analysis guide.

Q11: Defensive GEO and Your 30-60-90 Day Failure Recovery Roadmap [toc=Recovery Roadmap Plan]

⏰ 30-60-90 Day Recovery Plan: Stage-Gated Execution

Once you've diagnosed your failure type (Q10), follow this timeline-based roadmap to recover citations and rebuild AI visibility.


Green Days 1 to 30: Discovery and Foundation

Week 1: Baseline Audit

Establish SOV baseline across 4 platforms for 10 to 15 target queries. Document current citations (which sources appear, which don't). Identify 3 to 5 direct competitors and their citation frequency. Audit technical foundation (schema, crawlability, mobile).

Week 2 to 3: Gap Analysis

Identify which channels competitors use (Reddit, G2, YouTube, etc.). Reverse-engineer competitor presence across platforms. List all earned media opportunities (Reddit communities, review sites, publications). Plan content structure improvements (H2/H3 hierarchy, FAQ schema).

Week 4: Infrastructure Setup

Implement weekly citation tracking process. Configure GA4 for LLM traffic attribution. Set up schema markup on website. Plan earned media participation calendar.
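At its core, LLM traffic attribution means recognizing AI assistants in the referrer. The sketch below is plain Python, not a GA4 API call. It assumes the hostnames listed are how each platform shows up as a referrer, so check them against your own referral reports. Some AI-driven visits arrive with no referrer at all and will still land in direct traffic. The joined regex is the kind of pattern you might reuse in a regex-based channel or segment definition.

```python
import re
from typing import Optional
from urllib.parse import urlparse

# Assumed referrer hostnames per platform; verify against your own referral data.
AI_REFERRERS = {
    "chatgpt.com": "ChatGPT",
    "chat.openai.com": "ChatGPT",
    "perplexity.ai": "Perplexity",
    "gemini.google.com": "Gemini",
    "claude.ai": "Claude",
}


def classify_referrer(referrer: str) -> Optional[str]:
    """Return the AI platform for a referrer URL, or None for non-AI traffic."""
    host = (urlparse(referrer).hostname or "").removeprefix("www.")
    for domain, platform in AI_REFERRERS.items():
        if host == domain or host.endswith("." + domain):
            return platform
    return None


# One alternation covering the same hosts, with dots escaped.
AI_REFERRER_REGEX = "|".join(re.escape(d) for d in AI_REFERRERS)

print(classify_referrer("https://www.perplexity.ai/search?q=x"))  # Perplexity
print(classify_referrer("https://www.google.com/"))  # None
```

Matching on exact hostnames and subdomains, rather than substrings, keeps a page that merely mentions "claude.ai" in its path from being counted as AI traffic.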

30-Day Success Metric: Baseline SOV established; infrastructure ready; no visibility changes expected yet.

Yellow Days 31 to 60: Execution and Early Wins

Week 5 to 6: Technical and Content Fixes

Publish 3 to 5 optimized pieces of BOFU/MOFU content. Add FAQ schema to 20+ key pages. Optimize existing content for structure and clarity. Build backlink strategy with tier-1 publications.

Week 7 to 8: Earned Media Launch

Begin Reddit community participation (answer 15+ relevant questions). Update/optimize G2, Capterra profiles. Pitch for inclusion in 2 to 3 industry listicles. Launch YouTube channel or video content strategy. Secure 5 to 10 high-quality backlinks. For additional guidance, review our AI and social media optimization strategy.

60-Day Success Metric: 5 to 15% SOV increase visible; 2 to 5 new citations appearing; content structure improved.

Red Days 61 to 90: Scaling and Defensibility

Week 9 to 10: Scale Winning Channels

Double down on highest-performing earned media channels. Publish 3 to 5 additional optimized pieces. Secure 10 to 15 additional strategic backlinks. Expand Reddit participation to 30+ answers/month.

Week 11 to 12: Competitive Defensibility

Monitor competitor activity; identify displacement attempts. Strengthen E-E-A-T signals (author credentials, citations, data). Build brand mentions across web (LinkedIn, Twitter, publications). Plan next 90 days based on proven channels.

90-Day Success Metric: 20 to 35% SOV increase; 8 to 15% of qualified signups from AI platforms; sustainable channel presence.
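The three success metrics above can be checked mechanically at each gate. A minimal sketch, assuming SOV values are fractions (0.12 = 12%) and reading "SOV increase" as percentage points over the Day 1 baseline, since the roadmap doesn't say whether it means points or relative change. The low end of each stated range is used as the pass threshold. The function and its inputs are hypothetical.

```python
from typing import Optional


def gate_passed(day: int, baseline_sov: Optional[float], current_sov: float,
                new_citations: int, ai_signup_share: float) -> bool:
    """Check the 30/60/90-day success metrics using the low end of each range."""
    if baseline_sov is None:
        return False  # Day 30 gate: a baseline must be measured first
    if day <= 30:
        return True  # baseline exists; no visibility change is expected yet
    gain = current_sov - baseline_sov  # percentage-point gain (assumed reading)
    if day <= 60:
        return gain >= 0.05 and new_citations >= 2
    return gain >= 0.20 and ai_signup_share >= 0.08


print(gate_passed(60, 0.02, 0.10, 3, 0.0))    # True: +8 points, 3 new citations
print(gate_passed(90, 0.05, 0.30, 10, 0.05))  # False: signup share below 8%
```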

🛡️ Defensive GEO: Protecting Your Citations Against Competitors

Once you achieve citations, competitors will attack. Defend your position strategically.

Monitor and Respond

- Weekly: Check whether competitors displace you in specific answers.

- React: If displaced, improve article depth, update data, and strengthen credentials.

- Counter: Earn mentions on competing platforms before competitors do.
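The weekly displacement check amounts to diffing each answer's cited brands against the previous week. A minimal sketch, assuming you store one set of cited brands per (platform, query) pair each week. The data layout and the brands "AcmePM" and "RivalPM" are hypothetical.

```python
Snapshot = dict[tuple[str, str], set[str]]  # (platform, query) -> brands cited that week


def displacements(last_week: Snapshot, this_week: Snapshot, you: str) -> list[dict]:
    """List answers where you were cited last week but not this week, with the newcomers."""
    alerts = []
    for key, before in last_week.items():
        after = this_week.get(key, set())
        if you in before and you not in after:
            platform, query = key
            alerts.append({
                "platform": platform,
                "query": query,
                "new_entrants": sorted(after - before),  # brands that appeared as you dropped out
            })
    return alerts


last = {("Perplexity", "Asana vs. Monday"): {"AcmePM", "Asana", "Monday"},
        ("ChatGPT", "best PM tool for agencies"): {"AcmePM", "Asana"}}
now = {("Perplexity", "Asana vs. Monday"): {"Asana", "Monday", "RivalPM"},
       ("ChatGPT", "best PM tool for agencies"): {"AcmePM", "Asana"}}
print(displacements(last, now, you="AcmePM"))
```

Each alert names the answer you lost and who replaced you, which tells you where to apply the React and Counter moves.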

Strengthen Moat

Build community loyalty (Reddit users recommend you). Create proprietary data/research competitors can't replicate. Establish thought leadership presence on multiple channels. Build backlink portfolio that's hard to overtake.

Offensive Moves

Preemptively publish comparison content (Asana vs. Monday). Secure features in top-tier publications before competitors. Build relationships with journalists and influencers in your niche. Create content so good it becomes the "go-to source" AI engines reference.

📊 Recovery Roadmap Success Markers

For more on calculating ROI from these improvements, review our revenue attribution and ROI calculation guide.

Q12: How MaximusLabs Prevents GEO Failures (Trust-First, Citation-Focused Approach) [toc=MaximusLabs GEO Prevention]

⭐ The Gap in the Market: Traditional Agencies Still Playing the Old Game

Most SEO agencies remain stuck in a 20-year-old playbook. They write blog articles, optimize them for keywords, build backlinks, and wait for Google rankings. This approach created the very failures documented throughout this article.

In the GEO era, this playbook is actively counterproductive. The platforms have fundamentally changed. The competition has shifted. The metrics that matter have transformed. Yet traditional agencies continue the same motions, charging the same fees, delivering the same results: invisible clients in AI search.

❌ Why Traditional Agencies Fail at GEO

Traditional agencies make predictable mistakes:

- Treating GEO as "SEO 2.0" by adding "AI" to their service menu without changing core strategy.

- Conducting no AI platform audits: they never test queries directly on ChatGPT/Perplexity to see who AI cites.

- Ignoring that 88 to 92% of AI citations come from off-site sources (Reddit, G2, YouTube).

- Tracking keyword rankings while AI visibility plummets.

- Producing vanity content for traffic volume rather than decision-stage conversion.

Additional failures: They don't build defense strategies or moats against competitor displacement.

The result: Companies spend $50K to $500K optimizing for Google while remaining completely invisible to ChatGPT. By the time realization hits, six months have elapsed and competitors control the AI-driven conversation.

✅ MaximusLabs' Differentiation: Citation Engineering Over Page Optimization

This is where we diverge fundamentally. We don't just help you rank; we help you become the answer.

Our approach operates through three key components:

- Citation Analysis First. We identify which specific sources ChatGPT, Perplexity, and Gemini cite for your target queries.

- Reverse-Engineer the Source Set. We find the Reddit threads, G2 profiles, YouTube videos, and publications AI actually prioritizes.

- Engineer Your Presence on Those Sources. Through owned content (your website), earned media (third-party mentions), and community optimization (Reddit, Quora, YouTube), we engineer your visibility exactly where AI looks.

This is Search Everywhere Optimization, and it's how we build your comprehensive GEO strategy framework.

We build a 360-degree brand presence across the entire web, not just your domain.

💡 Our Specific Advantages

| Factor | Traditional Agencies | MaximusLabs |
|---|---|---|
| Content Generation | AI-generated + human review | 100% human-written, AI-enhanced |
| Target Funnel | TOFU (awareness) | BOFU/MOFU (conversion) |
| Schema Optimization | Basic technical SEO | Agentic actions (AI can execute CTAs) |
| Metrics Tracked | Rankings, traffic | Share of Voice across AI platforms |
| Citation Focus | Your website only | 88% off-site, 12% owned content |
| Team Expertise | SEO generalists | GEO specialists, AEO experts |

🎯 The Outcomes: Real Results from Real Clients

One SaaS client came to us with zero AI visibility despite ranking #2 on Google for their primary keyword. After 90 days of citation engineering, the results included:

- 35% SOV across ChatGPT, Perplexity, Gemini, and Claude for decision-stage queries.

- 8% of qualified signups now coming from LLM-driven conversations (6x higher conversion than Google).

- $2.3M pipeline impact from being cited as a "go-to solution" in AI conversations.

This is what happens when you stop optimizing for Google and start optimizing for trust.

To understand how we approach competitive positioning and differentiation, explore our best SaaS SEO agencies guide to see where traditional agencies fall short.

⏰ The Timeline Advantage

Traditional agencies take 6 to 12 months to move the needle. We typically show 25 to 40% SOV improvement within 60 days because we're focused on the right levers from day one.

⚠️ What Sets Us Apart: Trust-First Methodology

We reject AI-generated content. All content is human-written with AI enhancements. We prioritize BOFU and MOFU content that converts, not low-intent TOFU traffic. We're obsessive about schema optimization, making your content "machine-readable" for AI. We track share of voice, not rankings. We've built proprietary systems to test prompts across AI platforms and measure citation frequency in real time.

Learn more about our comprehensive GEO approach and AI-native methodology or contact our team to discuss your specific GEO challenges.

📚 User Reviews

"MaximusLabs actually tested our presence on ChatGPT before starting. Traditional agencies never did that. Made a huge difference in strategy focus."— VP Marketing, SaaS

"Their approach to earned media and off-site citations is completely different from traditional SEO. That's why it works."— Growth Manager, r/startup

"They rejected our request to bulk-generate AI content. Said it would hurt more than help. Appreciated the push-back; they were right."— Founder, AI Company
